Global cloud spend is expected to approach $600 billion in 2023, a 21% increase over the previous year, which shows just how central the cloud has become to the global economy. More and more companies are born in the cloud (digital natives), joining those that have migrated because they recognized that the public cloud provides the best value and opportunity for business expansion.

As companies of all sizes continue to grow, the scope of their cloud environments and resulting spend will only get bigger; as your operations scale, it’s only natural that your infrastructure costs will rise as well. The key to success, and one of the signs of an organization that is well-versed in FinOps practices, is growing and scaling your cloud footprint to suit your business needs and nothing more. A big part of that revolves around optimizing your spend wherever possible by taking advantage of savings opportunities, as well as reining in unnecessary costs.

This is easier said than done. Cloud environments are dynamic and complex, and keeping your spend under control takes both expertise and diligence. With that in mind, here are three areas in which you can start or improve your AWS cost optimization efforts:

Increase your commitment discount coverage

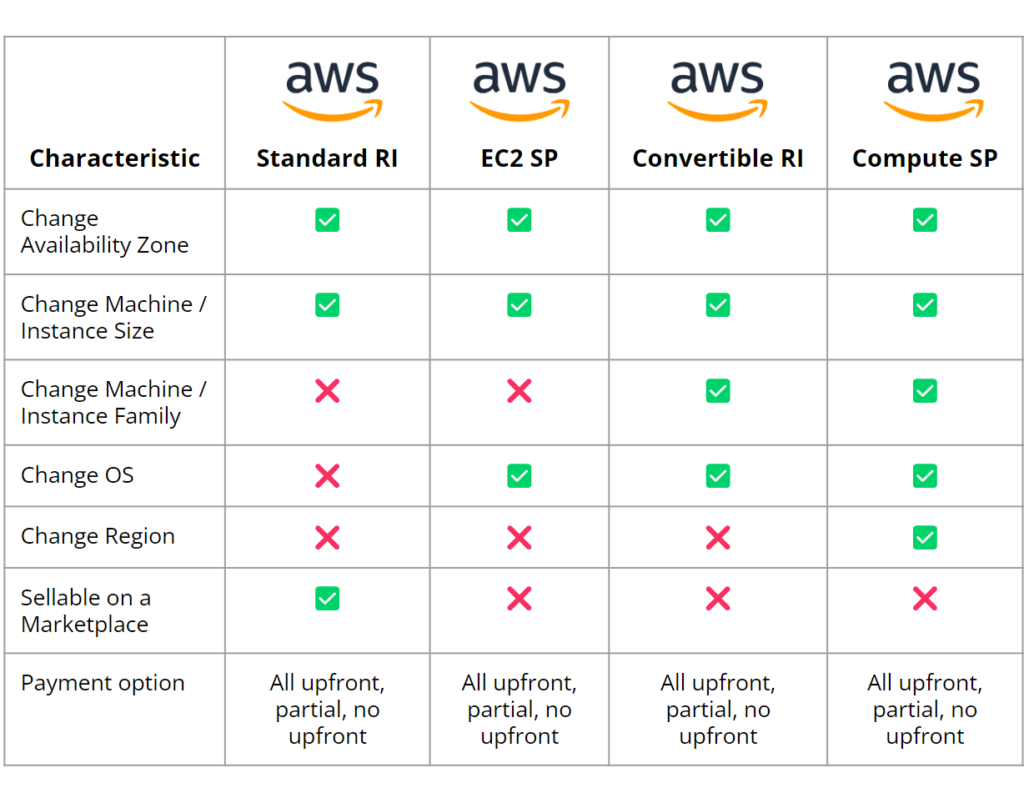

With EC2 and other compute services likely making up 50-70% of your overall cloud bill, lowering those costs represents the best opportunity for significant savings. AWS offers resource commitment discounts in the form of Savings Plans and Reserved Instances (RIs). Both can be purchased for 1- or 3-year terms, and they differ in important ways when it comes to buybacks and flexibility.

Whichever commitment type you choose, managing commitments and maximizing the available savings is a full-time job. You must take several factors into account when forecasting compute usage (machine types, regions, cloud services, and so on), and then track usage and expiration dates to ensure that you’re hitting the right milestones.

The best way to maximize your commitment coverage is to cover as much of your workloads as you can with 3-year RIs or Savings Plans, which offer 60-70% discounts as opposed to the 25-35% offered by 1-year terms. Bear in mind, of course, that 3-year commitments are inherently riskier than 1-year deals simply because it’s much harder to predict your workloads that far out. Companies that have the maturity and stability to do so could cover roughly half of their forecasted workloads with 3-year commitments, and then use 1-year commitments to round out their discount coverage.
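To make the trade-off concrete, here is a minimal sketch of the arithmetic behind a mixed commitment strategy. The function name, the 50/30 coverage split, and the $100,000 monthly forecast are illustrative assumptions, and the discount rates are simply midpoints of the ranges quoted above, not actual AWS pricing.

```python
def blended_discount(coverage_3yr, discount_3yr, coverage_1yr, discount_1yr):
    """Return the overall discount on forecasted on-demand spend.

    Coverage values are fractions of forecasted spend (0.0 to 1.0).
    Anything not covered by either commitment is billed at on-demand rates.
    """
    if coverage_3yr + coverage_1yr > 1.0:
        raise ValueError("combined coverage cannot exceed 100% of the forecast")
    return coverage_3yr * discount_3yr + coverage_1yr * discount_1yr


# Hypothetical inputs: half the forecast on 3-year terms at 65% off,
# another 30% on 1-year terms at 30% off, the rest left on-demand.
overall = blended_discount(0.50, 0.65, 0.30, 0.30)
monthly_on_demand = 100_000  # hypothetical forecast in USD

print(f"Blended discount: {overall:.1%}")
print(f"Estimated monthly bill: ${monthly_on_demand * (1 - overall):,.0f}")
```

Note that this sketch assumes every committed dollar is actually used. If usage drops below the commitment, the unused portion is still paid for, which is exactly the overcommitment risk described above.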

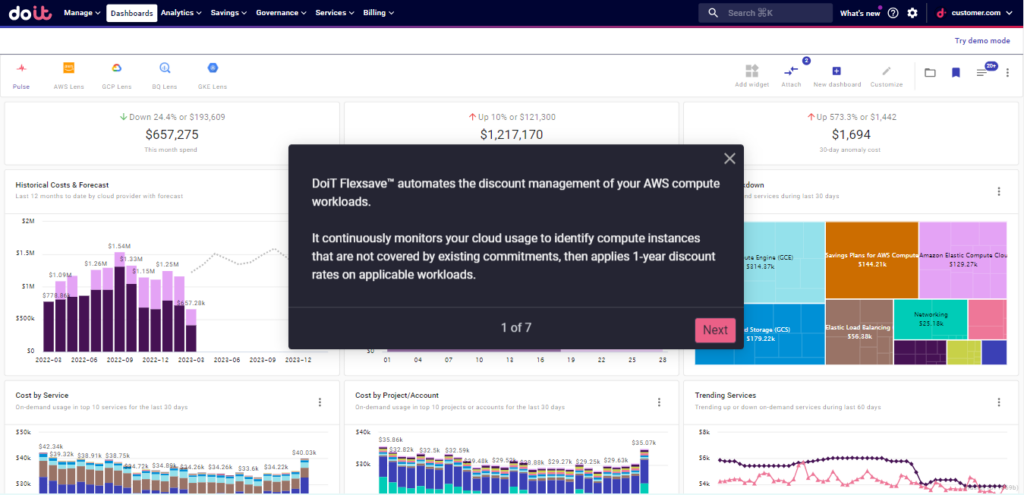

The beauty of a solution like DoiT Flexsave™ is that it automates the management of those 1-year commitments to maximize the savings of any on-demand compute workloads (including EC2, Fargate, and Lambda) that aren’t already discounted. Not only does this ease the FinOps management burden, but it also removes the risk of overcommitting to resources that you won’t end up using.

Flexsave also serves as a FinOps hub for your AWS commitment strategy by providing an overview of your existing Savings Plans, as well as analytics into your discount coverage directly on your dashboard. This graph shows the coverage of your ten most prevalent SKUs by default, but if you’d like to see more SKUs, or break it down by region, you can do so by opening up a Cloud Analytics report directly from the dashboard. From there, you can get a better understanding of what services may not be eligible for discounts, and explore whether there are ways to rearchitect those workloads to improve your savings.

Click the image below to learn more about how to use the Flexsave dashboard:

Take advantage of Spot Instances

Like Savings Plans and RIs, Spot Instances can provide steep discounts compared with on-demand pricing, but with a heavy caveat: Spot Instances can be reclaimed by AWS with just a 2-minute warning. So while the savings can be even greater, with discounts of up to 90%, Spot Instances come with a great deal more risk and should really only be used for fault-tolerant operations like containerized workloads, stateless web servers, testing environments, or big data applications.
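A fault-tolerant workload typically watches for that warning so it can drain work before the instance goes away. The sketch below parses the JSON body that the EC2 instance metadata service returns at `spot/instance-action` once a notice has been issued. The helper name is hypothetical, and actually fetching the URL (which requires a metadata session token under IMDSv2, and returns 404 when no interruption is pending) is left out to keep the example self-contained.

```python
import json
from datetime import datetime, timezone

# Instance metadata path for Spot interruption notices (only reachable
# from inside an EC2 instance; shown here for reference, not fetched).
INSTANCE_ACTION_URL = "http://169.254.169.254/latest/meta-data/spot/instance-action"


def seconds_until_interruption(notice_body, now=None):
    """Parse an interruption notice and return (action, seconds_remaining).

    `notice_body` is the JSON text of the notice, for example
    '{"action": "terminate", "time": "2017-09-18T08:22:00Z"}'.
    """
    notice = json.loads(notice_body)
    deadline = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ")
    deadline = deadline.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return notice["action"], (deadline - now).total_seconds()


# Example with a fixed clock so the result is reproducible.
sample = '{"action": "terminate", "time": "2017-09-18T08:22:00Z"}'
fixed_now = datetime(2017, 9, 18, 8, 20, 0, tzinfo=timezone.utc)
action, remaining = seconds_until_interruption(sample, now=fixed_now)
print(action, remaining)
```

In practice a small agent would poll the endpoint every few seconds and, once a notice appears, use the remaining time to checkpoint state or deregister from a load balancer.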

Spot Instances can be managed on AWS with Auto Scaling groups (ASGs), but deploying them requires a certain degree of flexibility regarding the instance types and Availability Zones that you request. Why? Because there may be no Spot capacity available at your target specifications. ASGs must also be manually configured and regularly tuned to ensure that your compute needs are met consistently without meaningful interruptions. The process is manual and tedious, and a single incorrect setting can leave the group unable to launch Spot capacity at all.
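As a rough illustration of what that flexibility looks like in configuration, the sketch below builds the `MixedInstancesPolicy` structure that an ASG accepts (for example via boto3’s `create_auto_scaling_group`). The helper name, the launch template name, and the instance types are assumptions chosen for illustration; the API call itself is omitted so the example runs without AWS credentials.

```python
def build_mixed_instances_policy(launch_template_name, instance_types,
                                 on_demand_base=1, on_demand_pct_above_base=0):
    """Build a MixedInstancesPolicy dict for an Auto Scaling group.

    Listing several instance types gives the group alternatives when
    Spot capacity for one type is unavailable.
    """
    if len(instance_types) < 2:
        raise ValueError("list several instance types so Spot has alternatives")
    return {
        "LaunchTemplate": {
            "LaunchTemplateSpecification": {
                "LaunchTemplateName": launch_template_name,
                "Version": "$Latest",
            },
            # One override per acceptable instance type.
            "Overrides": [{"InstanceType": t} for t in instance_types],
        },
        "InstancesDistribution": {
            # Always keep this many on-demand instances as a stable base.
            "OnDemandBaseCapacity": on_demand_base,
            # Share of capacity above the base that stays on-demand (0 = all Spot).
            "OnDemandPercentageAboveBaseCapacity": on_demand_pct_above_base,
            "SpotAllocationStrategy": "price-capacity-optimized",
        },
    }


# Hypothetical template name and instance types.
policy = build_mixed_instances_policy(
    "web-tier-template", ["m5.large", "m5a.large", "m6i.large"]
)
print(policy["InstancesDistribution"])
```

The on-demand base and percentage are the main tuning knobs: raising either trades some savings for more guaranteed capacity.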

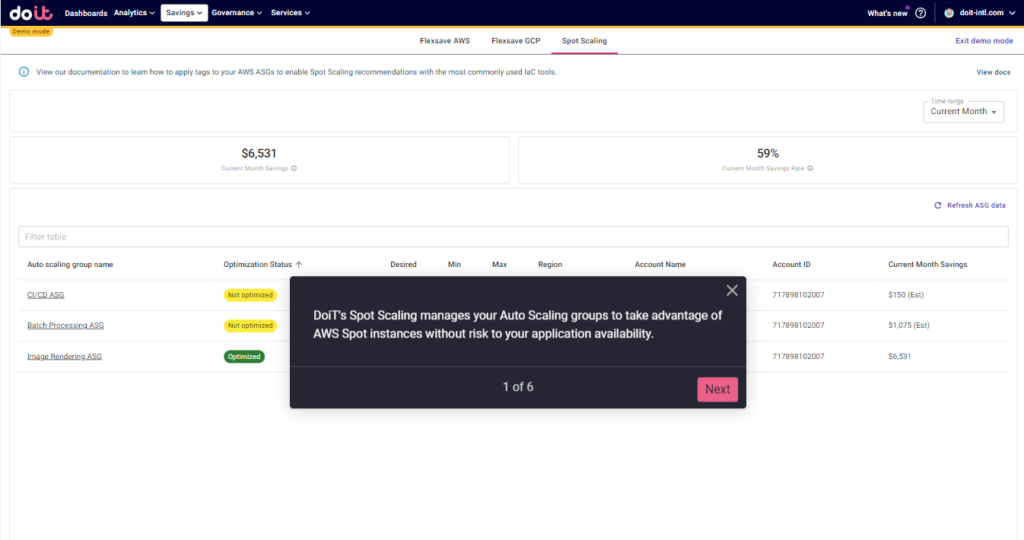

Given these risks and the effort involved, many practitioners choose not to bother with Spot Instances at all. DoiT Spot Scaling automates this process to reduce the risk of interruptions and help you run your workloads reliably on Spot Instances. The tool automatically analyzes your ASGs to recommend best-practice configurations, then replaces on-demand instances with heavily discounted Spot Instances when applicable. And to address the remaining risk, Spot Scaling falls back to on-demand instances when there isn’t any Spot capacity available in the market.

To see Spot Scaling in action, click the image below:

Audit your storage for optimization opportunities

While not quite as dominant as compute on your monthly cloud bill, storage costs can quickly ramp up as you scale, which makes it an area that should be regularly audited to ensure that costs are kept to a minimum. AWS provides different classes of storage that vary in price depending on how frequently you need to access the data, which means that objects whose retrieval frequency has changed may still be stored in S3 Standard when they could be moved to Infrequent Access or even Glacier.

To maximize the cost efficiency of your storage classes, you can use lifecycle policies to automatically transition data between storage classes based on your access patterns. Make sure that you’re also taking into account both the number of objects and their size: lifecycle transitions are billed per request, while retrieval and transfer are charged per GB. You can also use Amazon S3 Select to retrieve specific data from S3 objects, potentially reducing the amount of data transferred.
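For illustration, a lifecycle policy of this kind can be expressed as the configuration structure that S3 accepts (for example through boto3’s `put_bucket_lifecycle_configuration`). The helper name, rule ID, `logs/` prefix, and day thresholds below are assumptions, and the 30-day gaps enforced by the check reflect our understanding of S3’s minimums for Standard-IA transitions rather than a complete statement of its rules.

```python
def build_lifecycle_configuration(prefix, days_to_ia=30, days_to_glacier=90):
    """Build an S3 lifecycle configuration that tiers objects down over time.

    Objects under `prefix` move to Standard-IA after `days_to_ia` days
    and to Glacier after `days_to_glacier` days.
    """
    if days_to_ia < 30 or days_to_glacier < days_to_ia + 30:
        raise ValueError("expected at least 30 days before each transition")
    return {
        "Rules": [
            {
                "ID": "tier-down-cold-data",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": days_to_ia, "StorageClass": "STANDARD_IA"},
                    {"Days": days_to_glacier, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


# Hypothetical prefix: only objects under logs/ are tiered down.
lifecycle = build_lifecycle_configuration("logs/")
print(lifecycle["Rules"][0]["Transitions"])
```

Scoping the rule to a prefix keeps frequently accessed data in S3 Standard while older, colder data moves to cheaper classes on its own.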

Another thing to keep in mind is that a lot of small objects can get expensive very quickly. If you have a lot of tiny files, it might make sense to store them in a database such as DynamoDB or MySQL rather than in S3. If that’s not applicable, explore the possibility of batching them and storing them as a single file.
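Batching can be as simple as packing many small payloads into one compressed archive before upload. The sketch below does this in memory with Python’s standard `tarfile` module; the function names and the sample event files are hypothetical, and the upload step is omitted.

```python
import io
import tarfile


def bundle_small_objects(objects):
    """Pack a dict of {name: bytes} into a single gzip-compressed tar archive."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as archive:
        for name, payload in sorted(objects.items()):
            info = tarfile.TarInfo(name=name)
            info.size = len(payload)
            archive.addfile(info, io.BytesIO(payload))
    return buffer.getvalue()


def unbundle(archive_bytes):
    """Recover the original {name: bytes} mapping from an archive."""
    with tarfile.open(fileobj=io.BytesIO(archive_bytes), mode="r:gz") as archive:
        return {
            member.name: archive.extractfile(member).read()
            for member in archive.getmembers()
            if member.isfile()
        }


# Hypothetical tiny files that would otherwise be 1,000 separate objects.
small_files = {f"events/{i:04d}.json": b'{"ok": true}' for i in range(1000)}
bundle = bundle_small_objects(small_files)
print(f"{len(small_files)} files packed into one {len(bundle)}-byte archive")
```

The trade-off is that reading one small file now means fetching and unpacking the whole archive, so this suits data that is usually read in bulk.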

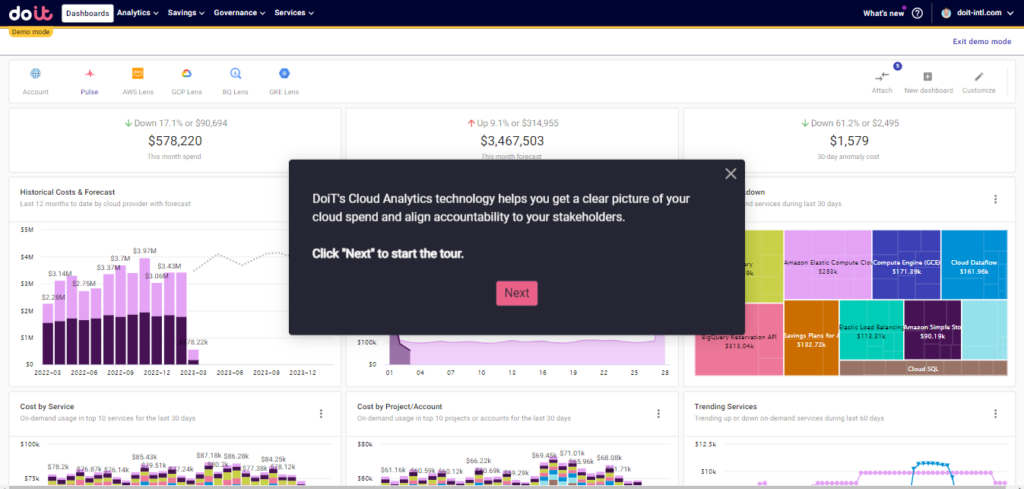

DoiT Cloud Analytics enables this kind of analysis. Its cost allocation groupings let you quickly see what is driving costs by breaking them down by service, SKU, Availability Zone, and more.

To get a better understanding of how these Cloud Analytics features work within DoiT Cloud Navigator, click the image below to take an interactive tour: