Managing secrets natively in Kubernetes is not a secure option. A Kubernetes Secret is just base64-encoded plain text that can be consumed by a running Pod.

Disclaimer:

This tutorial is designed to give you an understanding of each component of the setup, step by step. Nothing is stopping you from using Terraform to create this setup, but going in blindly on such an important setup will lead to issues that are harder to resolve without that deeper understanding.

Sure, you can encrypt a Secret in Kubernetes, but that Secret is only encrypted at rest. Once mounted inside the Pod, it is just a file or an environment variable that can easily be read from within the Pod. In case of a breach, the data could be compromised by anyone who has access to the Pod, or even to the Pod's namespace via kubectl.
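To make the base64 point concrete, here is a minimal sketch showing that base64 is an encoding, not encryption. The secret value is a made-up example, not something from this setup:

```shell
# Hypothetical secret value, for illustration only.
secret_plain='s3cr3t-password'

# Kubernetes stores Secret values base64-encoded in the .data field.
secret_encoded=$(printf '%s' "$secret_plain" | base64)

# No key is needed to reverse it; anyone who can read the Secret can do this.
secret_decoded=$(printf '%s' "$secret_encoded" | base64 --decode)

echo "encoded: $secret_encoded"
echo "decoded: $secret_decoded"
```

The decoded value is identical to the original, which is why read access to a Secret is effectively access to the plain text.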

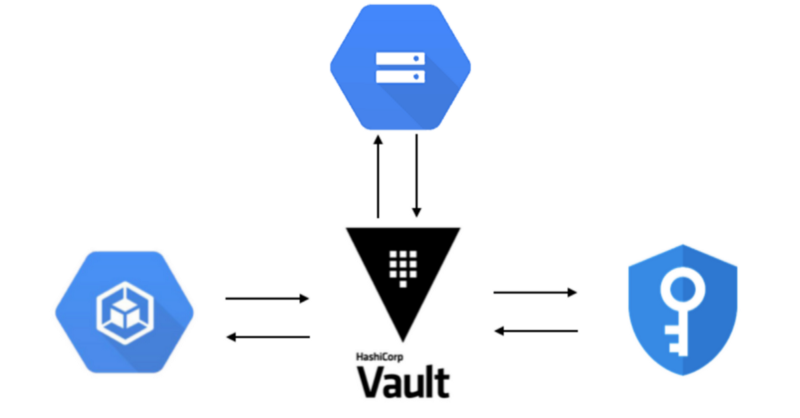

HashiCorp Vault is a secure way to manage secrets, as well as to audit and revoke access to them. It’s one thing to install and use Vault, but another to consume those secrets in a Pod.

This post is about installing Vault on GKE with Terraform and Helm. For consuming these secrets, you can read my other blog post on consuming secrets from Vault transparently in a Pod.

This approach is somewhat easier to manage if you’re only looking for Vault and do not need the advanced Consul features, such as Consul Template with Vault.

In this tutorial, I will go over how to install a highly available Vault using Google Cloud Storage (GCS) as the storage backend, with TLS end to end.

Note:

You should create the Vault setup per environment, to better test upgrades and to separate the environments from each other.

I do not recommend exposing Vault as a service. Should you need to access it, you can do so with the command:

$ kubectl port-forward vault-0 8200:8200

and access the UI via https://127.0.0.1:8200 as detailed below.

If you have VMs that need to access Vault, you should use VPC peering, since Services and Pods use VPC-native IPs. This is not covered here.

Tutorial:

Outline:

- Create TLS certificates for vault

- Create a GCS Bucket for Vault storage backend

- Create KMS keyring and encryption key for Vault auto-unseal.

- Create service accounts for Vault to access KMS and GCS storage backend.

- Install Hashicorp vault official Helm chart via helm tillerless.

Creating TLS certificates for Vault:

One of the Production hardening recommendations is that communications between vault and clients will be encrypted by TLS both for incoming and outgoing traffic.

We are going to create a certificate that will be used for:

- Kubernetes vault service address.

- 127.0.0.1

We will use the CloudFlare SSL toolkit (cfssl and cfssljson) to generate these certificates.

Installation requires a working Go 1.12+ installation and a properly set GOPATH.

Important!: make sure the GOPATH bin is in your path:

export PATH=$GOPATH/bin:$PATH

Installing CloudFlare SSL ToolKit:

go get -u github.com/cloudflare/cfssl/cmd/cfssl

go get -u github.com/cloudflare/cfssl/cmd/cfssljson

Initialize a Certificate Authority (CA):

$ mkdir vault-ca && cd vault-ca

Create the CA files:

CA config file. Certificates signed with this profile expire after one year (8760h):

$ cat <<EOF > ca-config.json
{
  "signing": {
    "default": {
      "expiry": "8760h"
    },
    "profiles": {
      "default": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "8760h"
      }
    }
  }
}
EOF

CA Signing Request:

$ cat <<EOF > ca-csr.json
{
  "hosts": [
    "cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "NewYork",
      "O": "Kubernetes",
      "OU": "CA",
      "ST": "NewYork"
    }
  ]
}
EOF

VAULT Certificate Signing Request to be signed by the CA above:

note: change the namespace for vault if it's not the default namespace

$ cat <<EOF > vault-csr.json
{
  "CN": "Vault-GKE",
  "hosts": [
    "127.0.0.1",
    "vault.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "NewYork",
      "O": "Innovia",
      "OU": "Vault",
      "ST": "NewYork"
    }
  ]
}
EOF

Obviously, you can change the cert info on the bottom under the “names” section to your liking.

Run the following command to initialize the CA using the file you’ve just edited:

$ cfssl gencert -initca ca-csr.json | cfssljson -bare ca
2019/11/12 16:35:01 [INFO] generating a new CA key and certificate from CSR
2019/11/12 16:35:01 [INFO] generate received request
2019/11/12 16:35:01 [INFO] received CSR
2019/11/12 16:35:01 [INFO] generating key: rsa-2048
2019/11/12 16:35:01 [INFO] encoded CSR
2019/11/12 16:35:01 [INFO] signed certificate with serial number 425581644650417483788325060652779897454211028144

Create a private key and sign the TLS certificate:

$ cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=default \
  vault-csr.json | cfssljson -bare vault
2019/11/12 16:36:33 [INFO] generate received request
2019/11/12 16:36:33 [INFO] received CSR
2019/11/12 16:36:33 [INFO] generating key: rsa-2048
2019/11/12 16:36:34 [INFO] encoded CSR
2019/11/12 16:36:34 [INFO] signed certificate with serial number 311973563616303179057952194819087555625015840298

At this point you should have the following files in the current working directory:

ca-key.pem ca.pem vault-key.pem vault.pem

Keep the CA files secure. You will need them to re-sign the certificate when it expires (the CA is valid for 5 years, the Vault certificate for 1 year).
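As a quick sanity check on those lifetimes, the sketch below converts expiry values from hours to days. The 43800h figure is an assumption about cfssl's default CA lifetime (it matches the 5 years mentioned above but is not set anywhere in this tutorial's files), and the openssl call only runs if vault.pem is present in the current directory:

```shell
# Convert a cfssl-style expiry given in hours to whole days.
hours_to_days() {
  echo $(( $1 / 24 ))
}

echo "Vault certificate (8760h): $(hours_to_days 8760) days"
# Assumed cfssl default for a CA created without an explicit expiry.
echo "CA certificate (43800h): $(hours_to_days 43800) days"

# Print the real expiry date of the signed certificate, if it exists here.
if [ -f vault.pem ]; then
  openssl x509 -in vault.pem -noout -enddate
fi
```

Putting the printed end date in a calendar reminder is a cheap way to avoid a surprise outage when the Vault certificate expires.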

Create a secret for the Vault TLS and CA.pem

kubectl create secret generic vault-tls \
  --from-file=ca.pem \
  --from-file=vault.pem \
  --from-file=vault-key.pem

Set GCP Project for rest of this tutorial:

$ export GCP_PROJECT=<your_project_id>

Enabling GCP APIs required by this tutorial:

$ gcloud services enable \
  cloudapis.googleapis.com \
  cloudkms.googleapis.com \
  container.googleapis.com \
  containerregistry.googleapis.com \
  iam.googleapis.com \
  --project ${GCP_PROJECT}
Operation "operations/acf.8e126724-bbde-4c0d-b516-5dca5b8443ee" finished successfully.

Vault Storage Backend

When running in HA mode, Vault servers have two additional states: standby and active. Within a Vault cluster, only a single instance is active and handles all requests (reads and writes); all standby nodes redirect requests to the active node.
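One way to see which state a given server is in is Vault's health endpoint, which reports state through the HTTP status code. The sketch below maps the default status codes to a state name. The function name is my own, and the curl call assumes VAULT_ADDR and VAULT_CACERT are already exported (they are set later in this tutorial), so it is skipped otherwise:

```shell
# Map the default /v1/sys/health status codes to a readable state.
vault_state_from_code() {
  case "$1" in
    200) echo "active" ;;
    429) echo "standby" ;;
    501) echo "not initialized" ;;
    503) echo "sealed" ;;
    *)   echo "unknown ($1)" ;;
  esac
}

# Only query Vault when the connection details are available.
if [ -n "${VAULT_ADDR:-}" ] && [ -n "${VAULT_CACERT:-}" ]; then
  code=$(curl -s -o /dev/null -w '%{http_code}' \
    --cacert "$VAULT_CACERT" "$VAULT_ADDR/v1/sys/health") || true
  echo "server state: $(vault_state_from_code "$code")"
fi
```

With a port-forward to a single pod, this tells you whether that particular pod is the active node or a standby.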

Let’s create the bucket on GCS using the gsutil command. Bucket names must be globally unique across all of Google Cloud, so choose a unique name:

$ export GCS_BUCKET_NAME=mycompany-vault-data

$ gsutil mb gs://$GCS_BUCKET_NAME

$ gsutil versioning set on gs://$GCS_BUCKET_NAME

Even though the data is encrypted in transit and at rest, be sure to set the appropriate permissions on the bucket to limit exposure. You may want to create a service account that limits Vault’s interactions with Google Cloud to objects in the storage bucket using IAM permissions.

Vault Auto unseal

When Vault restarts, it starts up sealed, with its data encrypted. To use it, you must unseal it. The auto-unseal feature delegates this to Cloud KMS: Vault uses a KMS key to decrypt its master key automatically at startup.

Create KMS Keyring and Crypto Key:

In this section we will create the KMS keyring and key that Vault uses to encrypt and decrypt its master key:

Create the vault-helm-unseal-kr kms keyring:

$ gcloud kms keyrings create vault-helm-unseal-kr \
  --location global \
  --project ${GCP_PROJECT}

Create the encryption key:

$ gcloud kms keys create vault-helm-unseal-key \
  --location global \
  --keyring vault-helm-unseal-kr \
  --purpose encryption \
  --project ${GCP_PROJECT}

Create the GCP service accounts and IAM permissions for vault

Setup variables:

$ export VAULT_SA_NAME=vault-server; export VAULT_SA=$VAULT_SA_NAME@$GCP_PROJECT.iam.gserviceaccount.com

Create the vault server service account:

$ gcloud iam service-accounts create $VAULT_SA_NAME \
  --display-name "Vault server service account" \
  --project ${GCP_PROJECT}

Create the Vault server service account key (credentials JSON file):

$ gcloud iam service-accounts keys create \
  --iam-account $VAULT_SA /tmp/vault_gcs_key.json
created key [be22cfe6e30f3a3fcfc6ebaa23ca3ba905dd60ab] of type [json] as [/tmp/vault_gcs_key.json] for [vault-server@<your_project_id>.iam.gserviceaccount.com]

Create the secret to store the vault google service account in

$ kubectl create secret generic vault-gcs \
  --from-file=/tmp/vault_gcs_key.json
secret/vault-gcs created

Grant access to vault storage GCS Bucket:

$ gsutil iam ch \
  serviceAccount:${VAULT_SA}:objectAdmin \
  gs://${GCS_BUCKET_NAME}

Grant access to the vault kms key:

$ gcloud kms keys add-iam-policy-binding \
  vault-helm-unseal-key \
  --location global \
  --keyring vault-helm-unseal-kr \
  --member serviceAccount:${VAULT_SA} \
  --role roles/cloudkms.cryptoKeyEncrypterDecrypter \
  --project ${GCP_PROJECT}
Updated IAM policy for key [vault-helm-unseal-key].
bindings:
- members:
  - serviceAccount:vault-server@<your_project_id>.iam.gserviceaccount.com
  role: roles/cloudkms.cryptoKeyEncrypterDecrypter
etag: BwWZ6sIYovk=
version: 1

Note: if for some reason you have deleted the service account and recreated it, you must delete the IAM policy on the key. Otherwise, skip to the “Get the Vault official Hashicorp chart” section.

$ gcloud kms keys get-iam-policy vault-helm-unseal-key --location global --keyring vault-helm-unseal-kr > kms-policy.yaml

Edit the policy file and remove the members under bindings, then save the file:

bindings:
etag: BwWXQz4HjuI=
version: 1

Re-apply the policy:

$ gcloud kms keys set-iam-policy vault-helm-unseal-key --location global --keyring vault-helm-unseal-kr kms-policy.yaml

Get the Vault official Hashicorp chart:

note:

Starting from version 0.3.0, there’s a Kubernetes Vault integration that automatically injects secrets into a Pod by rendering the secret as a file on a volume. I highly recommend using my vault secrets webhook instead, because it is a more secure way of injecting a secret into a Pod and it automates the consumption of the secrets.

export CHART_VERSION=0.3.0

Get the chart and unpack it:

$ wget https://github.com/hashicorp/vault-helm/archive/v$CHART_VERSION.tar.gz && tar zxf v$CHART_VERSION.tar.gz && rm v$CHART_VERSION.tar.gz

Setting the values.yaml for the chart:

The following gist has placeholders for variable substitution:

global:
  tlsDisable: false
server:
  # resources:
  #   requests:
  #     memory: 256Mi
  #     cpu: 250m
  #   limits:
  #     memory: 256Mi
  #     cpu: 250m
  extraEnvironmentVars:
    GOOGLE_APPLICATION_CREDENTIALS: /vault/userconfig/vault-gcs/vault_gcs_key.json
  extraVolumes:
    - type: secret
      name: vault-gcs
      path: "/vault/userconfig"
    - type: secret
      name: vault-tls
      path: "/etc/tls"
  authDelegator:
    enabled: true
  ha:
    enabled: true
    # This should be HCL.
    config: |
      ui = true
      listener "tcp" {
        tls_disable = 0
        tls_cert_file = "/etc/tls/vault-tls/vault.pem"
        tls_key_file = "/etc/tls/vault-tls/vault-key.pem"
        tls_client_ca_file = "/etc/tls/vault-tls/ca.pem"
        tls_min_version = "tls12"
        address = "[::]:8200"
        cluster_address = "[::]:8201"
      }
      storage "gcs" {
        bucket = "GCS_BUCKET_NAME"
        ha_enabled = "true"
      }
      # Example configuration for using auto-unseal, using Google Cloud KMS. The
      # GKMS keys must already exist, and the cluster must have a service account
      # that is authorized to access GCP KMS.
      seal "gcpckms" {
        project = "GCP_PROJECT"
        region = "global"
        key_ring = "vault-helm-unseal-kr"
        crypto_key = "vault-helm-unseal-key"
      }

# Exposing VAULT UI to a GCP loadbalancer WITH IAP Backend config
# 1. create the backend config https://cloud.google.com/iap/docs/enabling-kubernetes-howto
# 2. create a Google Managed Certificate https://cloud.google.com/load-balancing/docs/ssl-certificates
# 3. create static global ip "gcloud compute addresses create vault-ui --global"
#    and set the loadBalancerIP below
# 4. create a DNS entry for that IP - and update the host in the ingress section below
# 5. uncomment the section below
# 6. install
# -------------------------------------
# readinessProbe:
#   enabled: true
#   path: /v1/sys/health?standbyok=true
# ui:
#   enabled: true
#   serviceType: "NodePort"
#   externalPort: 443
#   loadBalancerIP: "LOAD_BALANCER_IP"
# service:
#   clusterIP: {}
#   type: NodePort
#   annotations:
#     cloud.google.com/app-protocols: '{"http":"HTTPS"}'
#     beta.cloud.google.com/backend-config: '{"ports": {"http":"config-default"}}'
# ingress:
#   enabled: true
#   labels: {}
#   # traffic: external
#   annotations:
#     # must be global static ip not regional!
#     kubernetes.io/ingress.global-static-ip-name: "vault-ui"
#     # the controller will only create rules for port 443 based on the TLS section.
#     kubernetes.io/ingress.allow-http: "false"
#     # represents the specific pre-shared SSL certificate for the Ingress controller to use.
#     networking.gke.io/managed-certificates: "vault-ui-certificate"
#     # kubernetes.io/tls-acme: "true"
#   hosts:
#     - host: vault.domain.com
#       paths:
#         - /*

Use the command below to create a new values file called vault-gke-values.yaml:

$ curl -s https://gist.githubusercontent.com/innovia/53c05bf69312706fc93ffe3bb685b223/raw/adc169605984da8ba82082191c8f631579b1b199/vault-gke-values.yaml | sed "s/GCP_PROJECT/$GCP_PROJECT/g" | sed "s/GCS_BUCKET_NAME/$GCS_BUCKET_NAME/g" > vault-helm-$CHART_VERSION/vault-gke-values.yaml

Inspect the created file to make sure you have the correct project and GCS bucket.

$ cat vault-helm-$CHART_VERSION/vault-gke-values.yaml | grep -E 'bucket|project'
bucket = "<COMPANY>-vault-data"
project = "ami-playground"
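The sed substitution used to render the values file can also be tried in isolation. This is a minimal sketch on a two-line stand-in for the values file; the function name and the sample project and bucket values are placeholders of my own:

```shell
# Replace the two placeholders read from stdin with the given values.
render_values() {
  sed -e "s/GCP_PROJECT/$1/g" -e "s/GCS_BUCKET_NAME/$2/g"
}

# Two-line stand-in for the relevant parts of vault-gke-values.yaml.
template='bucket = "GCS_BUCKET_NAME"
project = "GCP_PROJECT"'

rendered=$(printf '%s\n' "$template" | render_values my-project mycompany-vault-data)
echo "$rendered"

# Warn if a placeholder survived the substitution.
if printf '%s\n' "$rendered" | grep -qE 'GCP_PROJECT|GCS_BUCKET_NAME'; then
  echo "unreplaced placeholder found" >&2
fi
```

Note that this style of substitution breaks if a value contains a slash, since the slash is the sed delimiter here. Project IDs and bucket names do not contain one, so it is fine for this use.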

Using helm 2.x:

If you don’t have Tiller installed on the cluster, you can skip the Tiller setup by installing a tillerless plugin for Helm, which brings up a local Tiller on your computer and points Helm at it. Otherwise, skip to the “Install the Vault chart” section below.

Install the tillerless helm plugin:

If you don't have Helm already, install it via:

$ brew install helm@2

Initialize Helm in client-only mode so that the Tiller server is not installed:

helm init --client-only

Install the helm-tillerless plugin:

helm plugin install https://github.com/rimusz/helm-tiller

Start Tiller via Helm:

$ helm tiller start
Installed Helm version v2.16.1
Copied found /usr/local/bin/tiller to helm-tiller/bin
Helm and Tiller are the same version!
Starting Tiller...
Tiller namespace: kube-system

Using helm 3

$ brew install helm

Install the Vault chart:

note: if you are using helm 3 the output will not list the resources.

$ helm upgrade --install vault -f vault-helm-$CHART_VERSION/vault-gke-values.yaml vault-helm-$CHART_VERSION
release "vault" does not exist. Installing it now.
NAME: vault
LAST DEPLOYED: Wed Nov 13 15:41:55 2019
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
==> v1/ConfigMap
NAME AGE
vault-config 0s

==> v1/Service
NAME AGE
vault 0s

==> v1/ServiceAccount
NAME AGE
vault 0s

==> v1/StatefulSet
NAME AGE
vault 0s

==> v1beta1/ClusterRoleBinding
NAME AGE
vault-server-binding 0s

==> v1beta1/PodDisruptionBudget
NAME AGE
vault 0s

NOTES:
Thank you for installing HashiCorp Vault!
Now that you have deployed Vault, you should look over the docs on using Vault with Kubernetes available here:
https://www.vaultproject.io/docs/
Your release is named vault. To learn more about the release, try:
$ helm status vault
$ helm get vault

Vault should start running and be in an uninitialized state.

The following warnings are OK, since Vault is not initialized yet:

=> Vault server started! Log data will stream in below:
2019-12-17T19:07:37.937Z [INFO] proxy environment: http_proxy= https_proxy= no_proxy=
2019-12-17T19:07:38.909Z [INFO] core: stored unseal keys supported, attempting fetch
2019-12-17T19:07:39.037Z [WARN] failed to unseal core: error="stored unseal keys are supported, but none were found"
2019-12-17T19:07:44.038Z [INFO] core: stored unseal keys supported, attempting fetch
2019-12-17T19:07:44.080Z [INFO] core: autoseal: seal configuration missing, but cannot check old path as core is sealed: seal_type=recovery
2019-12-17T19:07:44.174Z [WARN] failed to unseal core: error="stored unseal keys are supported, but none were found"
---
kubectl describe pod vault-0
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
...
Warning Unhealthy 3s (x9 over 27s) kubelet, minikube Readiness probe failed: Key Value

Initialize vault with KMS auto unseal

open up a port-forward to Vault using the command:

$ kubectl port-forward vault-0 8200:8200 > /dev/null & export PID=$!; echo "vault port-forward pid: $PID"

Connect to Vault using the CA.pem cert

$ export VAULT_ADDR=https://127.0.0.1:8200; export VAULT_CACERT=$PWD/ca.pem

My Vault ca.pem, for example, is at:

VAULT_CACERT: /Users/ami/vault-gke-medium/ca.pem

Install vault client (make sure your client is the same version as the server)

$ brew install vault

Check the status:

$ vault status
Key                      Value
---                      -----
Recovery Seal Type       gcpckms
Initialized              false
Sealed                   true
Total Recovery Shares    0
Threshold                0
Unseal Progress          0/0
Unseal Nonce             n/a
Version                  n/a
HA Enabled               true

Now initialize Vault:

$ vault operator init
Recovery Key 1: 33nCanHWgYMR/VPj6bNQdHXJiayL6WeB8Ourx4kHYNaX
Recovery Key 2: IMf7RjptFxtGQUbEWUWehanCBiSY7VhElkM7rRVxczGc
Recovery Key 3: zGuzk/PhNet9OHL4cW2H7d3XypDxfwWXkmajclLPklK4
Recovery Key 4: nCFS0dt0cNGB2LWk0F+3Vmz9TbVNpeIsXbIXDbRarlnT
Recovery Key 5: 9GxXr/6T8OJWJrWqyHQxayR0BAK+WTdbT870AzKEFl2V
Initial Root Token: s.1ukhSgycySjZUJRD0bZjSEit
Success! Vault is initialized
Recovery key initialized with 5 key shares and a key threshold of 3. Please securely distribute the key shares printed above.

Keep these keys safe.

Trusting the self-signed certificate authority:

Since we created ca.pem ourselves, it is not trusted by default: it is not part of the CA bundle that comes with your computer.

We can add trust by following the instructions below for your operating system.

Mac OS:

Setting “always trust” for the CA will allow you to open Vault UI in the browser without any errors:

$ sudo security add-trusted-cert -d -k /Library/Keychains/System.keychain $VAULT_CACERT

Windows 10:

Follow the instructions here to add the cert to the trusted publishers:

Setting up Kubernetes backend authentication with Vault

Now that Vault is up and highly available we can move forward and connect Vault with Kubernetes.

We will use a service account to do the initial login of Vault to Kubernetes. This service account's token will be configured inside Vault using the Vault CLI.

This service account has a special permission called “system:auth-delegator” that allows Vault to pass the Pod's service account token to Kubernetes for authentication. Once authenticated, Vault returns a Vault login token to the client.

The client then uses that login token to talk to Vault and get the secrets it needs.

Vault checks a mapping between a Vault role, service account, namespace, and policy to allow or deny the access.
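From the client's side, the login step is a single request to the Kubernetes auth method's login endpoint. The sketch below builds the request body and, only when it runs inside a Pod with VAULT_ADDR and VAULT_CACERT set, sends it with curl. The function name and the role name demo-role are hypothetical:

```shell
# Build the JSON body for POST /v1/auth/kubernetes/login.
k8s_login_payload() {
  printf '{"role": "%s", "jwt": "%s"}' "$1" "$2"
}

# Default location of the service account token inside a Pod.
TOKEN_FILE=/var/run/secrets/kubernetes.io/serviceaccount/token

# Only attempt the login when running in a Pod with Vault details set.
if [ -f "$TOKEN_FILE" ] && [ -n "${VAULT_ADDR:-}" ] && [ -n "${VAULT_CACERT:-}" ]; then
  curl -s --cacert "$VAULT_CACERT" \
    --request POST \
    --data "$(k8s_login_payload demo-role "$(cat "$TOKEN_FILE")")" \
    "$VAULT_ADDR/v1/auth/kubernetes/login" || true
fi
```

If the role, service account, and namespace match, the response carries the login token under auth.client_token; otherwise Vault rejects the request.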

Let’s create that service account, vault-reviewer.

Please note: if you have set up Vault in any other namespace, make sure to update this file accordingly.

kubectl apply -f vault-reviewer.yaml
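The contents of vault-reviewer.yaml are not reproduced in this post. A minimal sketch of what such a manifest could look like, assuming the default namespace; the binding name role-tokenreview-binding is a placeholder of my own, and the file is written under a separate name so it does not overwrite yours:

```shell
# Write a sketch of the reviewer manifest (assumes the default namespace).
cat > vault-reviewer-example.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: vault-reviewer
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: role-tokenreview-binding   # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
  - kind: ServiceAccount
    name: vault-reviewer
    namespace: default
EOF

echo "wrote vault-reviewer-example.yaml"
```

The important part is the binding of the vault-reviewer service account to the system:auth-delegator cluster role; everything else is naming.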

enable the Kubernetes auth backend:

# Make sure you are logged in to vault using the root token
$ vault login
$ vault auth enable kubernetes
Success! Enabled kubernetes auth method at: kubernetes/

Configure Vault with the vault-reviewer token and ca:

note: if you setup vault on any other namespace set the -n <namespace> flag after each kubectl command

$ VAULT_SA_TOKEN_NAME=$(kubectl get sa vault-reviewer -o jsonpath="{.secrets[*]['name']}")

$ SA_JWT_TOKEN=$(kubectl get secret "$VAULT_SA_TOKEN_NAME" -o jsonpath="{.data.token}" | base64 --decode; echo)

$ SA_CA_CRT=$(kubectl get secret "$VAULT_SA_TOKEN_NAME" -o jsonpath="{.data['ca\.crt']}" | base64 --decode; echo)

$ vault write auth/kubernetes/config token_reviewer_jwt="$SA_JWT_TOKEN" kubernetes_host=https://kubernetes.default kubernetes_ca_cert="$SA_CA_CRT"

Success! Data written to: auth/kubernetes/config

Basic requirements for a pod to access a secret:

- the Pod must have a service account

- the Vault CA.pem secret must exist on the namespace that the Pod is running on

- a policy granting at least read access to the secret must exist:

path "secret/foo" {
  capabilities = ["read"]
}

- a Vault role must be created in Vault:

vault write auth/kubernetes/role/<role_name> \
  bound_service_account_names=<service_account_name> \
  bound_service_account_namespaces=<service_account_namespace> \
  policies=<policy_name>
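To tie the last two requirements together, here is a sketch that writes the read policy to a file and, when the vault CLI is installed and VAULT_ADDR is set, loads it under a name that the role can then reference in policies=. The policy name foo-read and the file name are placeholders of my own:

```shell
POLICY_NAME=foo-read   # hypothetical policy name

# Same read-only policy as shown in the requirements list.
cat > "$POLICY_NAME.hcl" <<'EOF'
path "secret/foo" {
  capabilities = ["read"]
}
EOF

# Load the policy only if the CLI and the Vault address are available.
if command -v vault >/dev/null 2>&1 && [ -n "${VAULT_ADDR:-}" ]; then
  vault policy write "$POLICY_NAME" "$POLICY_NAME.hcl" \
    || echo "could not write policy (are you logged in?)" >&2
fi
```

With the policy loaded, policies=foo-read in the role command grants the bound service account read access to secret/foo and nothing else.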

This concludes the setup for HashiCorp Vault on GKE. I highly recommend setting up vault secrets webhooks to seamlessly consume secrets from Vault based only on a few annotations.

How to setup Vault UI with Identity-Aware Proxy (IAP) via a load-balancer

Identity-aware proxy is a way to authenticate a user without the need to set up a VPN or SSH Bastion.

If you want to set up a load balancer for the service with identity-aware proxy you can do that by following the steps below, otherwise, you can access vault UI via kubectl port-forward vault-0 8200

The following process does not bind a Google user to Vault by any means; it serves as multi-factor authentication only. There is a way to use JWT for Vault authentication, but that allows any user from your domain to choose a role, which is less secure.

Note:

You still need the self-signed certificate for the Vault service itself. The load balancer certificate is required to enable IAP and HTTPS.

Prerequisites:

- A certificate for the load balancer must be created via a Google-managed certificate, or created as a Kubernetes secret.

https://cloud.google.com/load-balancing/docs/ssl-certificates

- The domain must be verified via Google webmaster tools

- A global static IP must be created and a DNS entry needs to be created

(if you are using externalDNS service then you don’t need that)

$ gcloud compute addresses create vault-ui --global

You can create a certificate using the following YAML

$ kubectl apply -f managed-cert.yaml
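The managed-cert.yaml file is not reproduced in this post. A minimal sketch, assuming the certificate name vault-ui-certificate and the networking.gke.io/v1beta1 API version that appear in the describe output further down, with vault.domain.com standing in for your own host. It writes to a separate example file:

```shell
# Write a sketch of the managed certificate manifest.
cat > managed-cert-example.yaml <<'EOF'
apiVersion: networking.gke.io/v1beta1
kind: ManagedCertificate
metadata:
  name: vault-ui-certificate
spec:
  domains:
    - vault.domain.com   # placeholder, use your own DNS name
EOF

echo "wrote managed-cert-example.yaml"
```

The name has to match the networking.gke.io/managed-certificates annotation in the ingress section of the values file.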

Once created, give it 15-20 minutes to change the status from Provisioning to Active.

Check the status of the cert:

$ kubectl describe ManagedCertificate vault-ui-certificate
Name:         vault-ui-certificate
Namespace:    default
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"networking.gke.io/v1beta1","kind":"ManagedCertificate","metadata":{"annotations":{},"name":"vault-ui-certificate","namespac...
API Version:  networking.gke.io/v1beta1
Kind:         ManagedCertificate
Metadata:
  Creation Timestamp:  2020-01-13T23:10:28Z
  Generation:          3
  Resource Version:    7120865
  Self Link:           /apis/networking.gke.io/v1beta1/namespaces/default/managedcertificates/vault-ui-certificate
  UID:                 e35e7a1b-3659-11ea-ae90-42010aa80174
Spec:
  Domains:
    vault.ami-playground.doit-intl.com
Status:
  Certificate Name:    mcrt-9462e1f4-6dd6-4cf2-8769-9693ba29789e
  Certificate Status:  Active
  Domain Status:
    Domain:  vault.ami-playground.doit-intl.com
    Status:  Active
  Expire Time:  2020-04-12T15:12:29.000-07:00
Events:  <none>

Configuring IAP for GKE:

You may choose to follow the complete instructions instead of the steps summarized below:

Configure IAP for your domain via the OAuth consent screen and create the client credentials.

Once you have created the client, copy the client ID and add it to the authorized redirect URIs field in the following format:

https://iap.googleapis.com/v1/oauth/clientIds/<CLIENT_ID>:handleRedirect
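To avoid typos when filling in that format, a small helper can build the URI from the client ID. This is only a convenience sketch; the function name and the sample client ID are made up:

```shell
# Build the authorized redirect URI for a given OAuth client ID.
iap_redirect_uri() {
  printf 'https://iap.googleapis.com/v1/oauth/clientIds/%s:handleRedirect\n' "$1"
}

# Hypothetical client ID, for illustration only.
iap_redirect_uri "1234567890-example.apps.googleusercontent.com"
```

Paste the printed value into the authorized redirect URIs field of the OAuth client.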

Create the secret that will be used by the backend config:

kubectl create secret generic my-secret --from-literal=client_id=client_id_key \
  --from-literal=client_secret=client_secret_key

Create a backend config for the IAP:
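The backend config manifest is not reproduced in this post. A minimal sketch, assuming the name config-default referenced by the backend-config annotation in the values file and the my-secret secret created in the previous step. The cloud.google.com/v1beta1 API version is an assumption that matches the beta annotation, so check it against your cluster:

```shell
# Write a sketch of the IAP backend config (assumes the default namespace).
cat > backend-config-example.yaml <<'EOF'
apiVersion: cloud.google.com/v1beta1   # assumption; check your cluster version
kind: BackendConfig
metadata:
  name: config-default
  namespace: default
spec:
  iap:
    enabled: true
    oauthclientCredentials:
      secretName: my-secret
EOF

echo "wrote backend-config-example.yaml"
```

Apply it with kubectl apply -f before installing the chart, so the annotated Vault service can find it.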

Enable the section at the bottom of the vault-gke-values.yaml file, and make sure the values for the global static IP and the DNS name for the host are updated.

Note:

You must delete the Vault installation and recreate it with Helm, since GKE ingress has issues updating existing ingresses.

To summarize the values YAML file:

- we are enabling the vault UI service on port 443 and exposing it via a NodePort

- we are setting up the vault service with the IAP via a backend config

- we are enabling the ingress with a static global IP and the DNS as the host that is mapped to it

- we are disabling HTTP on the load balancer

- we are configuring the communication between the load balancer and the vault pods to be https only

- we are setting up the managed certificate for the load balancer so that the load balancer will be an HTTPS listener

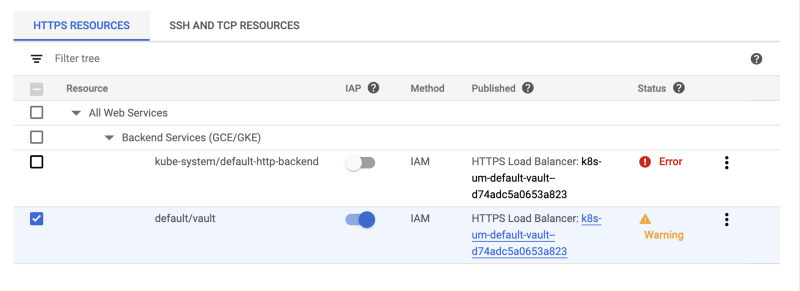

Once deployed, if you check the IAP page you will see the following errors and warnings. (You might see both backend services with an ERROR if you are using shared VPC networking. The real test is to open the Vault UI URL in the browser.)

The first error is for the default backend (the one that serves 404’s), the error is simply an indication that IAP won’t be active for any 404 page, which is the intended behavior.

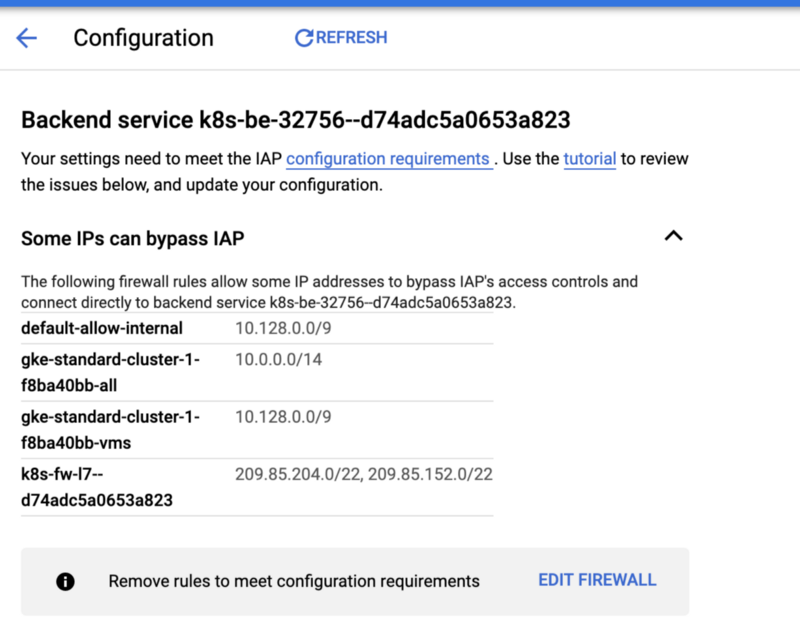

The other is just a warning, if you click on the warning you will see something like this:

All it means is that GCP detected that some firewall rules will bypass the IAP such as internal networks, and the load balancer talking to vault backend.

Select default/vault on the IAP page and, from the info panel on the left, add the members that need access to the Vault UI via the load balancer.

Add each member with the “IAP-secured Web App User” permission to allow that user to access the Vault UI.