A popular Heroku feature is Review Apps. It spins up a disposable environment and instance of an app for each pull request (PR) and destroys that temporary environment once the PR is merged.

Inspired by this concept and a recent DoiT International customer meeting, I set out to recreate the behavior atop Google Cloud Platform (GCP) using a cloud native approach and popular open source tools.

Developer experience (20 minute demo)

By adhering to GitOps principles, your development team only has to worry about writing code. When they create a PR, a ready-to-review version of their latest code is accessible online within seconds or minutes.

Subsequent commits will update the review app until reviewers/testers are satisfied and approve/merge the PR. Once the app is pushed to a higher environment, the review app is destroyed — rinse and repeat per feature.

Components involved

- Github — source control

- Kustomize — Kubernetes-native config management

- Argo CD — declarative GitOps CD for Kubernetes

- Kong Ingress Controller — API gateway

- Google Kubernetes Engine (GKE) — managed Kubernetes

- Google Cloud Build — CI server

- Google Container Registry (GCR) — private container image registry

- Google Secret Manager — centralized secrets management

- Google Cloud DNS — API-enabled DNS service (optional)

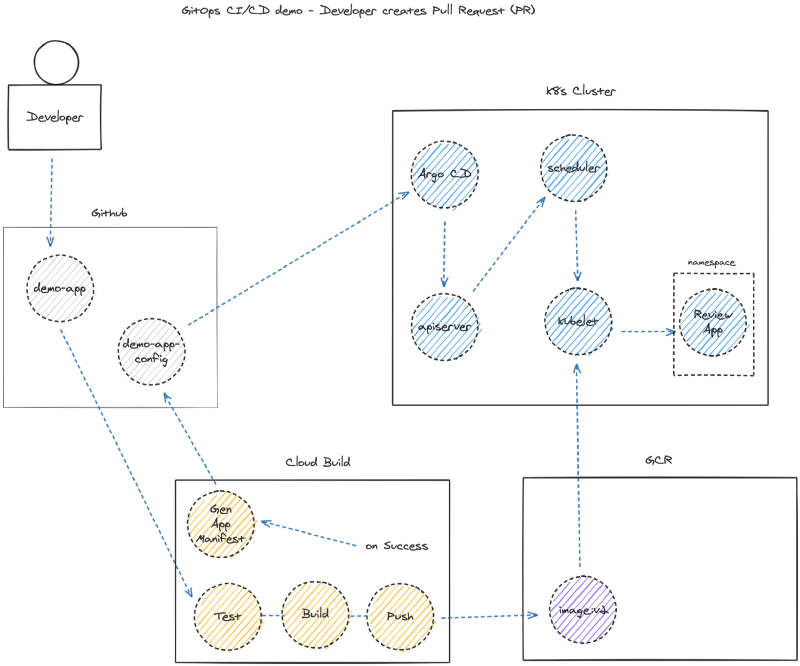

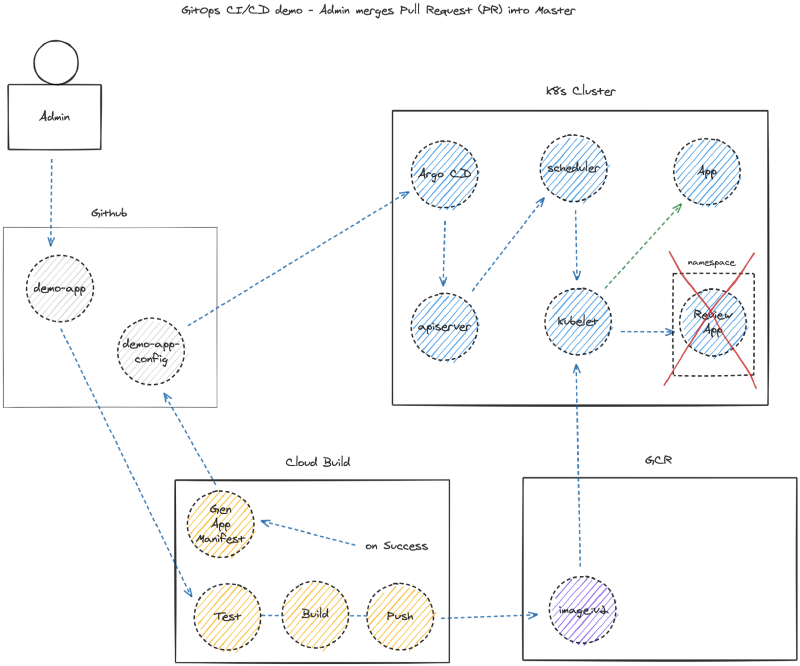

Architecture

The primary function of review apps is that when a developer creates a pull request (PR) in source control, the deployment pipeline builds a copy of the app and hosts it in an isolated environment with a unique URL for others to review.

Additional functionality includes promoting the application to production after the PR is merged and removing the review app.

TL;DR

Making everything work as shown requires the following steps:

- Create separate App and Config source repos on Github

- Enable Google APIs and bootstrap GKE cluster and Argo CD

- Install Kong API Gateway (ingress controller) via Config repo

- Create DNS A record to kong-proxy external IP

- Connect Cloud Build to your Github repository

- Create private key and store in Google Secret Manager

- Add pub key to Github Config repo

- Create 3 Cloud Build triggers (push, PR, merge)

- Authorize Cloud Build service account to access Secrets

- Add Cloud Build config files to App repo

- Add Kubernetes manifests and Kustomize overlays to Config repo

Create Github source repositories

The following repositories are working examples, but I’ll cover the steps to build them in the sections that follow.

- Demo App — Dockerfile and Cloud Build CI scripts

- Demo App Config — setup scripts and Kubernetes manifests for Argo CD

Enable APIs, bootstrap GKE and Argo CD

The following script enables the Google Cloud service APIs and spins up a Kubernetes cluster.
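The original script is not reproduced here, so what follows is only a minimal sketch of what such a bootstrap might look like. The project ID, cluster name, zone, and node count are placeholder assumptions, and the exact list of APIs is inferred from the components listed earlier.

```shell
# Sketch only: PROJECT_ID, CLUSTER_NAME and ZONE are placeholder values.
PROJECT_ID="${PROJECT_ID:-my-project}"
CLUSTER_NAME="${CLUSTER_NAME:-review-apps}"
ZONE="${ZONE:-us-central1-a}"

bootstrap_cluster() {
  # Enable the Google Cloud APIs used by the components in this post.
  gcloud services enable \
    container.googleapis.com \
    cloudbuild.googleapis.com \
    containerregistry.googleapis.com \
    secretmanager.googleapis.com \
    dns.googleapis.com \
    --project "$PROJECT_ID"

  # Create a GKE cluster and fetch kubectl credentials for it.
  gcloud container clusters create "$CLUSTER_NAME" \
    --project "$PROJECT_ID" --zone "$ZONE" --num-nodes 3
  gcloud container clusters get-credentials "$CLUSTER_NAME" \
    --project "$PROJECT_ID" --zone "$ZONE"

  # Install Argo CD from its upstream install manifest.
  kubectl create namespace argocd
  kubectl apply -n argocd \
    -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
}
```

Calling `bootstrap_cluster` runs the steps in order. Wrapping them in a function keeps the sketch safe to source without touching a real project.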

In a previous article, I introduced the concept of the app of apps pattern used even by the Argo dev team at Intuit. In designing this solution, the dynamic creation and removal of an “Application” works perfectly for our use case of creating and destroying the review apps.

Install Kong API Gateway (ingress controller)

For Kong, simply add a kong.yaml file to the /apps folder in the Config repository. Argo CD will detect and monitor any repo or folder you point it to. I chose Kong because every Ingress I create configures a host, which makes it ideal for dynamically generating new review app host URLs.

Add the Kong config files in that target folder and it will be installed on your Kubernetes cluster (repeat for any desired apps).
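As a rough illustration, an Argo CD Application for Kong might look like the manifest written below. This is a sketch, not the file from the example repo: the repoURL is a placeholder, and the sync policy values are assumptions chosen to match the automated sync and prune behavior described later in this post.

```shell
# Sketch only: the repoURL below is a placeholder, not the author's repository.
CONFIG_DIR="${CONFIG_DIR:-$(mktemp -d)}"
mkdir -p "$CONFIG_DIR/apps"

cat > "$CONFIG_DIR/apps/kong.yaml" <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: kong
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/demo-app-config.git  # placeholder
    targetRevision: HEAD
    path: kong          # folder holding the Kong manifests
  destination:
    server: https://kubernetes.default.svc
    namespace: kong
  syncPolicy:
    automated:
      prune: true       # remove resources that disappear from Git
      selfHeal: true
EOF

echo "Wrote $CONFIG_DIR/apps/kong.yaml"
```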

Note the source.path (kong). That is the folder in the Config repo containing the YAML manifests Argo CD will install. I created a file there called 01-install.yaml from Kong’s own single manifest (https://bit.ly/k4k8s points to the latest Kong config).

curl -L https://bit.ly/k4k8s --output kong/01-install.yaml

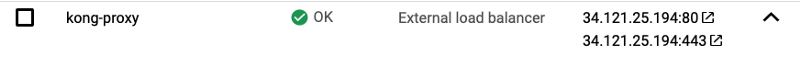

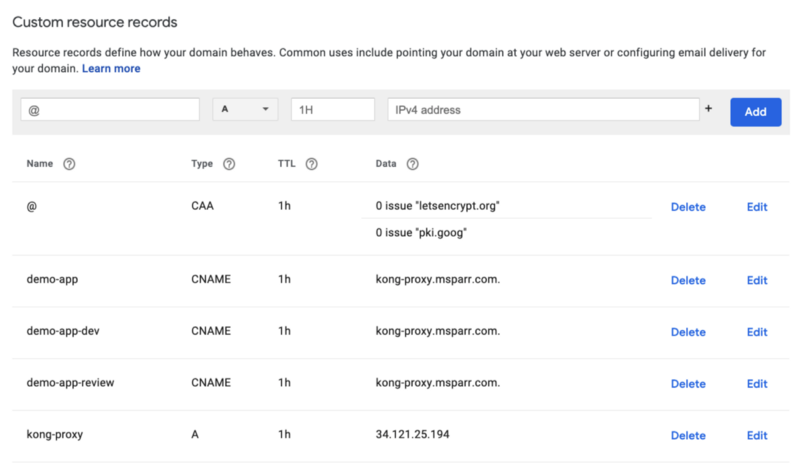

Create DNS A record for Kong Proxy

After Kong installs on your cluster, it creates an external TCP load balancer (Service) with an external IP.

Point your domain to this IP; you can do so manually through your DNS provider. For my initial demo I just configured the A record and some CNAME aliases like below.

An alternative approach is to use Google Cloud DNS to manage your domain. I would have to point my domain to Google’s nameservers, and could then dynamically create review app URLs based on the PR number (ideal). After the GKE and Kong bootstrapping, you can use the following commands to set it up.
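The commands themselves did not survive in this copy of the post, so here is a hedged sketch of one way to do it. The zone name and domain are placeholders, and it assumes the Service is named kong-proxy in the kong namespace, as Kong's single manifest sets it up.

```shell
# Sketch only: ZONE_NAME and DOMAIN are placeholders.
ZONE_NAME="${ZONE_NAME:-review-zone}"
DOMAIN="${DOMAIN:-example.com}"

# One-time setup: create a managed zone for the domain.
create_zone() {
  gcloud dns managed-zones create "$ZONE_NAME" \
    --dns-name "$DOMAIN." \
    --description "Review app DNS zone"
}

# Look up the external IP that the kong-proxy Service received.
kong_proxy_ip() {
  kubectl get service kong-proxy --namespace kong \
    --output jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Point a hostname (for example pr-42.example.com) at the given IP.
create_a_record() {
  host="$1"; ip="$2"
  gcloud dns record-sets create "$host.$DOMAIN." \
    --zone "$ZONE_NAME" --type A --ttl 300 --rrdatas "$ip"
}
```

A typical call would be `create_a_record pr-42 "$(kong_proxy_ip)"`, run after the load balancer has been assigned its IP.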

Connect Cloud Build to Github

Normally I despise articles that redirect you to other articles, but for brevity’s sake I will make an exception: the two steps of connecting Cloud Build to Github and adding an SSH key to Secret Manager are nicely described in the following two articles from Google:

- Connect Cloud Build to Github

- Add key and secret to access private repos — note you want to enable “commit” checkbox on the Config repo so your App build pipeline can then commit changes to the Docker image tag version.
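For orientation, the key and secret steps from the second article boil down to something like the sketch below. The secret name, key file name, and project number are placeholders, and the service account address assumes the default Cloud Build service account format.

```shell
# Sketch only: SECRET_NAME, KEY_FILE and PROJECT_NUMBER are placeholders.
SECRET_NAME="${SECRET_NAME:-github-deploy-key}"
KEY_FILE="${KEY_FILE:-id_github}"
PROJECT_NUMBER="${PROJECT_NUMBER:-123456789012}"

# Generate a key pair without a passphrase. The .pub half is what gets
# added as a deploy key on the Config repo.
generate_key() {
  ssh-keygen -t rsa -b 4096 -N '' -f "$KEY_FILE" -C "cloud-build"
}

# Store the private half in Secret Manager.
store_key() {
  gcloud secrets create "$SECRET_NAME" --data-file "$KEY_FILE"
}

# Allow the Cloud Build service account to read the secret.
grant_access() {
  gcloud secrets add-iam-policy-binding "$SECRET_NAME" \
    --member "serviceAccount:${PROJECT_NUMBER}@cloudbuild.gserviceaccount.com" \
    --role roles/secretmanager.secretAccessor
}
```

Running `generate_key`, `store_key`, and `grant_access` in that order covers the private key, public key, and authorization steps from the TL;DR list.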

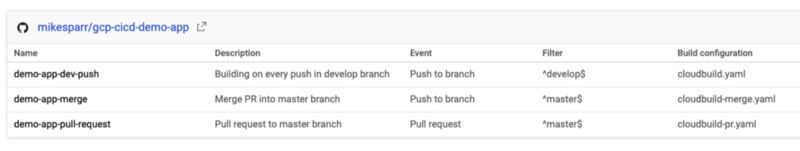

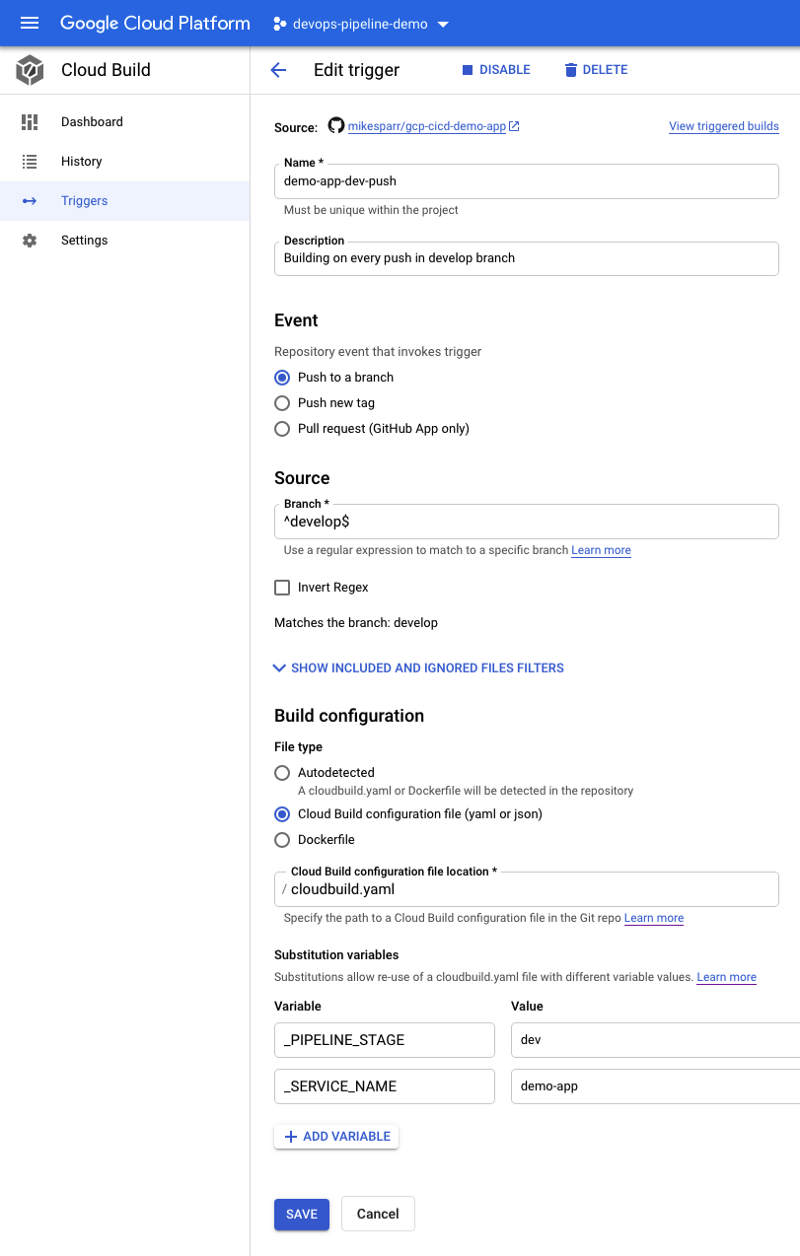

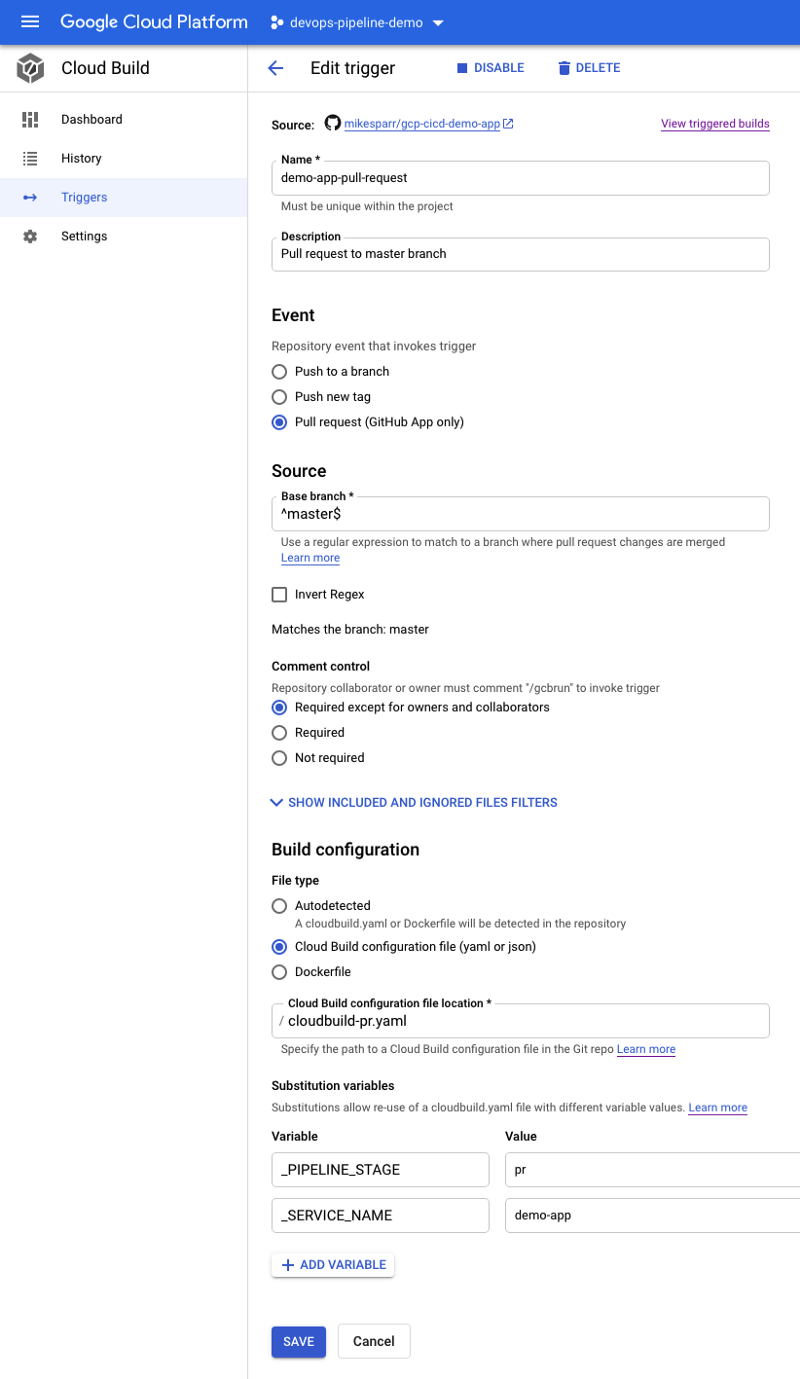

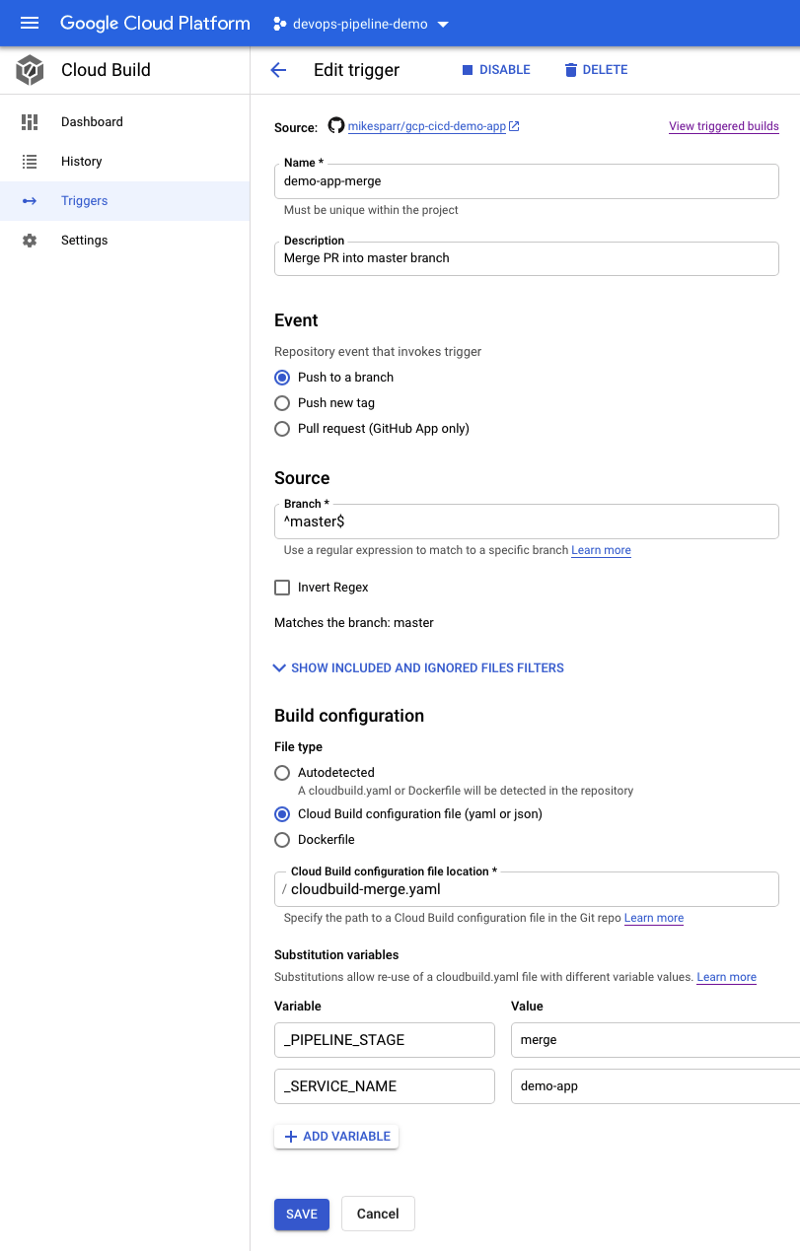

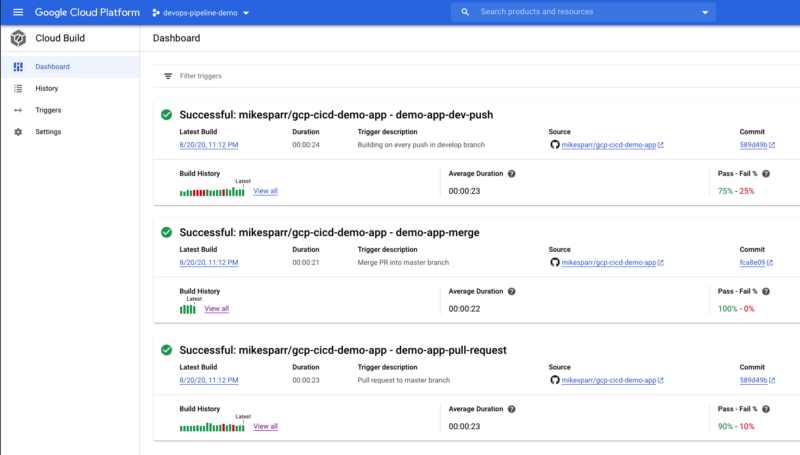

Create 3 Cloud Build triggers

Technically, simulating the desired behavior only requires the PR and merge triggers and their respective config files. Normally, though, you would want app builds and deploys for developers too, so I included a third trigger for pushes to the develop branch.

Note that in the configs below I add user-defined variables (Cloud Build substitutions, at the bottom of each page). These let the config files reference the app name and optionally add conditional steps based on stage.

For simplicity I created 3 triggers and 3 separate config files (next section) but you could consolidate them using these features.
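I set the triggers up in the console, but they could plausibly be created from the command line as well. The sketch below is an untested assumption of how that might look: the repo owner, the master branch name, the substitution names, and every config file name except cloudbuild-pr.yaml are hypothetical.

```shell
# Sketch only: REPO_OWNER, REPO_NAME, the master branch, the substitution
# names, and the develop/merge config file names are assumptions.
REPO_OWNER="${REPO_OWNER:-example-org}"
REPO_NAME="${REPO_NAME:-demo-app}"

create_triggers() {
  # Push to develop: build and deploy the develop environment.
  gcloud builds triggers create github \
    --name "${REPO_NAME}-develop" \
    --repo-owner "$REPO_OWNER" --repo-name "$REPO_NAME" \
    --branch-pattern '^develop$' \
    --build-config cloudbuild-develop.yaml \
    --substitutions "_APP_NAME=${REPO_NAME},_STAGE=develop"

  # Pull request against master: build and deploy the review app.
  gcloud builds triggers create github \
    --name "${REPO_NAME}-pr" \
    --repo-owner "$REPO_OWNER" --repo-name "$REPO_NAME" \
    --pull-request-pattern '^master$' \
    --build-config cloudbuild-pr.yaml \
    --substitutions "_APP_NAME=${REPO_NAME},_STAGE=review"

  # Push to master (the merge): promote and remove the review app.
  gcloud builds triggers create github \
    --name "${REPO_NAME}-merge" \
    --repo-owner "$REPO_OWNER" --repo-name "$REPO_NAME" \
    --branch-pattern '^master$' \
    --build-config cloudbuild-merge.yaml \
    --substitutions "_APP_NAME=${REPO_NAME},_STAGE=production"
}
```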

Add Cloud Build config files to App repo

The initial part of each build config file is pretty basic: it simply builds a Docker image and pushes it to Google Container Registry (GCR). The latter steps use the Secret Manager secret to clone the config repo, make changes to files, and push a commit. This triggers Argo CD to sync the latest changes and update the apps on your GKE cluster.
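To make that shape concrete, here is an abbreviated sketch of such a config, written to a scratch file. It is not the config from the example repo: the file name, the secret name github-deploy-key, and the _APP_NAME substitution are assumptions, and the Git steps are left out.

```shell
# Sketch only: the file name, the secret name and the _APP_NAME
# substitution are assumptions.
APP_DIR="${APP_DIR:-$(mktemp -d)}"

cat > "$APP_DIR/cloudbuild-sketch.yaml" <<'EOF'
steps:
  # 1. Build the image and push it to GCR, tagged with the short commit SHA.
  - name: gcr.io/cloud-builders/docker
    args: ["build", "-t", "gcr.io/$PROJECT_ID/${_APP_NAME}:$SHORT_SHA", "."]
  - name: gcr.io/cloud-builders/docker
    args: ["push", "gcr.io/$PROJECT_ID/${_APP_NAME}:$SHORT_SHA"]

  # 2. Read the deploy key from Secret Manager into a volume that later
  #    steps can mount.
  - name: gcr.io/cloud-builders/gcloud
    entrypoint: bash
    args:
      - -c
      - |
        gcloud secrets versions access latest --secret=github-deploy-key > /root/.ssh/id_github
        chmod 600 /root/.ssh/id_github
    volumes:
      - name: ssh
        path: /root/.ssh

  # 3. Later steps (omitted here) mount the same volume, clone the Config
  #    repo, update the image tag, then commit and push.
EOF

echo "Wrote $APP_DIR/cloudbuild-sketch.yaml"
```

The heredoc delimiter is quoted so the shell leaves `$PROJECT_ID` and `$SHORT_SHA` alone; Cloud Build substitutes them at build time.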

Note that in this file, the sed -i ... command is where the image tag is updated to the latest build pushed to GCR.

Note that in the PR stage we use v$_PR_NUMBER to tag the images so they can be updated on subsequent commits during PR review (I may change this at some point). Another unique aspect is that we copy /templates/demo-app-review.yaml to the /apps folder so Argo CD begins to monitor the target path.
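The exact sed expression is elided above, so the following is only one possible way to write such a tag update. The manifest fragment, image name, and tags are made up for the demonstration.

```shell
# Sketch only: the manifest fragment, image name and tags are illustrative.
WORK_DIR="$(mktemp -d)"
cat > "$WORK_DIR/deployment.yaml" <<'EOF'
    spec:
      containers:
        - name: demo-app
          image: gcr.io/my-project/demo-app:abc1234
EOF

# Replace whatever tag follows the image name with the new one.
update_image_tag() {
  file="$1"; image="$2"; new_tag="$3"
  # -i.bak edits in place on both GNU and BSD sed; the backup is removed.
  sed -i.bak "s|\(image: ${image}\):.*|\1:${new_tag}|" "$file"
  rm -f "$file.bak"
}

update_image_tag "$WORK_DIR/deployment.yaml" "gcr.io/my-project/demo-app" "def5678"
cat "$WORK_DIR/deployment.yaml"
```

In the build pipeline the new tag would come from the build itself, for example the short commit SHA or the PR-based tag.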

What’s unique here is the “Removing review app…” step, where we rm the apps/demo-app-review.yaml file. Since we turned on autoSync and prune in our Application config, Argo CD will detect this and remove the review app from the GKE cluster.
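The PR and merge file operations can be sketched in isolation as below. The directory layout follows the post, but the template content is a stand-in and the function names are hypothetical. In the real pipeline each operation is followed by a Git commit and push to the Config repo.

```shell
# Sketch only: the template body is a stand-in and the function names
# are hypothetical.
REPO_DIR="$(mktemp -d)"
mkdir -p "$REPO_DIR/templates" "$REPO_DIR/apps"
echo "kind: Application  # stand-in for the real template" \
  > "$REPO_DIR/templates/demo-app-review.yaml"

# PR stage: copy the template into /apps so Argo CD starts tracking
# the review app.
create_review_app() {
  cp "$1/templates/demo-app-review.yaml" "$1/apps/demo-app-review.yaml"
}

# Merge stage: delete it again. With automated sync and prune enabled,
# Argo CD then removes the review app from the cluster.
remove_review_app() {
  echo "Removing review app..."
  rm -f "$1/apps/demo-app-review.yaml"
}

create_review_app "$REPO_DIR"
ls "$REPO_DIR/apps"
remove_review_app "$REPO_DIR"
ls "$REPO_DIR/apps"
```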

Add Kubernetes manifests and Kustomize overlays

We could (and should) have another blog post just covering Kustomize and how it enables Kubernetes-native config management. Traditionally you package your apps with Helm charts, or “sonnet” tools using code, but my preference is to keep it simple and extensible with Kustomize.

The Config repo is organized so that each app, in our case /demo-app/base, includes its manifests and a kustomization.yaml file:

- namespace.yaml — custom namespace

- ingress.yaml — Ingress config that Kong detects and adds route

- service.yaml — network “wrapper” for backend pods

- deployment.yaml — your actual app (pointing to image)

- kustomization.yaml — Kustomize config

We then add overlays to represent our environments. These configs replace or patch the YAML files with environment-specific values such as app name suffix, namespace, and Ingress host name (to allow dynamic review app URLs).

- /overlays/develop — develop env configs

- /overlays/review — review app env configs

- /overlays/production — production env configs

You can see these in the example Github config repo, but below is an example of an overlay config and patch file to create environment-specific URLs in the Ingress.

Note the patchesStrategicMerge property points to a file. Kustomize merges the contents of that file (or files) into the base to override values like the Ingress host name below, enabling a custom URL per environment.
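As an illustration of that layout, the sketch below writes a review overlay and its patch file into a scratch directory. The names, namespace, host, and port are assumptions rather than copies from the example repo. Newer Kustomize releases deprecate patchesStrategicMerge in favor of patches, and very old ones expect bases instead of resources, so adjust to your version.

```shell
# Sketch only: names, namespace, host and port are illustrative.
OVERLAY_DIR="$(mktemp -d)/demo-app/overlays/review"
mkdir -p "$OVERLAY_DIR"

cat > "$OVERLAY_DIR/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base
nameSuffix: -review
namespace: demo-app-review
patchesStrategicMerge:
  - ingress-host.yaml
EOF

cat > "$OVERLAY_DIR/ingress-host.yaml" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-app          # must match the name used in the base
spec:
  rules:
    - host: review.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: demo-app
                port:
                  number: 80
EOF

ls "$OVERLAY_DIR"
```

The patch repeats the whole rule rather than only the host, because the Ingress rules list is replaced as a unit during a strategic merge.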

Optional dynamic URLs for each PR

For my initial demo I just used the same URL, but if you use Cloud DNS, add another step to your cloudbuild-pr.yaml file like below to make the URLs dynamic. At the time of this writing I haven’t tested it yet, but I intend to update my nameservers and test that too.

You would also add another sed -i ... command in the config script to dynamically rewrite the ingress-host.yaml patch file on each PR deploy.
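Like the rest of this section, the following is an untested sketch of that host rewrite. The base domain and the pr-<number> naming scheme are assumptions, and the patch file here is a three-line stand-in. In Cloud Build the number would come from the $_PR_NUMBER substitution.

```shell
# Sketch only: BASE_DOMAIN and the pr-<number> naming scheme are assumptions.
BASE_DOMAIN="${BASE_DOMAIN:-example.com}"

# Build the review host name for a given PR number.
review_host() {
  echo "pr-$1.$BASE_DOMAIN"
}

# Rewrite the host line of an Ingress patch file in place.
set_ingress_host() {
  file="$1"; host="$2"
  sed -i.bak "s|^\( *- host:\).*|\1 ${host}|" "$file"
  rm -f "$file.bak"
}

# Stand-in for the ingress-host.yaml patch file.
PATCH_FILE="$(mktemp)"
printf '%s\n' 'spec:' '  rules:' '    - host: review.example.com' > "$PATCH_FILE"

set_ingress_host "$PATCH_FILE" "$(review_host 42)"
cat "$PATCH_FILE"
```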

Summary

Although there is a lot of content in this post, the overall process is not too difficult. By leveraging the example repos I shared, you too could empower your engineers to focus less on infrastructure and more on what they do best.

Join my team

Join DoiT International and create your own amazing discoveries like this one by visiting our careers page

- https://careers.doit.com (remote positions near time zones)