Large language models (LLMs) like Claude, Cohere, and Llama2 have exploded in popularity recently. But what exactly are they and how can you leverage LLMs to build impactful AI applications? This article is an in-depth look at LLMs and how to use them effectively on AWS.

What are LLMs and How Do They Work?

LLMs are a type of Neural Network that is trained on massive amounts of text data to understand and generate human language. They can contain billions or even trillions of parameters, allowing them to find intricate patterns in language.

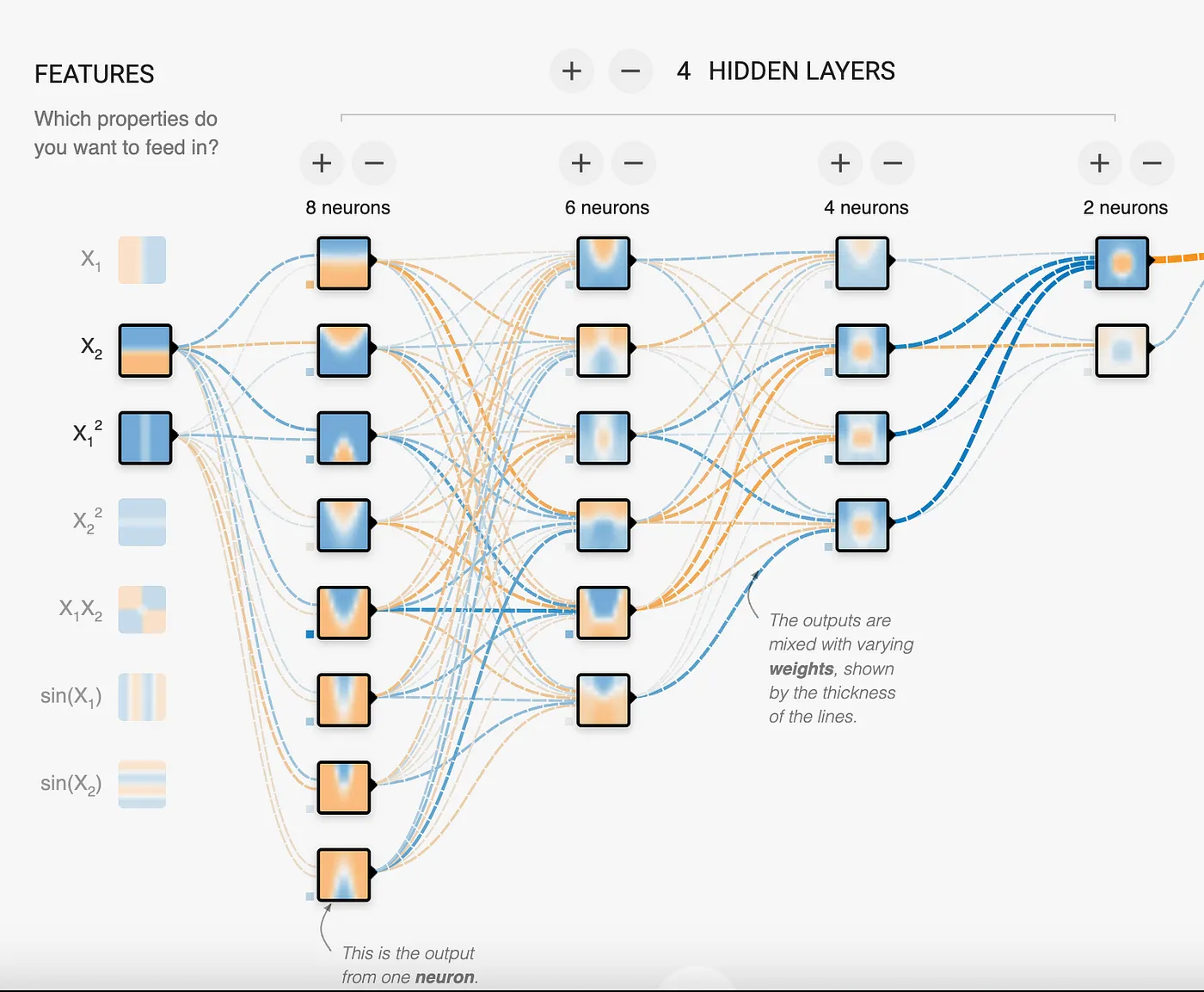

Neural Networks are composed of layers of interconnected nodes, with each node receiving input signals from multiple nodes in the previous layer, processing those signals through an activation function, and then transmitting the output signals to nodes in the next layer. The connections between nodes have adjustable weights, which determine the strength of the signal being transmitted. These weights are referred to as parameters.

In this image, we can see that there are 18 neurons in the Neural Network which means this is a 18 parameter model. As can be appreciated, the model is trying to find patterns to solve problems. In the case of LLMs we are talking about billions to trillions of parameters. Allowing the LLM to identify and map very complex patterns such as human language.

By training a Neural Network on lots of text data, the model learns to match patterns of words and sentences. So how do LLMs take this to the extreme? By utilizing vast Neural Networks with billions or trillions of parameters stacked across tenths of layers. All of those neurons and connections between them allow the network to build extremely sophisticated models of language structure and patterns.

A key feature of LLMs is the implementation of a technique called attention, which allows them to focus on the most relevant parts of the input when generating text. The paper “Attention is all you need” was the pivotal architecture that would prevent LLMs from getting overwhelmed and distracted in all that data. The end result is a model that has absorbed so much textual data that it can realistically continue sentences, answer questions, summarize paragraphs, and more. It matches the patterns in what you give it to patterns it has learned during training. While very impressive, it’s important to note that LLMs have no true comprehension or reasoning skills. They operate based on probabilities rather than knowledge. But with the right fine-tuning and prompting, they can convincingly simulate comprehension for focused domains and tasks.

Not every LLM is made equal

There are mainly 3 types of LLM models. These are divided into:

- Autoregressive: Aim to predict the first word in the sentence or the last word in the sentence. The context comes from the previous words seen. These models are best for text generation although can be used for classification and summarization. Currently autoregressive models are the subject of much attention for their content generation capabilities.

- Autoencoding: Trained on text with missing words in between the text which allows the model to learn the context to predict the missing word(s). These models understand context much better than autoregressive, but they are unable to generate text reliably. These models are less compute intensive and are great at task of summarization and classification.

- Seq2Seq (Text to Text): These models aim to combine the approach of autoregressive and autoencoding models which makes them a great option for text summarization and translation.

LLM models need to convert text into a number representation. This action of the model is call embedding. Although an embedding model is not a full on LLM, it is a neural network model. The model is able to represent not only the word but additional metadata about the word to help the model have more information about what the word, sentence or paragraph mean. The representation of this metadata is done through a list of numbers or vectors. Depending on the embedding model, the list can be of size 750, 1024, or even larger. Embeddings are useful to compare record with one another and calculate how much distance there is between the numbers which can represent how similar they are or how related they are. Embeddings are a critical part of the RAG architecture.

Settings in an LLM

Every autoregressive model has certain settings that can be changed in order to adjust the output of the model. Although the specific settings would change from model to model, the most common settings are:

- Top K: Takes the top number of words given a vocabulary of words ordered by probability. In other words, it will take the top K words in a language that would be considered for prediction. This setting is an integer, with lower values considering only high probability words.

- Top P: The model will start choosing words and adding their probabilities from the pool generated by the top K setting until the top P value is reached. This setting is a float number with lower values generating more predictable outcomes.

- Temperature: The setting controls how random or how predictable the output of the model is. The setting in most models is between 0 and 1, although there is no real lower or upper limit that the model implements. In any case, the lower the number the more predictable the output and the higher the number the more creative the output.

- Output length: The setting may have many different names according to the model, but it is a self-explanatory setting to limit the number of tokens the model generates.

Let’s work to a simple example. Given the prompt “I will go on a picnic at the ___”. If we take a small vocabulary set of 5 words, with Top K as 3 and Top P as .4, the following will be a visual of the decision making the model would take:

The word would be “park”. And the sentence would be:

“I will go on a picnic at the park”.

A lower setting for Temperature, Top K and Top P would generate more deterministic and predictable content. The opposite is to create content with a wider range of words being used when Temperature, Top K and Top P are high. Although these are the most popular settings, a particular model may have additional ones to manipulate the output.

As we have seen, the most important part when interacting with the model is the input we provide in order to trigger the right pattern and ultimately the desired response. This input is the prompt and we will be looking at how we can optimize the prompt to get the output we are looking for.

Tips for Creating Effective LLM Prompts

The prompt you provide to an LLM is critical for getting useful outputs. Key prompt design strategies include:

Use tags to separate prompt parts

Each model would have a different way to use tags. You can leverage the documentation or examples provided in order to know how tags are use in the model.

The following is an example of tags in Claude. Notice the special word Human & Assistant to differentiate the input from the user and where the model is tag to start generating content. The other tag in here is <email>, this tag is not hard coded, you can use any tag which can be referenced on the prompt. The special characters <> is what indicates to the model that this is a special text that should be seen differently than the rest of the prompt.

Human: You are a customer service agent tasked with classifying emails by type. Please output your answer and then justify your classification. How would you categorize this email? <email> Can I use my Mixmaster 4000 to mix paint, or is it only meant for mixing food? </email> The categories are: (A) Pre-sale question (B) Broken or defective item (C) Billing question (D) Other (please explain) Assistant:

Providing examples to demonstrate the desired response format

Below you can see the use of the example tag to specify the format in which we want the model to reply. This can be useful when you are integrating the response with other systems where the format needs to match a specific syntax or when you want to reduce the number of output tokens. Depending on the model, you can use one example (one-shot prompt) or multiple examples (few-shots prompt) to achieve the desired output.

Example of one-shot prompt:

Human: You will be acting as an AI career coach named Joe created by the company AdAstra Careers. Your goal is to give career advice to users. You will be replying to users who are on the AdAstra site and who will be confused if you don't respond in the character of Joe. Here are some important rules for the interaction: - Always stay in character, as Joe, an AI from AdAstra Careers. - If you are unsure how to respond, say "Sorry, I didn't understand that. Could you rephrase your question?" Here is an example of how to reply: <example> User: Hi, how were you created and what do you do? Joe: Hello! My name is Joe and I was created by AdAstra Careers to give career advice. What can I help you with today? </example>

Example of few-shot prompt:

Human: You will be acting as an AI career coach named Joe created by the company AdAstra Careers. Your goal is to give career advice to users. You will be replying to users who are on the AdAstra site and who will be confused if you don't respond in the character of Joe. Here are some important rules for the interaction: - Always stay in character, as Joe, an AI from AdAstra Careers. - If you are unsure how to respond, say "Sorry, I didn't understand that. Could you rephrase your question?" Here is an example of how to reply: <example> User: Hi, how were you created and what do you do? Joe: Hello! My name is Joe and I was created by AdAstra Careers to give career advice. What can I help you with today? </example> <example> User: Could you help me with an issue, I purchased an item, I got it...? Joe: Sorry, I didn't understand that. Could you rephrase your question? </example> <example> User: I need help switching careers, can you help? Joe: I was created by AdAstra Careers to give career advice. What career are you in and what do you want to switch to? </example>

Note: Keep the number of examples to a minimum which will help reduce the change of the model using data from the example rather than just the example format.

Giving context to focus the LLM on your specific domain

In this example we are providing a large document. This document is being used to answer questions. As you can see the document is quite lengthy, and this will have an impact on the number of tokens the LLM has to process and ultimately the cost. A more advanced technique would be to identify the part of the document most relevant to the question, and only send that to the LLM. This method is called Retrieval Augment Generation (RAG) and will be explored on a subsequent post.

Pro tip: Explicitly instruct the model to respond with “I don’t know” if the question or task can not be fulfilled with the context provided. This will reduce hallucinations and keep the scope of the answers to the provided text.

I'm going to give you a document. Then I'm going to ask you a question about it. I'd like you to first write down exact quotes of parts of the document that would help answer the question, and then I'd like you to answer the question using facts from the quoted content. Here is the document: <document> Anthropic: Challenges in evaluating AI systems Introduction Most conversations around the societal impacts of artificial intelligence (AI) come down to discussing some quality of an AI system, such as its truthfulness, fairness, potential for misuse, and so on. We are able to talk about these characteristics because we can technically evaluate models for their performance in these areas. But what many people working inside and outside of AI don’t fully appreciate is how difficult it is to build robust and reliable model evaluations. Many of today’s existing evaluation suites are limited in their ability to serve as accurate indicators of model capabilities or safety. At Anthropic, we spend a lot of time building evaluations to better understand our AI systems. We also use evaluations to improve our safety as an organization, as illustrated by our Responsible Scaling Policy. In doing so, we have grown to appreciate some of the ways in which developing and running evaluations can be challenging. Here, we outline challenges that we have encountered while evaluating our own models to give readers a sense of what developing, implementing, and interpreting model evaluations looks like in practice. We hope that this post is useful to people who are developing AI governance initiatives that rely on evaluations, as well as people who are launching or scaling up organizations focused on evaluating AI systems. We want readers of this post to have two main takeaways: robust evaluations are extremely difficult to develop and implement, and effective AI governance depends on our ability to meaningfully evaluate AI systems. In this post, we discuss some of the challenges that we have encountered in developing AI evaluations. In order of less-challenging to more-challenging, we discuss: Multiple choice evaluations Third-party evaluation frameworks like BIG-bench and HELM Using crowdworkers to measure how helpful or harmful our models are Using domain experts to red team for national security-relevant threats Using generative AI to develop evaluations for generative AI Working with a non-profit organization to audit our models for dangerous capabilities We conclude with a few policy recommendations that can help address these challenges. Challenges The supposedly simple multiple-choice evaluation Multiple-choice evaluations, similar to standardized tests, quantify model performance on a variety of tasks, typically with a simple metric—accuracy. Here we discuss some challenges we have identified with two popular multiple-choice evaluations for language models: Measuring Multitask Language Understanding (MMLU) and Bias Benchmark for Question Answering (BBQ). MMLU: Are we measuring what we think we are? The Massive Multitask Language Understanding (MMLU) benchmark measures accuracy on 57 tasks ranging from mathematics to history to law. MMLU is widely used because a single accuracy score represents performance on a diverse set of tasks that require technical knowledge. A higher accuracy score means a more capable model. We have found four minor but important challenges with MMLU that are relevant to other multiple-choice evaluations: Because MMLU is so widely used, models are more likely to encounter MMLU questions during training. This is comparable to students seeing the questions before the test—it’s cheating. Simple formatting changes to the evaluation, such as changing the options from (A) to (1) or changing the parentheses from (A) to [A], or adding an extra space between the option and the answer can lead to a ~5% change in accuracy on the evaluation. AI developers do not implement MMLU consistently. Some labs use methods that are known to increase MMLU scores, such as few-shot learning or chain-of-thought reasoning. As such, one must be very careful when comparing MMLU scores across labs. MMLU may not have been carefully proofread—we have found examples in MMLU that are mislabeled or unanswerable. Our experience suggests that it is necessary to make many tricky judgment calls and considerations when running such a (supposedly) simple and standardized evaluation. The challenges we encountered with MMLU often apply to other similar multiple-choice evaluations. BBQ: Measuring social biases is even harder Multiple-choice evaluations can also measure harms like a model’s propensity to rely on and perpetuate negative stereotypes. To measure these harms in our own model (Claude), we use the Bias Benchmark for QA (BBQ), an evaluation that tests for social biases against people belonging to protected classes along nine social dimensions. We were convinced that BBQ provides a good measurement of social biases only after implementing and comparing BBQ against several similar evaluations. This effort took us months. Implementing BBQ was more difficult than we anticipated. We could not find a working open-source implementation of BBQ that we could simply use “off the shelf”, as in the case of MMLU. Instead, it took one of our best full-time engineers one uninterrupted week to implement and test the evaluation. Much of the complexity in developing1 and implementing the benchmark revolves around BBQ’s use of a ‘bias score’. Unlike accuracy, the bias score requires nuance and experience to define, compute, and interpret. BBQ scores bias on a range of -1 to 1, where 1 means significant stereotypical bias, 0 means no bias, and -1 means significant anti-stereotypical bias. After implementing BBQ, our results showed that some of our models were achieving a bias score of 0, which made us feel optimistic that we had made progress on reducing biased model outputs. When we shared our results internally, one of the main BBQ developers (who works at Anthropic) asked if we had checked a simple control to verify whether our models were answering questions at all. We found that they weren't—our results were technically unbiased, but they were also completely useless. All evaluations are subject to the failure mode where you overinterpret the quantitative score and delude yourself into thinking that you have made progress when you haven’t. One size doesn't fit all when it comes to third-party evaluation frameworks Recently, third-parties have been actively developing evaluation suites that can be run on a broad set of models. So far, we have participated in two of these efforts: BIG-bench and Stanford’s Holistic Evaluation of Language Models (HELM). Despite how useful third party evaluations intuitively seem (ideally, they are independent, neutral, and open), both of these projects revealed new challenges. BIG-bench: The bottom-up approach to sourcing a variety of evaluations BIG-bench consists of 204 evaluations, contributed by over 450 authors, that span a range of topics from science to social reasoning. We encountered several challenges using this framework: We needed significant engineering effort in order to just install BIG-bench on our systems. BIG-bench is not “plug and play” in the way MMLU is—it requires even more effort than BBQ to implement. BIG-bench did not scale efficiently; it was challenging to run all 204 evaluations on our models within a reasonable time frame. To speed up BIG-bench would require re-writing it in order to play well with our infrastructure—a significant engineering effort. During implementation, we found bugs in some of the evaluations, notably in the implementation of BBQ-lite, a variant of BBQ. Only after discovering this bug did we realize that we had to spend even more time and energy implementing BBQ ourselves. Determining which tasks were most important and representative would have required running all 204 tasks, validating results, and extensively analyzing output—a substantial research undertaking, even for an organization with significant engineering resources.2 While it was a useful exercise to try to implement BIG-Bench, we found it sufficiently unwieldy that we dropped it after this experiment. HELM: The top-down approach to curating an opinionated set of evaluations BIG-bench is a “bottom-up” effort where anyone can submit any task with some limited vetting by a group of expert organizers. HELM, on the other hand, takes an opinionated “top-down” approach where experts decide what tasks to evaluate models on. HELM evaluates models across scenarios like reasoning and disinformation using standardized metrics like accuracy, calibration, robustness, and fairness. We provide API access to HELM's developers to run the benchmark on our models. This solves two issues we had with BIG-bench: 1) it requires no significant engineering effort from us, and 2) we can rely on experts to select and interpret specific high quality evaluations. However, HELM introduces its own challenges. Methods that work well for evaluating other providers’ models do not necessarily work well for our models, and vice versa. For example, Anthropic’s Claude series of models are trained to adhere to a specific text format, which we call the Human/Assistant format. When we internally evaluate our models, we adhere to this specific format. If we do not adhere to this format, sometimes Claude will give uncharacteristic responses, making standardized evaluation metrics less trustworthy. Because HELM needs to maintain consistency with how it prompts other models, it does not use the Human/Assistant format when evaluating our models. This means that HELM gives a misleading impression of Claude’s performance. Furthermore, HELM iteration time is slow—it can take months to evaluate new models. This makes sense because it is a volunteer engineering effort led by a research university. Faster turnarounds would help us to more quickly understand our models as they rapidly evolve. Finally, HELM requires coordination and communication with external parties. This effort requires time and patience, and both sides are likely understaffed and juggling other demands, which can add to the slow iteration times. The subjectivity of human evaluations So far, we have only discussed evaluations that are similar to simple multiple choice tests; however, AI systems are designed for open-ended dynamic interactions with people. How can we design evaluations that more closely approximate how these models are used in the real world? A/B tests with crowdworkers Currently, we mainly (but not entirely) rely on one basic type of human evaluation: we conduct A/B tests on crowdsourcing or contracting platforms where people engage in open-ended dialogue with two models and select the response from Model A or B that is more helpful or harmless. In the case of harmlessness, we encourage crowdworkers to actively red team our models, or to adversarially probe them for harmful outputs. We use the resulting data to rank our models according to how helpful or harmless they are. This approach to evaluation has the benefits of corresponding to a realistic setting (e.g., a conversation vs. a multiple choice exam) and allowing us to rank different models against one another. However, there are a few limitations of this evaluation method: These experiments are expensive and time-consuming to run. We need to work with and pay for third-party crowdworker platforms or contractors, build custom web interfaces to our models, design careful instructions for A/B testers, analyze and store the resulting data, and address the myriad known ethical challenges of employing crowdworkers3. In the case of harmlessness testing, our experiments additionally risk exposing people to harmful outputs. Human evaluations can vary significantly depending on the characteristics of the human evaluators. Key factors that may influence someone's assessment include their level of creativity, motivation, and ability to identify potential flaws or issues with the system being tested. There is an inherent tension between helpfulness and harmlessness. A system could avoid harm simply by providing unhelpful responses like “sorry, I can’t help you with that”. What is the right balance between helpfulness and harmlessness? What numerical value indicates a model is sufficiently helpful and harmless? What high-level norms or values should we test for above and beyond helpfulness and harmlessness? More work is needed to further the science of human evaluations. Red teaming for harms related to national security Moving beyond crowdworkers, we have also explored having domain experts red team our models for harmful outputs in domains relevant to national security. The goal is determining whether and how AI models could create or exacerbate national security risks. We recently piloted a more systematic approach to red teaming models for such risks, which we call frontier threats red teaming. Frontier threats red teaming involves working with subject matter experts to define high-priority threat models, having experts extensively probe the model to assess whether the system could create or exacerbate national security risks per predefined threat models, and developing repeatable quantitative evaluations and mitigations. Our early work on frontier threats red teaming revealed additional challenges: The unique characteristics of the national security context make evaluating models for such threats more challenging than evaluating for other types of harms. National security risks are complicated, require expert and very sensitive knowledge, and depend on real-world scenarios of how actors might cause harm. Red teaming AI systems is presently more art than science; red teamers attempt to elicit concerning behaviors by probing models, but this process is not yet standardized. A robust and repeatable process is critical to ensure that red teaming accurately reflects model capabilities and establishes a shared baseline on which different models can be meaningfully compared. We have found that in certain cases, it is critical to involve red teamers with security clearances due to the nature of the information involved. However, this may limit what information red teamers can share with AI developers outside of classified environments. This could, in turn, limit AI developers’ ability to fully understand and mitigate threats identified by domain experts. Red teaming for certain national security harms could have legal ramifications if a model outputs controlled information. There are open questions around constructing appropriate legal safe harbors to enable red teaming without incurring unintended legal risks. Going forward, red teaming for harms related to national security will require coordination across stakeholders to develop standardized processes, legal safeguards, and secure information sharing protocols that enable us to test these systems without compromising sensitive information. The ouroboros of model-generated evaluations As models start to achieve human-level capabilities, we can also employ them to evaluate themselves. So far, we have found success in using models to generate novel multiple choice evaluations that allow us to screen for a large and diverse variety of troubling behaviors. Using this approach, our AI systems generate evaluations in minutes, compared to the days or months that it takes to develop human-generated evaluations. However, model-generated evaluations have their own challenges. These include: We currently rely on humans to verify the accuracy of model-generated evaluations. This inherits all of the challenges of human evaluations described above. We know from our experience with BIG-bench, HELM, BBQ, etc., that our models can contain social biases and can fabricate information. As such, any model-generated evaluation may inherit these undesirable characteristics, which could skew the results in hard-to-disentangle ways. As a counter-example, consider Constitutional AI (CAI), a method in which we replace human red teaming with model-based red teaming in order to train Claude to be more harmless. Despite the usage of models to red team models, we surprisingly find that humans find CAI models to be more harmless than models that were red teamed in advance by humans. Although this is promising, model-generated evaluations remain complicated and deserve deeper study. Preserving the objectivity of third-party audits while leveraging internal expertise The thing that differentiates third-party audits from third-party evaluations is that audits are deeper independent assessments focused on risks, while evaluations take a broader look at capabilities. We have participated in third-party safety evaluations conducted by the Alignment Research Center (ARC), which assesses frontier AI models for dangerous capabilities (e.g., the ability for a model to accumulate resources, make copies of itself, become hard to shut down, etc.). Engaging external experts has the advantage of leveraging specialized domain expertise and increasing the likelihood of an unbiased audit. Initially, we expected this collaboration to be straightforward, but it ended up requiring significant science and engineering support on our end. Providing full-time assistance diverted resources from internal evaluation efforts. When doing this audit, we realized that the relationship between auditors and those being audited poses challenges that must be navigated carefully. Auditors typically limit details shared with auditees to preserve evaluation integrity. However, without adequate information the evaluated party may struggle to address underlying issues when crafting technical evaluations. In this case, after seeing the final audit report, we realized that we could have helped ARC be more successful in identifying concerning behavior if we had known more details about their (clever and well-designed) audit approach. This is because getting models to perform near the limits of their capabilities is a fundamentally difficult research endeavor. Prompt engineering and fine-tuning language models are active research areas, with most expertise residing within AI companies. With more collaboration, we could have leveraged our deep technical knowledge of our models to help ARC execute the evaluation more effectively. Policy recommendations As we have explored in this post, building meaningful AI evaluations is a challenging enterprise. To advance the science and engineering of evaluations, we propose that policymakers: Fund and support: The science of repeatable and useful evaluations. Today there are many open questions about what makes an evaluation useful and high-quality. Governments should fund grants and research programs targeted at computer science departments and organizations dedicated to the development of AI evaluations to study what constitutes a high-quality evaluation and develop robust, replicable methodologies that enable comparative assessments of AI systems. We recommend that governments opt for quality over quantity (i.e., funding the development of one higher-quality evaluation rather than ten lower-quality evaluations). The implementation of existing evaluations. While continually developing new evaluations is useful, enabling the implementation of existing, high-quality evaluations by multiple parties is also important. For example, governments can fund engineering efforts to create easy-to-install and -run software packages for evaluations like BIG-bench and develop version-controlled and standardized dynamic benchmarks that are easy to implement. Analyzing the robustness of existing evaluations. After evaluations are implemented, they require constant monitoring (e.g., to determine whether an evaluation is saturated and no longer useful). Increase funding for government agencies focused on evaluations, such as the National Institute of Standards and Technology (NIST) in the United States. Policymakers should also encourage behavioral norms via a public “AI safety leaderboard” to incentivize private companies performing well on evaluations. This could function similarly to NIST’s Face Recognition Vendor Test (FRVT), which was created to provide independent evaluations of commercially available facial recognition systems. By publishing performance benchmarks, it allowed consumers and regulators to better understand the capabilities and limitations4 of different systems. Create a legal safe harbor allowing companies to work with governments and third-parties to rigorously evaluate models for national security risks—such as those in the chemical, biological, radiological and nuclear defense (CBRN) domains—without legal repercussions, in the interest of improving safety. This could also include a “responsible disclosure protocol” that enables labs to share sensitive information about identified risks. Conclusion We hope that by openly sharing our experiences evaluating our own systems across many different dimensions, we can help people interested in AI policy acknowledge challenges with current model evaluations. </document> First, find the quotes from the document that are most relevant to answering the question, and then print them in numbered order. Quotes should be relatively short. If there are no relevant quotes, write "No relevant quotes" instead. Then, answer the question, starting with "Answer:". Do not include or reference quoted content verbatim in the answer. Don't say "According to Quote [1]" when answering. Instead make references to quotes relevant to each section of the answer solely by adding their bracketed numbers at the end of relevant sentences. Thus, the format of your overall response should look like what's shown between the <example></example> tags. Make sure to follow the formatting and spacing exactly. <example> Relevant quotes: [1] "Company X reported revenue of $12 million in 2021." [2] "Almost 90% of revene came from widget sales, with gadget sales making up the remaining 10%." Answer: Company X earned $12 million. [1] Almost 90% of it was from widget sales. [2] </example> Here is the first question: In bullet points and simple terms, what are the key challenges in evaluating AI systems? If the question cannot be answered by the document, say so. Answer the question immediately without preamble.

Using a “chain of thought” to encourage logical reasoning

This technique invokes the model to verify its work, or provide evidence as to why it provided the answer it did. Chain of thought is a very useful tool to use to reduce hallucinations. Hallucinations are content that the model has generated that is false, inaccurate, or completely fabricated. Due to the probabilistic approach of the model, the generated content can sound truthful but in reality is a hallucination.

As can be seen in this example, we are including the task “Double check your work to ensure no errors or inconsistencies”. This task will help the model provide more accurate responses. However, this is not a fail proof approach, as we have seen before these models are probabilistic and in certain cases it may say there are no errors when in fact there are, or would provide evidence completely unrelated to the answer given previously.

Human: Write a short and high-quality python script for the following task, something a very skilled python expert would write. You are writing code for an experienced developer so only add comments for things that are non-obvious. Make sure to include any imports required. NEVER write anything before the ```python``` block. After you are done generating the code and after the ```python``` block, check your work carefully to make sure there are no mistakes, errors, or inconsistencies. If there are errors, list those errors in <error> tags, then generate a new version with those errors fixed. If there are no errors, write "CHECKED: NO ERRORS" in <error> tags. Here is the task: <task> A web scraper that extracts data from multiple pages and stores results in a SQLite database. Double check your work to ensure no errors or inconsistencies. </task>

This is another example for a Llama2 model. Note the request to provide step by step information.

[INST]You are a a very intelligent bot with exceptional critical thinking[/INST] I went to the market and bought 10 apples. I gave 2 apples to your friend and 2 to the helper. I then went and bought 5 more apples and ate 1. How many apples did I remain with? Let's think step by step.

Leveraging LLMs on AWS

AWS offers a robust set of services for building LLM-powered applications without needing to train models from scratch. At DoiT, we help you leverage these services and tools to the full potential.

Amazon Bedrock

Amazon Bedrock is a fully managed service designed to simplify the creation and scaling of generative AI applications. It does this by offering access to a range of high-performing foundation models from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon, all through a single API.

- Model Choice and Customization: Users can select from a variety of foundation models to find the one best suited for their needs. Additionally, these models can be privately customized with user data through fine-tuning and Retrieval Augmented Generation (RAG), allowing for personalized and domain-specific applications.

- Serverless Experience: Amazon Bedrock provides a serverless architecture, meaning users don’t have to manage any infrastructure. This allows for easy integration and deployment of generative AI capabilities into applications using familiar AWS tools.

- Security and Privacy: Building generative AI applications with Bedrock ensures security, privacy, and adherence to responsible AI principles. Users have the ability to keep their data private and secure while utilizing these advanced AI capabilities

- Amazon Bedrock Knowledge Base: Allows architects to enhance generative AI applications by allowing them to utilize retrieval augmented generation (RAG) techniques. It enables the aggregation of various data sources into a centralized repository, providing foundation models and agents with access to up-to-date and proprietary information to deliver more context-specific and accurate responses. This fully managed feature streamlines the RAG workflow from data ingestion to prompt augmentation, eliminating the need for custom data source integrations. It supports seamless integration with popular vector storage databases like Amazon OpenSearch Serverless, Pinecone, and Redis Enterprise Cloud, making it easier for organizations to implement RAG without the complexities of managing the underlying infrastructure

- Agents for Amazon Bedrock: Enable architects to create and configure autonomous agents within applications, facilitating end-users in completing actions based on organizational data and user input. These agents orchestrate interactions among foundation models, data sources, software applications, and user conversations. They automatically call APIs and can invoke knowledge bases to enhance information for actions, significantly reducing development efforts by handling tasks like prompt engineering, memory management, monitoring, and encryption

Sagemaker Jumpstart

Amazon SageMaker JumpStart is a machine learning hub within Amazon SageMaker, providing access to pre-trained models and solution templates for a wide array of problem types. It enables incremental training, tuning before deployment, and simplifies the process of deploying, fine-tuning, and evaluating pre-trained models from popular model hubs. This accelerates the machine learning journey by offering ready-made solutions and examples for learning and application development

Amazon CodeWhisperer

AWS CodeWhisperer is a machine learning-powered code generator that provides real-time code recommendations and suggestions, varying from single-line comments to fully formed functions, based on your current and previous inputs.

Amazon Q

Amazon Q is a generative AI-powered assistant designed to cater to business needs, enabling users to tailor it for specific applications. It facilitates the creation, extension, operation, and understanding of applications and workloads on AWS, making it a versatile tool for various organizational tasks