If you’re trying to get a grip on anomaly detection with machine learning but facing challenges, you’re certainly not alone. Maybe you’ve spent hours trying to figure out why your cloud costs suddenly spiked last month, or you’re struggling to tell the difference between normal traffic surges and potential security threats. These are common hurdles that many teams face.

Unanticipated data patterns in cloud environments can easily tank your operational efficiency and cost you thousands if you don’t address them quickly. What do these anomalies look like? A few common examples are sudden, unexplained increases in cloud expenses, irregular system behavior that degrades performance, and potential security threats that put sensitive data at risk.

The general complexity of cloud systems adds another layer of difficulty. With many services and interdependent components, it’s challenging to pinpoint the root cause of any issue that arises. Yet to keep operations running smoothly, enhance system reliability, and mitigate risks, engineering teams need to detect and respond to these outliers quickly and accurately.

So, what exactly is anomaly detection, and how does it work within the context of machine learning? In this article, we’ll take a look at different techniques used for anomaly detection in machine learning models and explore various ways it can be beneficial.

What is anomaly detection?


At its core, anomaly detection is the science of identifying unusual patterns that don’t match up with expected behavior. Think of it as your cloud infrastructure’s immune system, constantly monitoring, learning, and flagging potentially problematic deviations from the norm. But what exactly should you be watching for?

When it comes to your cloud environment, anomalies can surface in two critical areas: operations and costs. Sometimes these go hand in hand—an unexpected spike in traffic might drive both performance issues and increased expenses. But here’s the interesting part: cost anomalies don’t always stem from operational changes. You might find yourself facing sudden cost increases that have nothing to do with system performance or user activity.

Why does this matter? Because catching these financial surprises early can save you hundreds or thousands in unexpected cloud expenses. Unlike traditional monitoring systems that rely on static thresholds, today’s outlier detection capabilities leverage sophisticated machine learning algorithms to adapt to dynamic environments.
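
To see why static thresholds struggle, here is a minimal Python sketch comparing a fixed limit with a rolling baseline. The daily cost figures, the $120 limit, the seven-day window, and the three-standard-deviation band are illustrative assumptions, not values from any particular product.

```python
from statistics import mean, stdev


def static_alerts(values, limit):
    """Flag every index whose value exceeds a fixed limit."""
    return [i for i, v in enumerate(values) if v > limit]


def rolling_alerts(values, window=7, k=3.0):
    """Flag values more than k standard deviations from the mean of the
    preceding `window` values, so the baseline follows gradual growth."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) > k * sigma:
            flagged.append(i)
    return flagged


# Hypothetical daily spend in dollars: steady organic growth plus one real spike on day 12.
daily_cost = [100, 102, 104, 106, 108, 110, 112, 114, 116, 118, 120, 122, 190, 126, 128]

print(static_alerts(daily_cost, limit=120))  # [11, 12, 13, 14]
print(rolling_alerts(daily_cost))            # [12]
```

The fixed limit fires on ordinary growth (days 11, 13, and 14) as well as on the real spike, while the rolling baseline flags only the spike. One caveat: the spike itself inflates the next few windows, so a production system would typically exclude flagged points from its baseline.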

This adaptive approach is huge in cloud infrastructures where “normal” behavior can change dramatically based on the time of day, day of week, or seasonal patterns. For instance, an ecommerce platform might see perfectly normal (and expected) traffic spikes during a popular annual sale that would trigger alerts on any other day of the year. By leveraging machine learning and artificial intelligence, anomaly detection can accurately identify these patterns and exceptions, distinguishing between normal and abnormal behavior—whether they’re affecting your operations, your costs, or both.

Types of anomalies to watch out for

As you manage your cloud environment, you’ll encounter various types of anomalies across three major categories: security threats that could compromise your systems, operational issues that affect performance, and cost spikes that impact your bottom line. But how do you identify and address each type effectively?

Here are some fundamental patterns these anomalies can take:

Point anomalies are your sudden, dramatic changes—the ones that make you do a double take. Think of a 10x spike in API calls or an unexpected surge in data transfer costs. While these sharp deviations are easy to spot, the tricky part is telling the difference between a legitimate spike from your successful marketing campaign and a potential security breach.
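
A simple point-anomaly rule can be sketched as a ratio test against a trailing median, echoing the 10x API-call example above. The hourly counts, the five-hour window, and the `ratio=10.0` cutoff are hypothetical choices for illustration.

```python
from statistics import median


def spike_points(counts, window=5, ratio=10.0):
    """Return indices where a value is at least `ratio` times the median
    of the preceding `window` values."""
    hits = []
    for i in range(window, len(counts)):
        baseline = median(counts[i - window:i])  # robust: one spike barely moves it
        if baseline > 0 and counts[i] >= ratio * baseline:
            hits.append(i)
    return hits


# Hypothetical hourly API-call counts with one sudden burst at index 7.
api_calls = [1200, 1150, 1300, 1250, 1180, 1220, 1275, 13500, 1240, 1210]
print(spike_points(api_calls))  # [7]
```

A median is used instead of a mean so the burst does not distort the baseline for the hours that follow it. The rule only finds the spike, though; telling a successful campaign from a breach still takes context.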

Contextual anomalies require you to think about timing and circumstances. That high CPU utilization? It might be perfectly normal during your peak business hours but could signal a serious problem at 3 a.m. These anomalies demand smarter detection systems that understand your business context, not just raw data. A good starting point is to ask yourself: when does “unusual” actually mean “concerning” for your specific operations?
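
One way to encode context, sketched here under simple assumptions, is to keep a separate baseline per hour of the day and judge each reading only against its own hour. The CPU history, the `k=3.0` band, and the one-point floor on the standard deviation are hypothetical; hours with no history would raise a `KeyError` in this sketch.

```python
from collections import defaultdict
from statistics import mean, stdev


def hourly_baseline(history):
    """Build a (mean, stdev) CPU baseline per hour of day from (hour, cpu_percent) pairs."""
    by_hour = defaultdict(list)
    for hour, cpu in history:
        by_hour[hour].append(cpu)
    return {h: (mean(v), stdev(v)) for h, v in by_hour.items() if len(v) >= 2}


def is_contextual_anomaly(baseline, hour, cpu, k=3.0):
    """Compare a reading with the baseline for its own hour, not a global average."""
    mu, sigma = baseline[hour]
    # Floor sigma at 1 point so very stable hours don't produce hair-trigger alerts.
    return abs(cpu - mu) > k * max(sigma, 1.0)


# Hypothetical history: busy afternoons, quiet nights.
history = [(14, 85), (14, 88), (14, 90), (14, 87), (3, 10), (3, 12), (3, 9), (3, 11)]
baseline = hourly_baseline(history)

print(is_contextual_anomaly(baseline, 14, 89))  # False: normal at 2 p.m.
print(is_contextual_anomaly(baseline, 3, 89))   # True: same reading at 3 a.m.
```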

Collective anomalies are perhaps the most sophisticated (and potentially dangerous) to watch for. Imagine multiple data points that look innocent on their own but reveal a troubling pattern when viewed together. A classic example would be a DDoS attack, where individual requests seem normal, but their collective behavior exposes the threat. It’s about spotting a coordinated effort rather than a single suspicious action.
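
The core idea of collective detection is aggregation: judge a group of events rather than each event alone. The sketch below counts requests in fixed ten-second windows and flags windows far busier than the median window. The timestamps, window size, and `factor=5.0` are hypothetical, and a real DDoS detector would typically combine this with other signals such as source-address diversity.

```python
from collections import Counter
from statistics import median


def busy_windows(timestamps, window_s=10, factor=5.0):
    """Group request timestamps (seconds) into fixed windows and return the start
    time of each window whose count is at least `factor` times the median count.
    No single request is unusual; only the aggregate volume is."""
    counts = Counter(int(t // window_s) for t in timestamps)
    typical = median(counts.values())
    return sorted(w * window_s for w, c in counts.items() if c >= factor * typical)


# Hypothetical traffic: a steady 2 requests/second for a minute, plus a burst of
# 180 ordinary-looking requests between t=30s and t=39s.
timestamps = [i * 0.5 for i in range(120)] + [30 + i * 0.05 for i in range(180)]
print(busy_windows(timestamps))  # [30]
```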

3 anomaly detection techniques: How to spot issues early


The catalog of anomaly detection techniques is rich and varied, with each approach offering distinct advantages for different situations. Given this diversity, consider which of the following approaches makes the most sense for your cloud environment.

1. Supervised detection

Think of supervised detection as training your system with a detailed playbook. You’ll need labeled data showing both normal operations and known anomalies. While this approach offers high accuracy for familiar issues, the catch comes in identifying rare events or threats you’ve never seen before. It’s like training a security guard using photos of known thieves—great for spotting familiar faces but far less effective when a new criminal shows up.
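
To make the playbook analogy concrete, here is a deliberately small supervised sketch: a nearest-centroid classifier trained on labeled examples. It stands in for whatever supervised model a real system would use, and the features (CPU % and egress GB/hour) and labels are hypothetical.

```python
from math import dist


def fit_centroids(samples):
    """Average the feature vectors of each label in a labeled training set."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for j, x in enumerate(features):
            acc[j] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled history: (CPU %, egress GB/hour). The only known anomaly is CPU runaway.
training = [
    ((40, 5), "normal"), ((45, 4), "normal"), ((35, 6), "normal"), ((40, 5), "normal"),
    ((95, 6), "anomaly"), ((97, 5), "anomaly"), ((93, 7), "anomaly"),
]
centroids = fit_centroids(training)

print(classify(centroids, (96, 5)))   # anomaly: matches a pattern seen in training
print(classify(centroids, (42, 5)))   # normal
print(classify(centroids, (35, 80)))  # normal: a never-seen egress surge slips through
```

The last case is the "new criminal" problem: the model only knows the anomaly types it was shown.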

2. Unsupervised detection

Unsupervised anomaly detection has become the go-to choice for cloud environments because it doesn’t require labeled examples of past anomalies to work effectively. When you’re migrating your applications to the cloud, you rarely have historical training data showing what anomalies look like. Think about it: How could you possibly have labeled examples of cost spikes or performance issues for a system that’s just getting started?

Instead of relying on past examples, unsupervised learning methods determine what’s “normal” by observing your patterns over time. They operate on a simple but powerful principle: anomalies are rare and notably different from regular patterns. This flexibility makes unsupervised detection particularly valuable as your cloud environment evolves and new types of anomalies emerge.
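
That principle can be sketched with a classic label-free technique, a k-nearest-neighbor distance score: points that sit far from their neighbors are rare and different, so they score high. This is one illustrative unsupervised method among many, and the observations below are hypothetical.

```python
from math import dist


def knn_scores(points, k=2):
    """Score each point by the distance to its k-th nearest neighbor (no labels needed)."""
    scores = []
    for i, p in enumerate(points):
        distances = sorted(dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(distances[k - 1])
    return scores


def top_outliers(points, k=2, n=1):
    """Return the indices of the n highest-scoring points."""
    scores = knn_scores(points, k)
    return sorted(range(len(points)), key=lambda i: scores[i], reverse=True)[:n]


# Hypothetical unlabeled observations: (requests/s, p95 latency in ms).
obs = [(100, 120), (105, 118), (98, 125), (102, 121), (101, 119), (99, 400)]
print(top_outliers(obs))  # [5]
```

This brute-force version compares every pair of points, which is fine for a sketch but grows quadratically with data size.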

3. Semi-supervised detection

Want the best of both worlds? Semi-supervised detection offers exactly that. By using just a small amount of labeled data to improve accuracy while maintaining flexibility, this hybrid approach has gained popularity in cloud environments. You get the benefit of any historical knowledge you might have while still being able to catch new, unexpected issues.
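
One simple way to blend the two, sketched under stated assumptions: an unsupervised detector produces anomaly scores, and a handful of labeled examples is used only to pick the alert threshold. The scores and labels below are hypothetical, and this is just one hybrid design; another common one trains exclusively on data known to be normal.

```python
def best_threshold(scores, labels):
    """Pick the score threshold that maximizes F1 on a small labeled set.

    `scores` come from an unsupervised detector; `labels` are True for confirmed anomalies."""
    best_t, best_f1 = None, -1.0
    for t in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and not y)
        fn = sum(1 for s, y in zip(scores, labels) if s < t and y)
        f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
        if f1 > best_f1:
            best_t, best_f1 = t, f1
    return best_t, best_f1


# Hypothetical scores for eight events, two of which an engineer confirmed as real incidents.
scores = [0.10, 0.20, 0.15, 0.90, 0.30, 0.85, 0.40, 0.25]
labels = [False, False, False, True, False, True, False, False]
print(best_threshold(scores, labels))  # (0.85, 1.0)
```

With so few labels the chosen threshold can overfit, so it is worth revisiting as more confirmed incidents accumulate.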

The reality is that most cloud anomaly detection systems lean heavily toward unsupervised learning, because it matches the real-world challenges you face. When you’re dealing with dynamic cloud systems, waiting to accumulate enough labeled examples of anomalies isn’t practical. You need a system that can start protecting your environment immediately, learning what normal data looks like and adapting as it goes.

Common algorithms for anomaly detection

Anomaly detection systems use a sophisticated array of algorithms, each bringing unique strengths to the table in identifying unusual patterns within the data. Statistical methods such as Z-score analysis and interquartile range (IQR) calculations provide a solid foundation, particularly for real-time detection needs. These approaches, often implemented in Python, are highly effective at pinpointing immediate, clear deviations from typical behavior, making them well suited to scenarios where quick action is required (such as fraud detection or network monitoring). Their simplicity and efficiency make them a preferred choice for datasets with relatively straightforward distributions.
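
Both statistical rules fit in a few lines of standard-library Python. The latency values are hypothetical; the cutoffs (3 standard deviations, 1.5 × IQR fences) are common conventions rather than fixed requirements.

```python
from statistics import mean, pstdev, quantiles


def zscore_outliers(values, k=3.0):
    """Values more than k population standard deviations from the mean."""
    mu, sigma = mean(values), pstdev(values)
    return [v for v in values if sigma > 0 and abs(v - mu) / sigma > k]


def iqr_outliers(values, fence=1.5):
    """Values outside [Q1 - fence*IQR, Q3 + fence*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - fence * iqr, q3 + fence * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical p95 latencies (ms) with one slow outlier.
latencies = [120, 118, 125, 121, 119, 122, 117, 123, 120, 121,
             119, 124, 118, 122, 120, 121, 119, 123, 120, 480]

print(zscore_outliers(latencies))  # [480]
print(iqr_outliers(latencies))     # [480]
```

One reason IQR is often preferred on small samples: the outlier inflates the standard deviation it is measured against, and with ten or fewer points a single outlier can never reach a population z-score of 3.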

Machine learning techniques take anomaly detection to the next level by incorporating advanced pattern recognition capabilities. Isolation forests, for example, are an unsupervised, tree-based ensemble method designed specifically for anomaly detection. Like random forests, they combine many randomized decision trees, but instead of predicting a label or value, each tree keeps splitting the data at random until individual points are isolated. Because anomalies are rare and different, they tend to be isolated in far fewer splits than normal data points. This makes isolation forests efficient, highly scalable, and suitable for large datasets with complex structures.
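
The isolation idea can be sketched from scratch in plain Python. This is a simplified take on the published algorithm (random feature, random split value, depth limit of log2 of the subsample size, score 2^(-E[h]/c(n))); in practice you would more likely use a maintained library such as scikit-learn’s `IsolationForest`. The 2-D data points are hypothetical.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def avg_path(n):
    """Expected path length of an unsuccessful BST search over n points (score normalizer)."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2 * (math.log(n - 1) + EULER_GAMMA) - 2 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Split on a random feature at a random value until points are isolated or depth runs out."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo, hi = min(p[dim] for p in points), max(p[dim] for p in points)
    if lo == hi:
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "split": split,
        "left": build_tree([p for p in points if p[dim] < split], depth + 1, max_depth, rng),
        "right": build_tree([p for p in points if p[dim] >= split], depth + 1, max_depth, rng),
    }


def path_length(node, x, depth=0):
    """Depth at which x reaches a leaf, plus a correction for points left unsplit there."""
    if "size" in node:
        return depth + avg_path(node["size"])
    child = node["left"] if x[node["dim"]] < node["split"] else node["right"]
    return path_length(child, x, depth + 1)


def isolation_scores(points, n_trees=100, sample_size=64, seed=0):
    """Score in (0, 1] per point; well above 0.5 suggests an anomaly. Needs >= 2 points."""
    rng = random.Random(seed)
    psi = min(sample_size, len(points))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(points, psi), 0, max_depth, rng) for _ in range(n_trees)]
    norm = avg_path(psi)
    return [2 ** (-sum(path_length(t, x) for t in trees) / n_trees / norm) for x in points]


# Hypothetical metrics: a tight 5x5 grid of normal points plus one far-away point.
data = [(10 + i % 5, 10 + i // 5) for i in range(25)] + [(50, 50)]
scores = isolation_scores(data)
print(max(range(len(data)), key=scores.__getitem__))  # 25
```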

Deep learning techniques, particularly autoencoders, add yet another layer of sophistication. An autoencoder learns to compress its input and then reconstruct it; trained on normal data, it reconstructs normal inputs well and unusual ones poorly, so a high reconstruction error signals an anomaly. This lets autoencoders learn intricate normal patterns within high-dimensional data and flag even subtle deviations from them, such as those found in healthcare imaging or cybersecurity threat detection.
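
A neural autoencoder needs a deep learning framework, but the reconstruction-error idea can be shown without one. A linear autoencoder with a one-unit bottleneck, trained on squared error, learns the same direction as the top principal component, so this sketch uses that as a stand-in. The two correlated metrics are hypothetical.

```python
import math


def fit_linear_autoencoder(points, iters=100):
    """'Train' on normal 2-D points: find their mean and top principal direction
    by power iteration on the 2x2 covariance matrix."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    vx, vy = 1.0, 1.0  # arbitrary starting vector
    for _ in range(iters):
        vx, vy = sxx * vx + sxy * vy, sxy * vx + syy * vy
        norm = math.hypot(vx, vy)
        vx, vy = vx / norm, vy / norm
    return (mx, my), (vx, vy)


def reconstruction_error(model, point):
    """Encode the point to one number, decode it back, and measure what was lost."""
    (mx, my), (vx, vy) = model
    x, y = point
    code = (x - mx) * vx + (y - my) * vy     # encoder: 2-D -> 1-D
    rx, ry = mx + code * vx, my + code * vy  # decoder: 1-D -> 2-D
    return math.hypot(x - rx, y - ry)


# Hypothetical normal behavior: two metrics that move together (y is roughly 2x).
normal = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 7.9), (5, 10.1), (6, 11.8)]
model = fit_linear_autoencoder(normal)

print(round(reconstruction_error(model, (3.5, 7.0)), 1))  # 0.0: fits the learned pattern
print(round(reconstruction_error(model, (3.5, 1.0)), 1))  # 2.7: breaks the relationship
```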

For data with a temporal dimension, time series–specific methods like ARIMA (Autoregressive Integrated Moving Average) and Prophet come into play. These algorithms are tailored to handle the time-dependent aspects of data. This is especially critical for applications like cloud performance monitoring, stock market data analysis, and energy usage forecasting, where patterns often follow daily, weekly, or seasonal trends.

ARIMA is widely used for modeling linear temporal relationships, while Prophet (developed by Facebook) shines when working with datasets containing missing values or irregular trends. Both forecast an expected range for upcoming values based on past behavior, so observations that fall outside that range can be flagged as anomalies.
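
The forecast-then-compare pattern behind these tools can be sketched without them. The example below swaps in a deliberately simple seasonal naive forecast (same weekday last week) in place of ARIMA or Prophet, sizes the expected range from past forecast errors, and flags points outside it. The traffic data, `k=3.0`, and the `min_sigma` floor are hypothetical.

```python
from statistics import pstdev


def seasonal_band_alerts(series, season=7, k=3.0, min_sigma=1.0):
    """Flag points outside a forecast band.

    Forecast: the value one season earlier (seasonal naive).
    Band: k times the spread of past forecast errors, floored at min_sigma."""
    flagged = []
    for i in range(2 * season, len(series)):
        residuals = [series[j] - series[j - season] for j in range(season, i)]
        band = k * max(pstdev(residuals), min_sigma)
        forecast = series[i - season]
        if abs(series[i] - forecast) > band:
            flagged.append(i)
    return flagged


# Hypothetical daily requests (thousands) over three weeks: busy weekdays, quiet weekends.
daily = [
    100, 104, 98, 102, 101, 40, 42,
    102, 101, 100, 99, 103, 41, 44,
    101, 103, 99, 160, 100, 43, 40,
]
print(seasonal_band_alerts(daily))  # [17]
```

The quiet weekends are not flagged because each forecast comes from the same weekday a week earlier. ARIMA and Prophet model trend and seasonality far more carefully, but the alerting step works the same way.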

Together, these anomaly detection algorithms form a powerful tool kit. They are capable of addressing a wide range of challenges across industries and ensuring systems continue to operate efficiently and securely.

Real-world applications of anomaly detection


The applications of anomaly detection in cloud environments extend far beyond simple monitoring. In cloud cost management, these systems help organizations catch unexpected spending patterns and optimize workloads before they affect the bottom line. That was the case for Moralis, a Web3 development platform that partnered with DoiT to drive better efficiencies and realized 10% cost savings upon implementation. A similar proactive approach can help your company avoid thousands in potential cloud cost overruns.

In infrastructure monitoring, anomaly detection serves as an early warning system for potential problems. By catching subtle deviations in performance metrics, these systems can help teams anticipate and prevent outages before they happen. This predictive capability moves infrastructure management from reactive to proactive, minimizing downtime and boosting system reliability.

Security teams can use anomaly detection to identify potential breaches and unusual access patterns. This ability to detect even the subtlest of deviations from normal behavior patterns has become vital in an era where sophisticated cyberattacks often hide within activity that appears to be normal.

Challenges in anomaly detection

As with any technology, there are pros and cons to implementing anomaly detection systems. These systems are designed to identify unusual patterns or behaviors in data, which can provide significant benefits like early detection of issues, prevention of potential outages, and improved operational efficiency. However, getting these systems to detect the right anomalies while keeping false positives low can be a challenge.

The data-quality dilemma

One of the first hurdles is data quality and quantity. To establish reliable baseline patterns, you need to have access to large amounts of clean, relevant data. Without it, it’s likely that the system will end up struggling to differentiate between normal variations and true anomalies. Poor data can lead to inaccurate results, undermining the system’s effectiveness right from the start.

The false-positive problem

Another common challenge is dealing with false positives. Anomaly detection systems often flag events as suspicious, even when they don’t represent real issues. This can result in alert fatigue, where users become desensitized and annoyed with frequent notifications, ultimately making them more likely to miss critical alerts. Striking the right balance between sensitivity and precision is key to maintaining the effectiveness of the system.
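
One common lever on this trade-off is debouncing: alert only when a metric stays anomalous for several consecutive samples, and alert once per episode. The sketch below assumes per-sample flags from any detector; `min_consecutive=3` is a hypothetical setting.

```python
def debounced_alerts(flags, min_consecutive=3):
    """Turn per-sample anomaly flags into alerts, firing once when a run of
    flagged samples first reaches `min_consecutive`."""
    alerts, run = [], 0
    for i, flagged in enumerate(flags):
        run = run + 1 if flagged else 0
        if run == min_consecutive:
            alerts.append(i)
    return alerts


# Hypothetical per-minute flags: isolated blips plus one sustained episode.
flags = [False, True, False, True, True, True, True, False, True, False]
print(debounced_alerts(flags))  # [5]: six raw flags become one alert
```

The cost is latency and sensitivity: alerts arrive `min_consecutive - 1` samples later, and a genuine but very short spike may never alert at all.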

The cost factor

Then there’s the computational cost challenge. Processing massive amounts of data—from your cost and usage reports (CUR) to system logs and metrics—requires significant computing power. The training phase alone can be computationally expensive, often making it impractical for enterprises to handle in-house. This is where partnering with a specialized provider like DoiT can make a difference, taking this heavy lifting off your shoulders.

Cloud environment complexity

The dynamic nature of cloud environments further complicates any anomaly detection implementation. Usage patterns in the cloud can change frequently, services are highly interdependent, and resource consumption varies widely depending on demand. These fluctuations make it harder to establish and maintain true baseline patterns. A successful anomaly detection system needs to be able to adapt to these changing conditions while maintaining the accuracy needed to identify actual anomalies.
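
A common way to let a baseline follow a changing environment is an exponentially weighted moving average, where older observations fade out geometrically. This sketch keeps an exponentially weighted mean and variance; `alpha=0.1`, `k=3.0`, the warm-up length, and the drifting series are hypothetical. It folds every point, anomalous or not, into the baseline so that genuine level shifts are eventually absorbed.

```python
class EwmaDetector:
    """Adaptive baseline: exponentially weighted mean and variance."""

    def __init__(self, alpha=0.1, k=3.0, warmup=8):
        self.alpha, self.k, self.warmup = alpha, k, warmup
        self.mean, self.var, self.n = 0.0, 0.0, 0

    def update(self, x):
        """Score x against the current baseline, then fold it into the baseline."""
        self.n += 1
        if self.n == 1:
            self.mean = float(x)
            return False
        diff = x - self.mean
        is_anomaly = self.n > self.warmup and abs(diff) > self.k * self.var ** 0.5
        # Incremental exponentially weighted mean and variance update.
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return is_anomaly


# Hypothetical metric that drifts upward steadily, with one sudden jump at t=30.
series = [100 + t for t in range(40)]
series[30] = 190
detector = EwmaDetector()
print([t for t, x in enumerate(series) if detector.update(x)])  # [30]
```

The steady drift never triggers an alert because the baseline moves with it, while the sudden jump does.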

Integration and workflow alignment

Integrating anomaly detection systems into existing workflows can also pose challenges. You need to ensure that such systems align with operational processes already in place. It’s also important that these systems provide actionable insights that teams can act on quickly. Without proper integration and user training, even the best anomaly detection system may fail to deliver its full value.

Even with these challenges, though, the potential benefits of anomaly detection systems make them a valuable tool if your organization is looking to improve reliability and efficiency in its cloud environment. By being aware of and addressing these obstacles through thoughtful planning and robust implementation strategies, your business can unlock the full potential of anomaly detection technology.

Best practices for implementing anomaly detection


Successful anomaly detection implementation starts with clear optimization objectives. Define what an anomaly is within your specific context and establish measurable goals for your detection system. This helps in choosing appropriate algorithms and setting meaningful thresholds.

But identifying anomalies is just the beginning: What will you do when you find them? Your system should support multiple response mechanisms. Consider implementing:

- Dynamic threshold alerts that adapt to your changing environment

- Automated remediation systems that can take immediate corrective action

- Intelligent scaling limits that prevent runaway resource usage

- Customized notification workflows based on anomaly severity
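
A severity-based workflow can start as a simple mapping from how far an observation deviates from its expected value to a response tier. The tier names and cutoffs below are hypothetical placeholders; this sketch only handles increases, which fits cost spikes but not drops.

```python
def route_anomaly(expected, actual):
    """Map the relative increase over the expected value to a hypothetical response tier."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    deviation = (actual - expected) / expected
    if deviation >= 1.0:
        return "page-on-call"   # doubled or worse: needs a human now
    if deviation >= 0.5:
        return "chat-alert"     # significant: notify the owning team
    if deviation >= 0.2:
        return "daily-digest"   # worth a look, not urgent
    return "no-action"


print(route_anomaly(100, 250))  # page-on-call
print(route_anomaly(100, 125))  # daily-digest
```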

Regular system tuning and adaptation are crucial. Cloud environments evolve, and your anomaly detection system should evolve with them. This includes regular model retraining, any necessary threshold adjustments, predictive maintenance, and performance metric tracking.

Finally, you’ll want to establish clear response procedures for when anomalies are detected. There’s no point in having the fastest detection system if the response is slow or disorganized. Document escalation paths and automate common responses wherever possible.

Making cloud spend predictable with DoiT

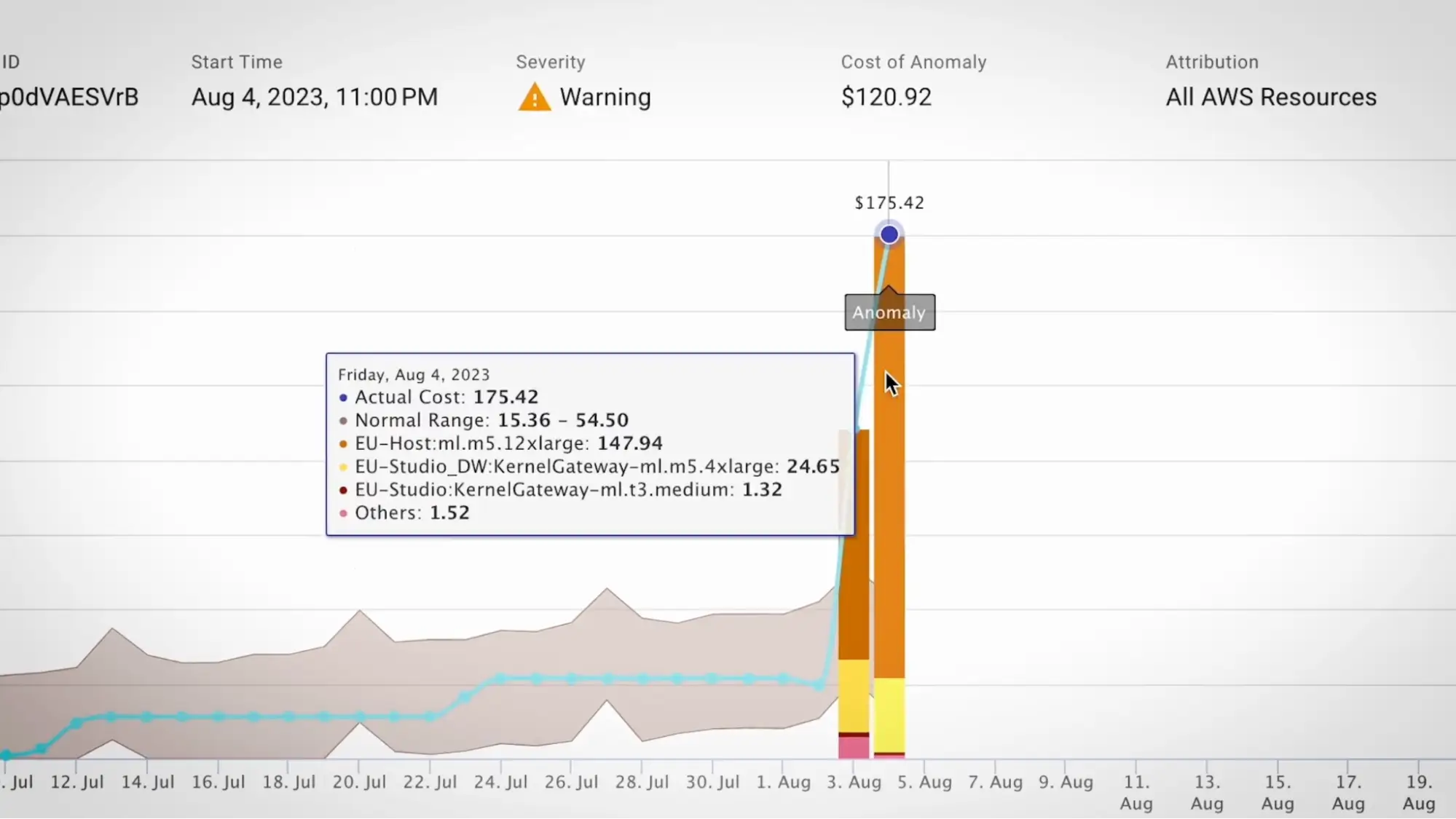

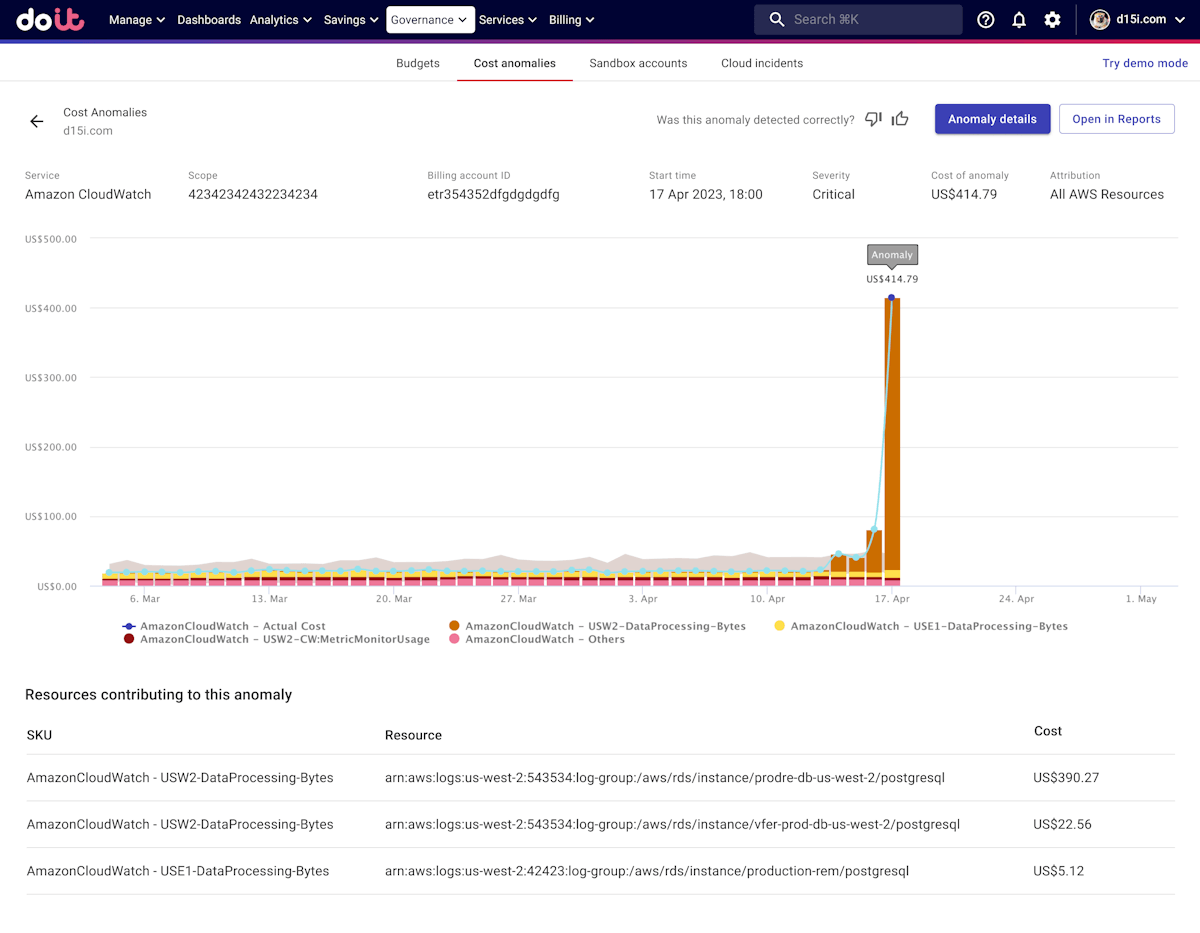

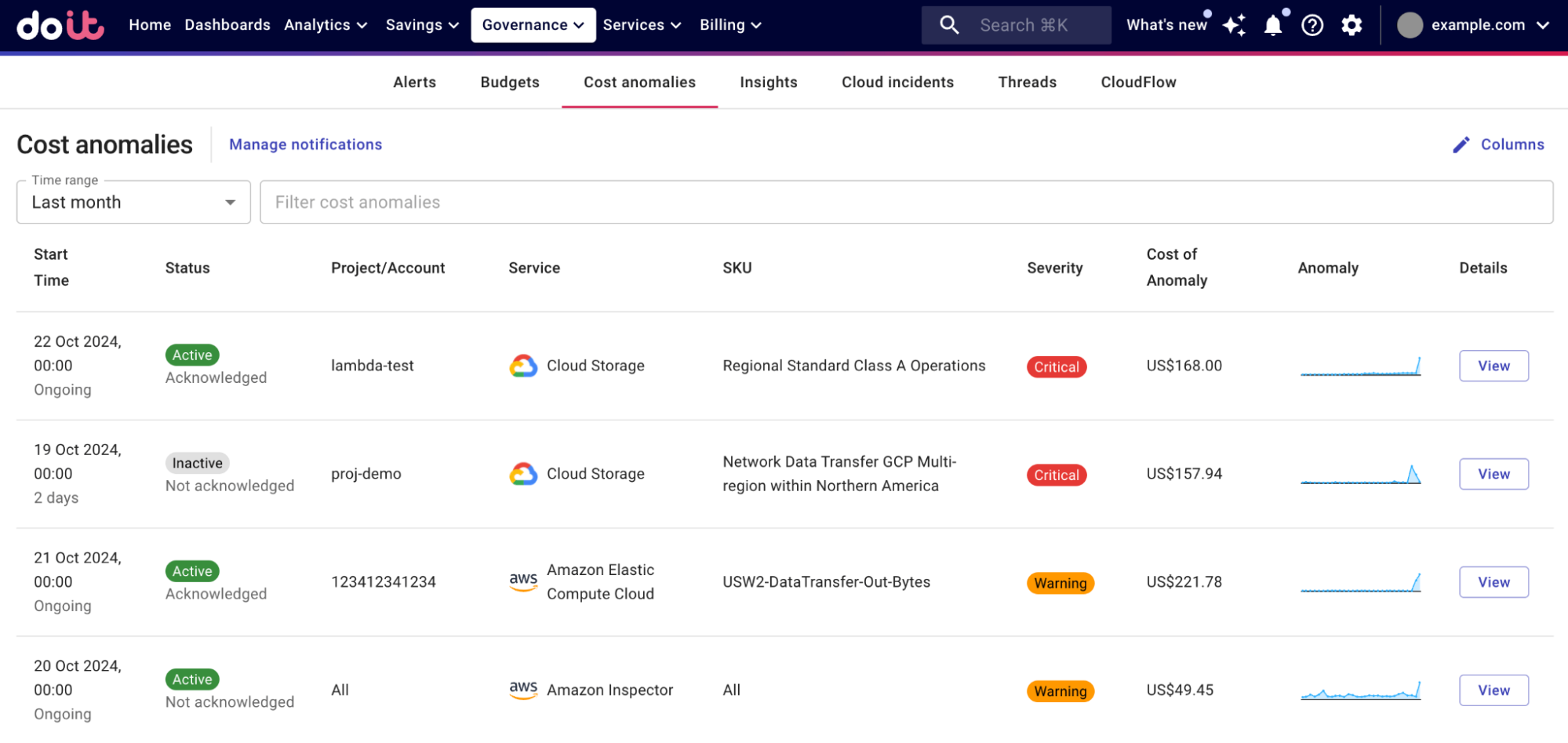

Anomaly Detection in DoiT Cloud Intelligence keeps an eye on your cloud spending, spotting unusual patterns automatically so you can avoid surprises before they hit your budget.

Our system combines sophisticated machine learning algorithms with deep cloud expertise to provide accurate, actionable anomaly detection. We understand that each organization’s cloud usage patterns are unique, and DoiT’s solution adapts to your specific environment and requirements.

Check out DoiT’s platform and pricing to make your cloud setup more predictable and cost-efficient.