Making sense of networks and equipment through IP Address Management (IPAM)

Recently I’ve noticed an increase in customers reporting challenges with their networking, namely peering, due to IP address range collisions. This is an obvious indication of a need to plan for and manage IP addresses throughout the organization.

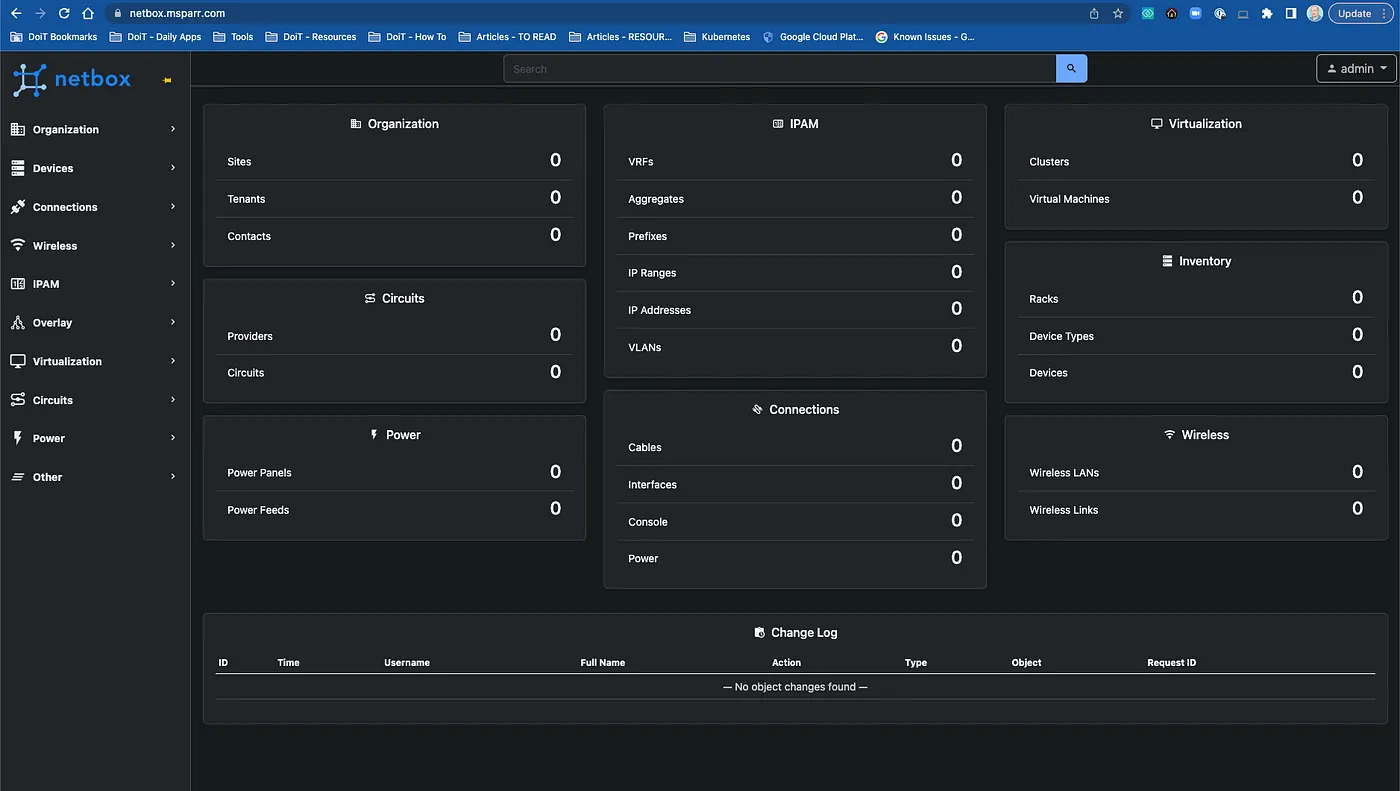

Although you can keep track of your IPs in a shared spreadsheet, there are also software tools available. This post demonstrates how to run a popular open source IP address management (IPAM) tool called Netbox in a cloud-native way on Google Cloud Platform (GCP).

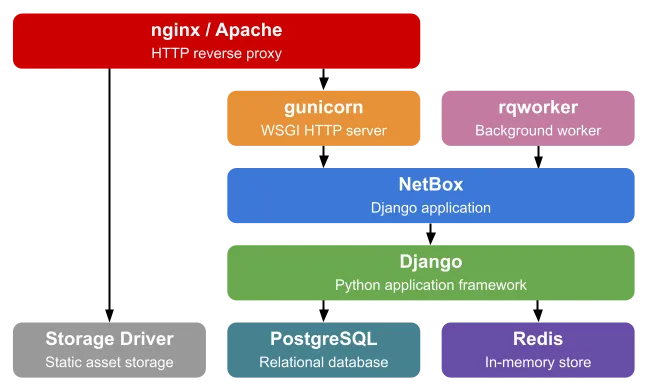

Traditional Stack

Historically, Netbox has been run on one or more virtual machines, fronted by a web server. There is a community-managed Docker image, but the only instructions are to run it using Docker Compose. Because this architecture resembles many applications that companies build or run, it’s a great candidate to illustrate how to migrate to public cloud.

Source: Netbox — standard Netbox installation

Cloud-native Design

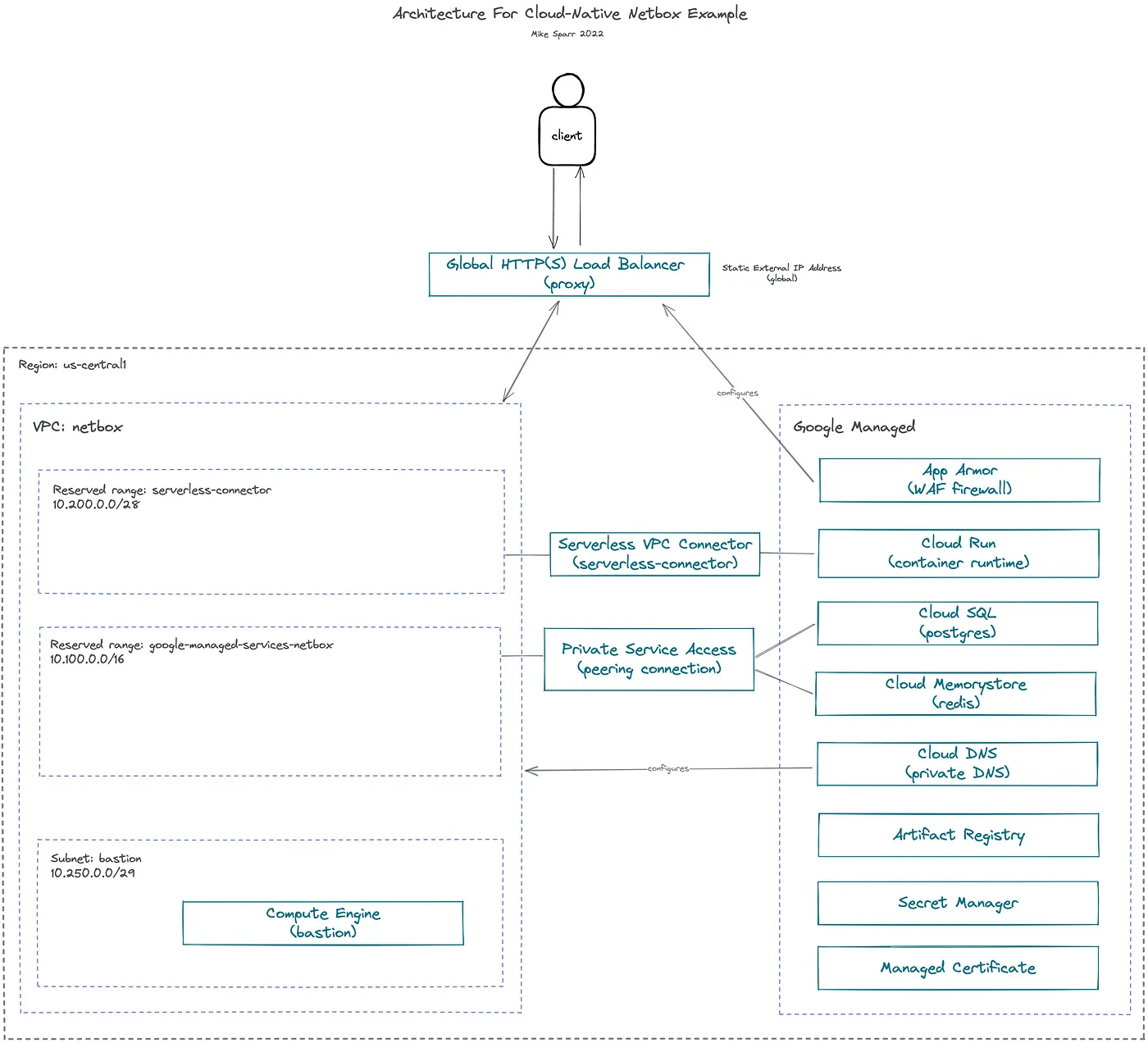

I decided to figure out how the Docker image works, its dependencies and configuration parameters, and deploy it instead to GCP using only managed services. This example can serve as an illustration of how you may either “move and improve” or “rip and replace” applications as you migrate to public cloud.

Revised Netbox installation on GCP using managed services

Application components

- Netbox application (Python app using Django framework)

- PostgreSQL database (Cloud SQL)

- Redis (Cloud Memorystore)

Design decisions

- Managed database and cache (Cloud SQL, Cloud Memorystore)

- Private-only IPs for databases and cache (Private Service Access)

- Private DNS for database hostnames (Cloud DNS)

- Secrets stored in a managed secret store (Secret Manager)

- Serverless container runtime (Cloud Run, Artifact Registry)

- Global load balancer with TLS (HTTP(S) Load Balancing, Managed Certificate)

- Web application firewall, or WAF (Cloud Armor)

Something I witness many orgs struggle with is connecting managed services, and serverless apps, over private IP addresses. This example illustrates how you reserve private ranges in your VPC network, and then assign them to the managed services, creating a connectivity bridge.
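Before reserving ranges, it helps to confirm that the candidates do not collide with each other. The following is a minimal sketch in plain Bash (no gcloud needed); the helper names `ip_to_int`, `cidr_bounds`, and `cidrs_overlap` are hypothetical and exist only for illustration, and the three ranges are the ones used by the script later in this post.

```shell
#!/usr/bin/env bash

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# Print "first last" (as integers) for a CIDR block such as 10.100.0.0/16.
cidr_bounds() {
  local ip=${1%/*} prefix=${1#*/}
  local base mask first last
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  first=$(( base & mask ))
  last=$(( first | (~mask & 0xFFFFFFFF) ))
  echo "$first $last"
}

# Return 0 if the two CIDR blocks overlap, 1 otherwise.
cidrs_overlap() {
  local a_first a_last b_first b_last
  read -r a_first a_last <<< "$(cidr_bounds "$1")"
  read -r b_first b_last <<< "$(cidr_bounds "$2")"
  (( a_first <= b_last && b_first <= a_last ))
}

# Ranges used in this post: bastion subnet, serverless connector,
# and the block reserved for managed services.
ranges=("10.250.0.0/29" "10.200.0.0/28" "10.100.0.0/16")
collisions=0
for ((i = 0; i < ${#ranges[@]}; i++)); do
  for ((j = i + 1; j < ${#ranges[@]}; j++)); do
    if cidrs_overlap "${ranges[i]}" "${ranges[j]}"; then
      echo "COLLISION: ${ranges[i]} overlaps ${ranges[j]}"
      collisions=$((collisions + 1))
    fi
  done
done
echo "collisions found: $collisions"
```

For these three ranges the check reports no collisions. Adding the ranges of any peered networks to the `ranges` array is the kind of check an IPAM tool automates for you.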

Cloud DNS is used to establish private hostnames for the apps to connect to databases. This offers more flexibility in the future if you ever change out databases, or need to fail over, because you can simply update your DNS records and the apps still point to the same hostname. In theory, I could have leveraged DNS forwarding and connected it all to my public domain, but internally it’s not required so I used example.com.
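To make the failover idea concrete, here is a hedged sketch of repointing a private A record at a replacement instance. The `run` and `repoint_record` helpers are hypothetical, the new IP address and the 300-second TTL are assumptions, and the commands are only printed unless you set `DRY_RUN=0`.

```shell
#!/usr/bin/env bash

# Print the command instead of running it unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Point an existing private A record at a new IP address.
# Arguments: <hostname label> <new IP>. The zone and suffix default to the
# values used in this post (private-zone, example.com).
repoint_record() {
  local label=$1 new_ip=$2
  local zone=${DNS_ZONE:-private-zone}
  local suffix=${DNS_SUFFIX:-example.com}
  run gcloud dns record-sets update "${label}.${suffix}." \
    --zone="$zone" \
    --type=A \
    --ttl=300 \
    --rrdatas="$new_ip"
}

# Example: repoint netbox-db at a (hypothetical) replacement instance IP.
repoint_record "netbox-db" "10.100.0.9"
```

Because the apps only know the hostname, nothing in the Cloud Run configuration has to change; a shorter TTL simply shortens how long clients keep the old address cached.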

I didn’t need the bastion (or jump host) VM, but I spun one up to test connections while building everything out. Normally a bastion would be deployed in a managed instance group (MIG) of size 1, without an external IP address.
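A production-style bastion along those lines might look like the sketch below. It is not what I deployed: the resource names (`bastion-template`, `bastion-mig`, `fw-allow-iap-ssh`) and the `run` helper are hypothetical, and the commands are only printed unless you set `DRY_RUN=0`. The source range 35.235.240.0/20 is the range Google documents for IAP TCP forwarding.

```shell
#!/usr/bin/env bash

# Print the command instead of running it unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

NETWORK="netbox"          # network name used in this post
SUBNET="bastion"          # bastion subnet used in this post
REGION="us-central1"
ZONE="us-central1-a"

# Instance template without an external IP address.
run gcloud compute instance-templates create bastion-template \
  --machine-type=e2-micro \
  --network="$NETWORK" \
  --subnet="$SUBNET" \
  --region="$REGION" \
  --no-address \
  --tags=allow-iap-ssh

# Managed instance group of size 1, so the bastion is recreated if it fails.
run gcloud compute instance-groups managed create bastion-mig \
  --template=bastion-template \
  --size=1 \
  --zone="$ZONE"

# Allow SSH only from the IAP TCP forwarding range.
run gcloud compute firewall-rules create fw-allow-iap-ssh \
  --network="$NETWORK" \
  --direction=ingress \
  --action=allow \
  --rules=tcp:22 \
  --source-ranges=35.235.240.0/20 \
  --target-tags=allow-iap-ssh
```

You would then connect with `gcloud compute ssh <instance> --tunnel-through-iap`. Keep in mind that a VM without an external IP needs something like Cloud NAT before it can install packages from the internet.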

Secure, Load-balanced Web Application

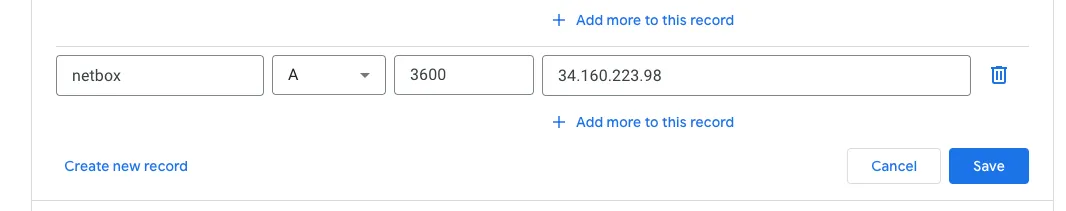

To best illustrate how everything fits together, I used a personal domain and registered an ‘A record’ for the static IP address I assigned to the global load balancer. A managed certificate was then provisioned automatically.

For added security, I applied a Cloud Armor (WAF) policy to the load balancer and restricted access to specific IP ranges (see below).

Implementation Code

The code below shows the step-by-step commands I used to set everything up, including networking, environment variables and secrets, databases, Artifact Registry and Docker images, Cloud Run, load balancing, and the WAF.

#!/usr/bin/env bash

#####################################################################

# REFERENCES

# - https://docs.netbox.dev/en/stable/installation/3-netbox/

# - https://github.com/netbox-community/netbox-docker/wiki/

# - https://hub.docker.com/r/netboxcommunity/netbox

# - https://cloud.google.com/sql/docs/postgres/configure-private-ip

# - https://cloud.google.com/sql/docs/postgres/create-instance

# - https://cloud.google.com/sql/docs/postgres/create-manage-databases#gcloud

# - https://cloud.google.com/sql/docs/postgres/create-manage-users#gcloud

# - https://cloud.google.com/memorystore/docs/redis/create-manage-instances#creating_a_redis_instance_with_a_specific_ip_address_range

# - https://cloud.google.com/artifact-registry/docs/docker/store-docker-container-images

# - https://cloud.google.com/artifact-registry/docs/docker/pushing-and-pulling

# - https://cloud.google.com/dns/docs/zones#create-private-zone

# - https://cloud.google.com/dns/docs/records

# - https://cloud.google.com/secret-manager/docs/configuring-secret-manager

# - https://cloud.google.com/secret-manager/docs/create-secret

# - https://cloud.google.com/run/docs/configuring/secrets#command-line

# - https://cloud.google.com/run/docs/configuring/connecting-vpc#gcloud

#####################################################################

export PROJECT_ID=$(gcloud config get-value project)

export PROJECT_USER=$(gcloud config get-value core/account) # set current user

export PROJECT_NUMBER=$(gcloud projects describe $PROJECT_ID --format="value(projectNumber)")

export IDNS=${PROJECT_ID}.svc.id.goog # workload identity domain

export GCP_REGION="us-central1" # CHANGEME (OPT)

export GCP_ZONE="us-central1-a" # CHANGEME (OPT)

export NETWORK_NAME="default"

# enable apis
gcloud services enable compute.googleapis.com \
  servicenetworking.googleapis.com \
  vpcaccess.googleapis.com \
  secretmanager.googleapis.com \
  sqladmin.googleapis.com \
  redis.googleapis.com \
  artifactregistry.googleapis.com \
  dns.googleapis.com \
  cloudbuild.googleapis.com \
  storage.googleapis.com \
  run.googleapis.com

# configure gcloud sdk

gcloud config set compute/region $GCP_REGION

gcloud config set compute/zone $GCP_ZONE

#############################################################

# NETWORKING

#############################################################

export NETBOX_NETWORK_NAME="netbox"

export NETBOX_RESERVED_RANGE_NAME="google-managed-services-netbox"

export SUBNET_BASTION_NAME="bastion"

export SUBNET_BASTION_RANGE="10.250.0.0/29"

export CONNECTOR_NAME="serverless-connector"

export CONNECTOR_RANGE="10.200.0.0/28"

export DNS_ZONE="private-zone"

export DNS_SUFFIX="example.com" # CHANGEME (OPT)

export DNS_LABELS="dept=networking" # CHANGEME (OPT)

# create network (custom-mode)
gcloud compute networks create $NETBOX_NETWORK_NAME \
  --subnet-mode=custom

# create bastion subnet
gcloud compute networks subnets create $SUBNET_BASTION_NAME \
  --region=$GCP_REGION \
  --network=$NETBOX_NETWORK_NAME \
  --range=$SUBNET_BASTION_RANGE

# allocate private range
gcloud compute addresses create $NETBOX_RESERVED_RANGE_NAME \
  --global \
  --purpose=VPC_PEERING \
  --addresses=10.100.0.0 \
  --prefix-length=16 \
  --network=projects/$PROJECT_ID/global/networks/$NETBOX_NETWORK_NAME

# create peering for managed services
gcloud services vpc-peerings connect \
  --service=servicenetworking.googleapis.com \
  --ranges=$NETBOX_RESERVED_RANGE_NAME \
  --network=$NETBOX_NETWORK_NAME

# create serverless vpc connector (for peering serverless to VPC)
gcloud compute networks vpc-access connectors create $CONNECTOR_NAME \
  --network $NETBOX_NETWORK_NAME \
  --region $GCP_REGION \
  --range $CONNECTOR_RANGE

# create private zone
gcloud dns managed-zones create $DNS_ZONE \
  --description="internal zone" \
  --dns-name=$DNS_SUFFIX \
  --networks=$NETBOX_NETWORK_NAME \
  --labels=$DNS_LABELS \
  --visibility=private

# firewall allow ssh
gcloud compute firewall-rules create fw-allow-ssh \
  --network=$NETBOX_NETWORK_NAME \
  --action=allow \
  --direction=ingress \
  --target-tags=allow-ssh \
  --rules=tcp:22

#############################################################

# NETBOX (ENV/SECRETS)

#############################################################

export SECRET_ID="netbox-secrets"

export SECRET_VERSION=1

export SECRET_FILE=".env-local"

export ENV_FILE="netbox.env"

# individual

export SECRET_DB_PASS="db_password"

export SECRET_REDIS_PASS="redis_password"

export SECRET_REDIS_CACHE_PASS="redis_cache_password"

export SECRET_SU_PASS="superuser_password"

export SECRET_EMAIL_PASS="email_password"

export SECRET_SECRET_KEY="secret_key"

# fetch secret values from local .env file

source $SECRET_FILE

# names referenced by the env file (set again in the database and cache sections)
export POSTGRES_INSTANCE="netbox-db"
export POSTGRES_PORT=5432
export REDIS_INSTANCE="netbox-cache"

# save file with injected values
cat > $ENV_FILE << EOF
# required
ALLOWED_HOSTS="*"
DB_HOST=$POSTGRES_INSTANCE.$DNS_SUFFIX
DB_PORT=$POSTGRES_PORT
DB_NAME=netbox
DB_USER=netbox
REDIS_CACHE_DATABASE=1
REDIS_CACHE_HOST=$REDIS_INSTANCE.$DNS_SUFFIX
REDIS_CACHE_INSECURE_SKIP_TLS_VERIFY=false
REDIS_CACHE_SSL=false
REDIS_DATABASE=0
REDIS_HOST=$REDIS_INSTANCE.$DNS_SUFFIX
REDIS_INSECURE_SKIP_TLS_VERIFY=false
REDIS_SSL=false
EOF

# add config to env

source $ENV_FILE

# create secret for all vars
gcloud secrets create $SECRET_ID --replication-policy="automatic"
gcloud secrets versions add $SECRET_ID --data-file=${PWD}/$SECRET_FILE # version 1

# create env secrets (values come from the sourced $SECRET_FILE)
echo -n "$DB_PASSWORD" | gcloud secrets create $SECRET_DB_PASS \
  --replication-policy="automatic" \
  --data-file=-

# the redis and redis_cache secrets are created later,
# once the Redis instance exists and its auth string is available

echo -n "$SUPERUSER_PASSWORD" | gcloud secrets create $SECRET_SU_PASS \
  --replication-policy="automatic" \
  --data-file=-

echo -n "$EMAIL_PASSWORD" | gcloud secrets create $SECRET_EMAIL_PASS \
  --replication-policy="automatic" \
  --data-file=-

echo -n "$SECRET_KEY" | gcloud secrets create $SECRET_SECRET_KEY \
  --replication-policy="automatic" \
  --data-file=-

#############################################################

# DATABASE (POSTGRES)

#############################################################

export POSTGRES_INSTANCE="netbox-db"

export POSTGRES_VERSION="POSTGRES_14"

export POSTGRES_TIER="db-f1-micro"

export POSTGRES_PORT=5432

gcloud beta sql instances create $POSTGRES_INSTANCE \
  --database-version=$POSTGRES_VERSION \
  --tier=$POSTGRES_TIER \
  --network=projects/$PROJECT_ID/global/networks/$NETBOX_NETWORK_NAME \
  --no-assign-ip \
  --allocated-ip-range-name=$NETBOX_RESERVED_RANGE_NAME \
  --region=$GCP_REGION

# get internal IP
export POSTGRES_HOST=$(gcloud beta sql instances describe $POSTGRES_INSTANCE --format="value(ipAddresses.ipAddress)")

# add to private-zone DNS
gcloud dns record-sets transaction start --zone=$DNS_ZONE
gcloud dns record-sets transaction add $POSTGRES_HOST \
  --name="$POSTGRES_INSTANCE.$DNS_SUFFIX" --ttl="3600" --type="A" --zone=$DNS_ZONE
gcloud dns record-sets transaction execute --zone=$DNS_ZONE

# lock down postgres (admin) user [manually input at prompt]
gcloud sql users set-password postgres \
  --instance=$POSTGRES_INSTANCE \
  --prompt-for-password

# create netbox user
gcloud sql users create $DB_USER \
  --instance=$POSTGRES_INSTANCE \
  --password=$DB_PASSWORD

# create database
gcloud sql databases create $DB_NAME \
  --instance=$POSTGRES_INSTANCE

#############################################################

# CACHE (REDIS)

#############################################################

export REDIS_INSTANCE="netbox-cache"

export REDIS_VERSION="redis_6_x"

gcloud redis instances create $REDIS_INSTANCE \
  --size=1 \
  --tier=STANDARD \
  --region=$GCP_REGION \
  --network=$NETBOX_NETWORK_NAME \
  --reserved-ip-range=$NETBOX_RESERVED_RANGE_NAME \
  --connect-mode=PRIVATE_SERVICE_ACCESS \
  --redis-version=$REDIS_VERSION \
  --enable-auth

# get internal IP
export REDIS_HOST=$(gcloud redis instances describe $REDIS_INSTANCE --region $GCP_REGION --format="value(host)")
export REDIS_PORT=$(gcloud redis instances describe $REDIS_INSTANCE --region $GCP_REGION --format="value(port)")

# get auth string
export REDIS_PASSWORD=$(gcloud beta redis instances get-auth-string $REDIS_INSTANCE --region $GCP_REGION --format="value(authString)")

# add to private-zone DNS
gcloud dns record-sets transaction start --zone=$DNS_ZONE
gcloud dns record-sets transaction add $REDIS_HOST \
  --name="$REDIS_INSTANCE.$DNS_SUFFIX" --ttl="3600" --type="A" --zone=$DNS_ZONE
gcloud dns record-sets transaction execute --zone=$DNS_ZONE

# add secrets to secret manager (same auth string for both redis and redis_cache)
echo -n "$REDIS_PASSWORD" | gcloud secrets create $SECRET_REDIS_PASS \
  --replication-policy="automatic" \
  --data-file=-

echo -n "$REDIS_PASSWORD" | gcloud secrets create $SECRET_REDIS_CACHE_PASS \
  --replication-policy="automatic" \
  --data-file=-

#############################################################

# COMPUTE (TEST BASTION)

# - NOTE: if real bastion, create in managed instance group size=1

# - NOTE: if real bastion, no external IP and use IAP tunnel only

#############################################################

export BASTION_NAME="bastion-1"

# create compute instance to test private connectivity to the database and cache
gcloud compute instances create $BASTION_NAME \
  --machine-type e2-micro \
  --zone $GCP_ZONE \
  --network $NETBOX_NETWORK_NAME \
  --subnet $SUBNET_BASTION_NAME \
  --tags allow-ssh

# install netcat

gcloud compute ssh $BASTION_NAME --zone $GCP_ZONE -- sudo apt-get update

gcloud compute ssh $BASTION_NAME --zone $GCP_ZONE -- sudo apt-get -y install netcat

# test internal DNS for database (IP may vary)

gcloud compute ssh $BASTION_NAME --zone $GCP_ZONE -- nc -zv $POSTGRES_INSTANCE.$DNS_SUFFIX $POSTGRES_PORT

# Connection to netbox-db.example.com (10.100.0.5) 5432 port [tcp/postgresql] succeeded!

# test internal DNS for cache (IP may vary)

gcloud compute ssh $BASTION_NAME --zone $GCP_ZONE -- nc -zv $REDIS_INSTANCE.$DNS_SUFFIX $REDIS_PORT

# Connection to netbox-cache.example.com (10.100.1.4) 6379 port [tcp/redis] succeeded!

#############################################################

# ARTIFACT REGISTRY

# - WARNING: arm architecture on Mac will produce non-runnable image

# run pull / tag / push commands from your temp bastion

#############################################################

export REPO_NAME="netbox-repo"

export NETBOX_IMAGE="netboxcommunity/netbox:v3.4-beta1-2.3.0"

export IMAGE_NAME="netbox"

export TAG_NAME="v3.4-beta1-2.3.0"

export IMAGE_PATH=$GCP_REGION-docker.pkg.dev/$PROJECT_ID/$REPO_NAME/$IMAGE_NAME:$TAG_NAME

gcloud artifacts repositories create $REPO_NAME \
  --repository-format=docker \
  --location=$GCP_REGION \
  --description="Docker repository"

# configure auth
gcloud auth configure-docker ${GCP_REGION}-docker.pkg.dev

# fetch community netbox image (pinned tag)
docker pull $NETBOX_IMAGE

# tag image for artifact registry
docker tag $NETBOX_IMAGE $IMAGE_PATH

# push image to artifact registry
docker push $IMAGE_PATH

#############################################################

# NETBOX (CLOUD RUN)

#############################################################

export SERVICE_NAME="netbox"

export SECRET_PATH="env/$SECRET_FILE" # as config in docker-compose.yaml

export SA_EMAIL="${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" # default compute service account

# add compute service account access to secrets
# - NOTE: best practice to create separate service account to run each workload
# - the first entry is the combined secret; the rest are the individual secrets
for SECRET_NAME in \
  $SECRET_ID \
  $SECRET_DB_PASS \
  $SECRET_REDIS_PASS \
  $SECRET_REDIS_CACHE_PASS \
  $SECRET_SU_PASS \
  $SECRET_EMAIL_PASS \
  $SECRET_SECRET_KEY
do
  gcloud secrets add-iam-policy-binding $SECRET_NAME \
    --member="serviceAccount:$SA_EMAIL" \
    --role="roles/secretmanager.secretAccessor"
done

# deploy cloud run service (default port 8080) config from env
gcloud run deploy $SERVICE_NAME \
  --platform managed \
  --no-cpu-throttling \
  --allow-unauthenticated \
  --vpc-connector $CONNECTOR_NAME \
  --ingress=internal-and-cloud-load-balancing \
  --region $GCP_REGION \
  --image $IMAGE_PATH \
  --set-env-vars "ALLOWED_HOSTS=$ALLOWED_HOSTS" \
  --set-env-vars "DB_HOST=$POSTGRES_INSTANCE.$DNS_SUFFIX" \
  --set-env-vars "DB_PORT=$POSTGRES_PORT" \
  --set-env-vars "DB_NAME=$DB_NAME" \
  --set-env-vars "DB_USER=$DB_USER" \
  --set-env-vars "REDIS_HOST=$REDIS_INSTANCE.$DNS_SUFFIX" \
  --set-env-vars "REDIS_PORT=$REDIS_PORT" \
  --set-env-vars "REDIS_DATABASE=$REDIS_DATABASE" \
  --set-env-vars "REDIS_CACHE_HOST=$REDIS_INSTANCE.$DNS_SUFFIX" \
  --set-env-vars "REDIS_CACHE_PORT=$REDIS_PORT" \
  --set-env-vars "REDIS_CACHE_DATABASE=$REDIS_CACHE_DATABASE" \
  --update-secrets=DB_PASSWORD=$SECRET_DB_PASS:$SECRET_VERSION \
  --update-secrets=REDIS_PASSWORD=$SECRET_REDIS_PASS:$SECRET_VERSION \
  --update-secrets=REDIS_CACHE_PASSWORD=$SECRET_REDIS_CACHE_PASS:$SECRET_VERSION \
  --update-secrets=SUPERUSER_PASSWORD=$SECRET_SU_PASS:$SECRET_VERSION \
  --update-secrets=EMAIL_PASSWORD=$SECRET_EMAIL_PASS:$SECRET_VERSION \
  --update-secrets=SECRET_KEY=$SECRET_SECRET_KEY:$SECRET_VERSION \
  --set-env-vars "DB_WAIT_DEBUG=1"

##########################################################

# Load Balancer

##########################################################

export APP_NAME=$SERVICE_NAME

export TLD="msparr.com" # OVERRIDE PRIOR - CHANGE ME TO DESIRED DOMAIN

export DOMAIN="$SERVICE_NAME.$TLD" # netbox.msparr.com

export EXT_IP_NAME="public-ip"

export BACKEND_SERVICE_NAME="$APP_NAME-service"

export SERVERLESS_NEG_NAME="$APP_NAME-neg"

# create static IP address

gcloud compute addresses create --global $EXT_IP_NAME

# wait 10 seconds and then set var

export EXT_IP=$(gcloud compute addresses describe $EXT_IP_NAME --global --format="value(address)")

echo "Remember to add DNS 'A' record [$DOMAIN] for IP [$EXT_IP]"

# create serverless NEG
gcloud compute network-endpoint-groups create $SERVERLESS_NEG_NAME \
  --region=$GCP_REGION \
  --network-endpoint-type=serverless \
  --cloud-run-service=$SERVICE_NAME

# create backend service
gcloud compute backend-services create $BACKEND_SERVICE_NAME \
  --load-balancing-scheme=EXTERNAL \
  --global

# add serverless NEG to backend service
gcloud compute backend-services add-backend $BACKEND_SERVICE_NAME \
  --network-endpoint-group=$SERVERLESS_NEG_NAME \
  --network-endpoint-group-region=$GCP_REGION \
  --global

# create URL map
gcloud compute url-maps create $APP_NAME-url-map \
  --default-service $BACKEND_SERVICE_NAME

# create managed SSL cert
gcloud beta compute ssl-certificates create $APP_NAME-cert \
  --domains $DOMAIN

# create target HTTPS proxy
gcloud compute target-https-proxies create $APP_NAME-https-proxy \
  --ssl-certificates=$APP_NAME-cert \
  --url-map=$APP_NAME-url-map

# create forwarding rule on the static IP
gcloud compute forwarding-rules create $APP_NAME-fwd-rule \
  --target-https-proxy=$APP_NAME-https-proxy \
  --global \
  --ports=443 \
  --address=$EXT_IP_NAME

# verify app is running (wait 10-15 minutes until cert provisions)

curl -k "https://$DOMAIN" # Unauthorized request

##########################################################

# [OPTIONAL] Restrict Traffic with Cloud Armor security policy

# - https://cloud.google.com/armor/docs/configure-security-policies#gcloud

##########################################################

export INTERNAL_POLICY_NAME="internal-users-policy"

export ALLOWED_CIDR="192.168.0.0/24" # CHANGE ME TO DESIRED IP RANGE

# create policies
gcloud compute security-policies create $INTERNAL_POLICY_NAME \
  --description "policy for internal test users"

# update default rules
gcloud compute security-policies rules update 2147483647 \
  --security-policy $INTERNAL_POLICY_NAME \
  --action "deny-502"

# restrict traffic to desired IP ranges
gcloud compute security-policies rules create 1000 \
  --security-policy $INTERNAL_POLICY_NAME \
  --description "allow traffic from $ALLOWED_CIDR" \
  --src-ip-ranges "$ALLOWED_CIDR" \
  --action "allow"

# attach policy to backend service (one at a time)
gcloud compute backend-services update $BACKEND_SERVICE_NAME \
  --security-policy $INTERNAL_POLICY_NAME \
  --global

Additional Complexity And Considerations

One of the reasons I chose the Netbox application to illustrate how to modernize and deploy applications in a cloud-native way is its technical complexity. The application relies on the file system, sessions, background workers, and even a daily cron cleanup job.

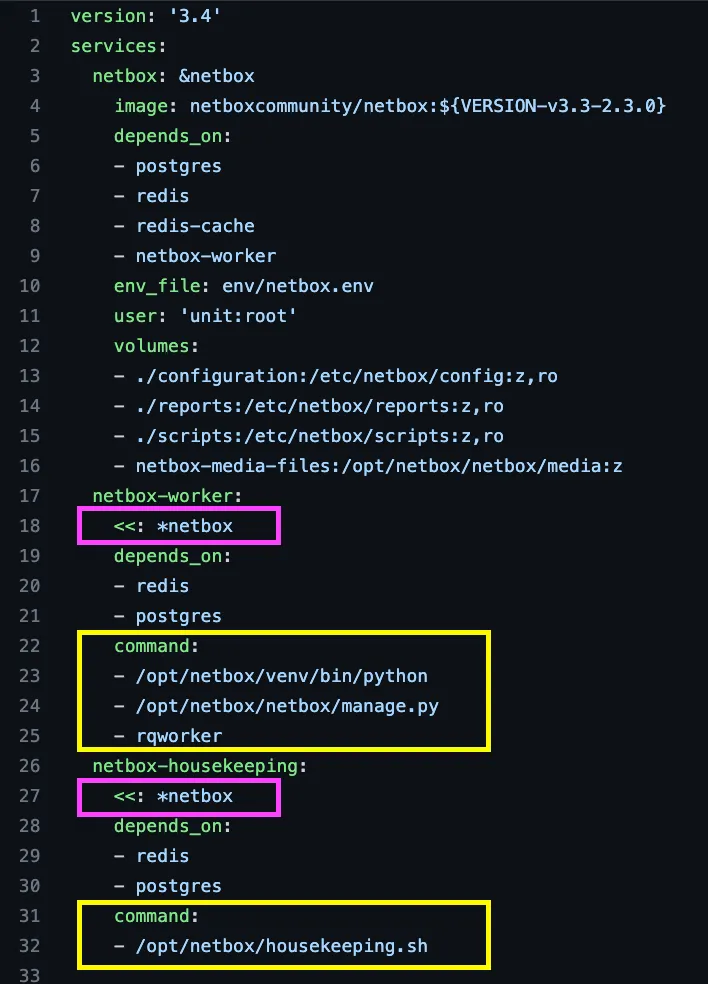

Docker Compose snippet for Netbox application (note netbox-worker and netbox-housekeeping)

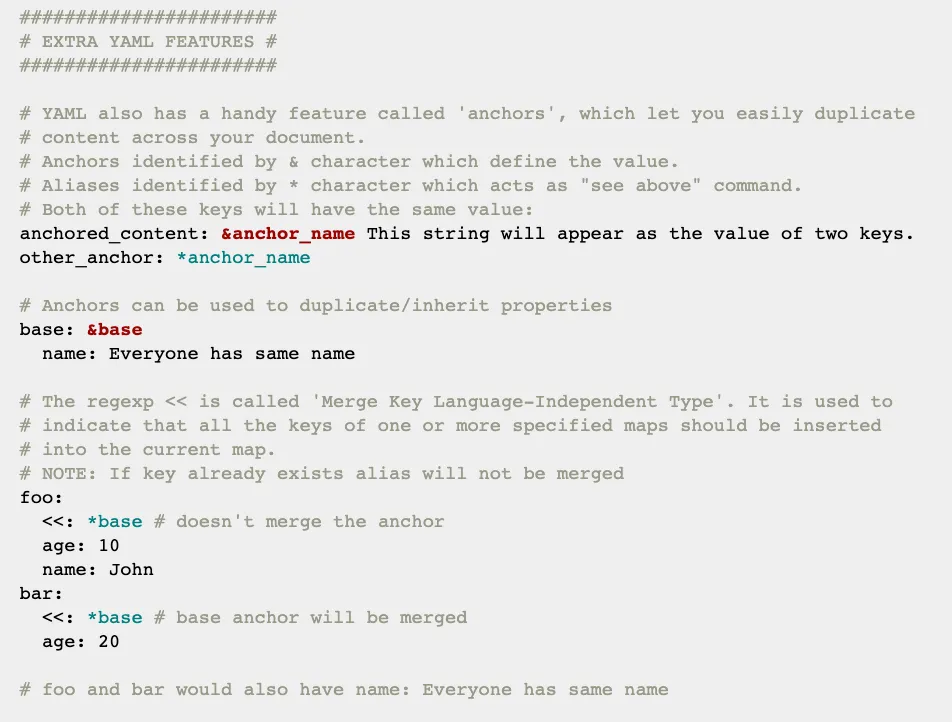

The docker-compose.yaml file snippet above illustrates a feature of YAML called anchors, which is not specific to Docker Compose.

Anchors let you reuse a configuration block succinctly, and then override the command so each copy runs a different script at runtime.
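In case the screenshot is hard to read, the sketch below writes out a simplified, hypothetical compose fragment (not the actual netbox-docker file) that shows the anchor pattern. The service names and commands mirror the ones discussed in this post; the temporary file path is just for the demo.

```shell
#!/usr/bin/env bash

# Write a simplified, hypothetical compose fragment to a temp file.
# "&netbox" names the base service configuration; "<<: *netbox" merges it
# into another service, which then overrides only the command.
example_file=$(mktemp)
cat > "$example_file" << 'EOF'
services:
  netbox: &netbox
    image: netboxcommunity/netbox:v3.4-beta1-2.3.0
    env_file: env/netbox.env
  netbox-worker:
    <<: *netbox
    command: ["/opt/netbox/venv/bin/python", "/opt/netbox/netbox/manage.py", "rqworker"]
  netbox-housekeeping:
    <<: *netbox
    command: ["/opt/netbox/housekeeping.sh"]
EOF

# Count how many services reuse the anchored base configuration.
reuse_count=$(grep -c '<<: \*netbox' "$example_file")
echo "services reusing the base config: $reuse_count"
```

Each service that merges the anchor inherits the image and environment of the base service, which is the same "duplicate, then override the command" idea applied to Cloud Run in the next sections.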

Recreating workers on Cloud Run

To recreate this type of functionality on Cloud Run, you can add the --command and --args flags to the deploy command. I would simply duplicate the commands used to deploy the main application, change the name, and override the container command to execute a different entrypoint script, like below:

gcloud run deploy $WORKER_NAME \
  --platform managed \
  --allow-unauthenticated \
  --vpc-connector $CONNECTOR_NAME \
  --ingress=internal-and-cloud-load-balancing \
  --region $GCP_REGION \
  --image $IMAGE_PATH \
  --set-env-vars "ALLOWED_HOSTS=$ALLOWED_HOSTS" \
  ... \
  --command "/opt/netbox/venv/bin/python" \
  --args "/opt/netbox/netbox/manage.py,rqworker"

Daily housekeeping job using Cloud Run and Cloud Scheduler

The daily housekeeping job can be run by creating a duplicate service on Cloud Run, and then using Cloud Scheduler to invoke that service once a day:

gcloud run deploy $HOUSEKEEPING_NAME \
  --platform managed \
  --allow-unauthenticated \
  --vpc-connector $CONNECTOR_NAME \
  --ingress=internal-and-cloud-load-balancing \
  --region $GCP_REGION \
  --image $IMAGE_PATH \
  --set-env-vars "ALLOWED_HOSTS=$ALLOWED_HOSTS" \
  ... \
  --command "/opt/netbox/housekeeping.sh"

After deploying the housekeeping service, we would enable the Cloud Scheduler API:

gcloud services enable cloudscheduler.googleapis.com

We then create a service account, grant it invoker permissions, and create the scheduled job:

# fetch the service URL
export SVC_URL=$(gcloud run services describe $HOUSEKEEPING_NAME \
  --platform managed --region $GCP_REGION --format="value(status.url)")

#########################################################
# create cloud scheduler job
#########################################################
export SA_NAME="cloud-scheduler-runner"
export SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"

# create service account
gcloud iam service-accounts create $SA_NAME \
  --display-name "${SA_NAME}"

# add sa binding to cloud run app
gcloud run services add-iam-policy-binding $HOUSEKEEPING_NAME \
  --platform managed \
  --region $GCP_REGION \
  --member=serviceAccount:$SA_EMAIL \
  --role=roles/run.invoker

# create the job to invoke service every day at 2:30 AM
gcloud scheduler jobs create http housekeeping-job --schedule "30 2 * * *" \
  --http-method=GET \
  --uri=$SVC_URL \
  --oidc-service-account-email=$SA_EMAIL \
  --oidc-token-audience=$SVC_URL

File systems

My goal was to prove you can split out a complex application like Netbox and deploy to the cloud using Cloud Run and other managed services. This may not be the best solution for this particular app, but it is possible.

If you need to leverage the file system, the serverless platforms are currently limited, and you may instead want to run on Kubernetes Engine, or even just a Compute Engine VM. Compute Engine can deploy a container directly onto a VM, which is very slick, and you can then attach disks/volumes as necessary.

One trick to give you a simple “file system” in Cloud Run, however, is to mount a Secret Manager secret as a file, as I did in the example code and in the snippet below.

# create secret for all vars
gcloud secrets create $SECRET_ID --replication-policy="automatic"
gcloud secrets versions add $SECRET_ID --data-file=${PWD}/$SECRET_FILE

# mount file path in cloud run
gcloud run deploy $SERVICE_NAME --image $IMAGE_PATH \
  --update-secrets="/env/netbox.env"=$SECRET_ID:$SECRET_VERSION

Best practice: separate service accounts

Although the examples I shared illustrated a separate service account for the Cloud Scheduler add-on, the best practice would be to create separate service accounts for each service, and the bastion (VM), and assign only the minimum IAM roles each needs. This adheres to the principle of least privilege.

For the Cloud Run service, we should create a separate “netbox-runner” service account, and then grant it only necessary roles such as:

- Cloud SQL Client

- Redis Viewer

- Secret Accessor

- Artifact Registry Reader

- Service Account User

- Storage Object Viewer
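A minimal sketch of that setup follows. The role IDs are the predefined roles I assume correspond to the list above, `my-project` is a placeholder project ID, and the `run` helper is hypothetical; commands are only printed unless you set `DRY_RUN=0`.

```shell
#!/usr/bin/env bash

# Print the command instead of running it unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

SA_NAME="netbox-runner"
PROJECT_ID="${PROJECT_ID:-my-project}"   # placeholder project ID
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"

# Predefined role IDs assumed to match the roles listed above.
ROLES=(
  roles/cloudsql.client
  roles/redis.viewer
  roles/secretmanager.secretAccessor
  roles/artifactregistry.reader
  roles/iam.serviceAccountUser
  roles/storage.objectViewer
)

# Create the dedicated runtime service account.
run gcloud iam service-accounts create "$SA_NAME" --display-name "$SA_NAME"

# Grant each role to the service account at the project level.
for role in "${ROLES[@]}"; do
  run gcloud projects add-iam-policy-binding "$PROJECT_ID" \
    --member="serviceAccount:$SA_EMAIL" \
    --role="$role"
done
```

The service would then run as this identity by adding `--service-account $SA_EMAIL` to the `gcloud run deploy` command. Where a role can be bound on an individual resource (a single secret, for example) instead of the whole project, that is the tighter option.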

Summary

I hope this example illustrates how you can modernize existing applications, and leverage managed services on the public cloud. If you are just looking to get Netbox up and running, then the code snippets above should do the trick, but you also might consider running it on a VM or Kubernetes.

You could also convert the working example to Terraform using 3rd-party solutions like Terraformer, or even GCP’s own bulk export tools, that can reverse-engineer your existing infra and generate Terraform code.

If your organization is facing similar challenges with IP collisions as you configure your networks, then perhaps it’s time for you to practice IPAM either with a shared spreadsheet, or a popular tool like Netbox.

Happy clouding!
