Or what to do when Google Application Default Credentials break on you

Authentication on Google Cloud… it just works, right? You spin up a new VM, install gcloud on it, and, like magic, you can access your Google Cloud Storage buckets.

One of the great promises of Google Cloud is indeed to make authentication simple. Just obtain what they call “Application Default Credentials” (ADC) and it will work everywhere: it uses your user credentials on your dev machine and service account credentials when running on Google Cloud. In that respect it works, and works well.

As long as you stay within Google Cloud and don’t try to access other Google services, ADC fulfills its promise. But two words break this fairy tale: SCOPE and SUBJECT.

What are Scopes?

I’m certain you’ve heard this term before. These are URLs like the below with no real website behind them :)

https://www.googleapis.com/auth/cloud-platform

https://www.googleapis.com/auth/compute.readonly

They are OAuth 2.0 scopes that configure coarse-grained access control to GCP and other Google APIs (Drive, G Suite directory, etc.). Here is the full list.

Back in the early days of Google Cloud, we didn’t have the flexible IAM system we have today for fine-grained permission control. Google Cloud only had what they call the “primitive” roles of Viewer, Editor, and Owner, and relied on scopes to limit what your VMs could do.

For instance, if you wanted to launch a VM that needed to write data to Cloud Storage and list (as in “discover”) other VMs, your action plan would be:

- Launch the VM under a service account with the Editor role on your project (since you want to write to Cloud Storage, Viewer will not suffice)

- Specify explicit scopes when launching the VM: compute.readonly and devstorage.read_write (does anyone know why it is called *dev*storage?)

Google quickly realized that scope-based access control is too coarse and hence IAM permissions came to reign, obsoleting scopes in most cases. In fact, when you launch a VM today with a custom service account the scopes default just to cloud-platform — read “full access to all of GCP” — but that doesn’t mean anything yet because the actual access is controlled by IAM permissions granted to your VM’s service account.
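The interplay between scopes and IAM can be pictured as two independent gates that a request must pass. Below is a toy model of that idea, not a Google API: the function name, the constants, and the "accepted scopes" subset are made up for illustration.

```python
CLOUD_PLATFORM = "https://www.googleapis.com/auth/cloud-platform"
STORAGE_RW = "https://www.googleapis.com/auth/devstorage.read_write"
COMPUTE_RO = "https://www.googleapis.com/auth/compute.readonly"

# Scopes accepted for writing an object to Cloud Storage (illustrative subset).
WRITE_OBJECT_SCOPES = {CLOUD_PLATFORM, STORAGE_RW}


def is_allowed(token_scopes, accepted_scopes, granted_permissions, needed_permission):
    """Toy model: a call goes through only if BOTH gates let it pass."""
    # Gate 1: the token must carry at least one scope the API method accepts.
    scope_ok = bool(set(token_scopes) & set(accepted_scopes))
    # Gate 2: IAM must grant the service account the needed permission.
    iam_ok = needed_permission in granted_permissions
    return scope_ok and iam_ok


# Broad scope, but IAM grants nothing relevant: denied.
print(is_allowed([CLOUD_PLATFORM], WRITE_OBJECT_SCOPES,
                 set(), "storage.objects.create"))
# Broad scope plus the IAM permission: allowed.
print(is_allowed([CLOUD_PLATFORM], WRITE_OBJECT_SCOPES,
                 {"storage.objects.create"}, "storage.objects.create"))
# IAM permission present, but the VM got a too-narrow scope: denied.
print(is_allowed([COMPUTE_RO], WRITE_OBJECT_SCOPES,
                 {"storage.objects.create"}, "storage.objects.create"))
```

With cloud-platform as the default scope, the first gate is almost always open for GCP APIs, which is why IAM ends up doing the real work there.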

When would you care about scopes?

Coming from the GCP ecosystem, chances are that you first encountered scopes when you tried to access a Google service that is not part of GCP, that is, an API not covered by the cloud-platform scope, such as when you want to read a spreadsheet from your Google Drive.

To access Google Drive, your app credentials need to have a Drive scope, such as https://www.googleapis.com/auth/drive.

To recap: if you use a Google API that is not part of GCP, you need to account for scopes when provisioning credentials.

Specifying scopes

If you are a Pythonista, you typically use:

    import google.auth
    creds, project = google.auth.default()

and it just works. But now, you need to have additional scopes and this is where it becomes hairy.

GCE and GKE

With Compute Engine and Kubernetes Engine, you are relatively lucky: you can specify scopes during VM/node pool creation, though only when using gcloud (look for the --scopes option). The web console either hides scopes completely if you use your own service account, or only lets you adjust GCP-related scopes otherwise.

Here is a short CLI demo:

    gcloud compute instances create instance-1 ... \
        --scopes=cloud-platform,https://www.googleapis.com/auth/drive

    gcloud compute ssh instance-1 -- python3
    >>> import google.auth
    >>> creds, project = google.auth.default()
    >>> from google.auth.transport import requests
    >>> creds.refresh(requests.Request())
    >>> creds.scopes
    ['https://www.googleapis.com/auth/cloud-platform', 'https://www.googleapis.com/auth/drive']

I say you are “relatively lucky” with GCE and GKE because scopes can only be specified during creation. It’s less of a problem with a single VM, but it may be a pain with GKE, where you may find yourself redeploying a whole node pool just to add a scope.

The end game is that your code doesn’t change but you have to apply some DevOps practices in advance.

Regardless, there is a way to obtain credentials with scopes that are not white-listed to the VM in advance which I describe towards the end.

Google App Engine

App Engine is one of the oldest GCP services and is a true serverless framework that in many aspects was ahead of its time. However, with great heritage comes plenty of legacy restrictions. One of them is that all App Engine apps have their scopes fixed to just cloud-platform and there is no easy way out of this.

One workaround is to have the default App Engine service account impersonate another service account, or even itself, requesting new scopes along the way. Here are the official Google Cloud docs on the subject. Let’s see how it looks in Python:

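    import google.auth
    from google.auth import impersonated_credentials as ic
    from googleapiclient.discovery import build

    SCOPES = ["https://www.googleapis.com/auth/drive"]
    svcacc = "test@my-project-id.iam.gserviceaccount.com"

    # Start from whatever ADC gives us (the App Engine default service account)
    adc, _ = google.auth.default()
    # Exchange it for credentials of the target account with the scopes we need
    creds = ic.Credentials(source_credentials=adc,
                           target_principal=svcacc,
                           target_scopes=SCOPES)
    service = build("sheets", "v4", credentials=creds,
                    cache_discovery=False)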

Pay attention to the cache_discovery=False argument; it’s necessary due to a bug. For the above to work, you also need to grant the App Engine default service account of your project the Token Creator role on your target service account:

    gcloud iam service-accounts add-iam-policy-binding \
        test@${PRJ_ID}.iam.gserviceaccount.com \
        --member="serviceAccount:${PRJ_ID}@appspot.gserviceaccount.com" \
        --role=roles/iam.serviceAccountTokenCreator
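To make the impersonation step less magical: it boils down to a single call to the IAM Credentials API, which is why the Token Creator role is required. Here is a minimal sketch that only builds that request without sending it. The helper name is hypothetical; the endpoint and body fields follow the public generateAccessToken REST method as I understand it.

```python
import json

IAM_CREDENTIALS = "https://iamcredentials.googleapis.com/v1"


def build_generate_access_token_request(target_sa, scopes, lifetime_seconds=3600):
    """Return (url, json_body) for a generateAccessToken call.

    The real request is a POST authenticated with the *caller's* bearer
    token (the source credentials); the response carries a short-lived
    access token for the target service account.
    """
    url = (f"{IAM_CREDENTIALS}/projects/-/serviceAccounts/"
           f"{target_sa}:generateAccessToken")
    body = {
        "scope": list(scopes),               # scopes for the new token
        "lifetime": f"{lifetime_seconds}s",  # duration string, e.g. "3600s"
    }
    return url, json.dumps(body)


url, body = build_generate_access_token_request(
    "test@my-project-id.iam.gserviceaccount.com",
    ["https://www.googleapis.com/auth/drive"],
)
print(url)
print(body)
```

Note that the scopes are chosen by the caller at request time, which is how this workaround sidesteps the fixed cloud-platform scope.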

Please note that there is an important security consideration for this approach: all App Engine services in a given project run under the same service account. Hence all of them will be able to impersonate the target service account, which may be a security concern.

There is no easy way around this, unfortunately, but I present a solution further down this post.

Update Nov 2021: App Engine now allows specifying a custom service account per service.

Cloud Run / Functions

When deploying a Cloud Run service or a Cloud Function, there is no mention of scopes. Finally! Why burden yourself when you can just request the scopes you need on the fly, like so:

    import google.auth

    SCOPES = ["https://www.googleapis.com/auth/drive"]
    creds, _ = google.auth.default(scopes=SCOPES)

Then proceed as usual with the API of your choice. See here the full example.

I believe this is how it should’ve actually worked in GKE/GCE/GAE as well.

In case you’re wondering — no, doing the same in GCE/GKE won’t yield the desired effect. On GCE/GKE, the scopes you request in your app are ignored and reset to those configured for the VM/node pool.
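Since the VM-level scopes win on GCE/GKE, it can help to check them at startup rather than discover a 403 later. The sketch below reads the scopes from the metadata server and reports what is missing. The helper names are hypothetical, and I am assuming the scopes endpoint returns one scope URL per line; on GKE with Workload Identity the behavior may differ.

```python
import urllib.request

# Metadata server endpoint listing the scopes of the VM's default service account.
SCOPES_URL = ("http://metadata.google.internal/computeMetadata/v1/"
              "instance/service-accounts/default/scopes")


def parse_scopes(body: str) -> set:
    """Split the metadata response (assumed: one scope URL per line)."""
    return {line.strip() for line in body.splitlines() if line.strip()}


def fetch_vm_scopes(timeout: float = 2.0) -> set:
    """Ask the metadata server for the VM's scopes. Only works on GCE/GKE."""
    req = urllib.request.Request(SCOPES_URL,
                                 headers={"Metadata-Flavor": "Google"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_scopes(resp.read().decode("utf-8"))


def missing_scopes(granted: set, required: list) -> list:
    """Return the required scopes that the VM was not created with."""
    return [scope for scope in required if scope not in granted]


# Offline demonstration with a canned metadata response.
sample = "https://www.googleapis.com/auth/cloud-platform\n"
print(missing_scopes(parse_scopes(sample),
                     ["https://www.googleapis.com/auth/drive"]))
```

On a real VM you would call `missing_scopes(fetch_vm_scopes(), SCOPES)` and fail fast if the list is non-empty.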

Local Dev

When I started to work with Google Directory API I was surprised that to invoke the API one must use a GCP service account and consequently have a proper GCP project with the desired API enabled.

Since ADC credentials obtained in the local development env (on your laptop, that is) usually will belong to your user, they are practically useless for working with many non-GCP APIs (e.g. Directory API).

The only way is, again, to impersonate, and the code from the GAE section above works here as is.

What are Subjects?

Subjects are oftentimes a tiny detail that complicates authentication bootstrap code even further. The thing is when accessing Directory API (e.g. list users for your G Suite domain), you act on behalf of a certain user in the directory that needs to be specified as the credential’s subject.

Considering this, it can actually be a closed-loop impersonation when running from a local dev environment: a locally authenticated user@example.com impersonates a service account in order to talk to the Directory API of their own domain, and then this service account acts on behalf of user@example.com in the directory.

Anyhow, we need to specify this “act as” email as a credentials subject which explodes our auth setup code to:

    import google.auth
    import google.auth.iam
    from google.auth.transport.requests import Request
    from google.oauth2 import service_account as sa
    from googleapiclient.discovery import build

    TOKEN_URI = "https://accounts.google.com/o/oauth2/token"
    SCOPES = ["https://www.googleapis.com/auth/drive"]
    svcacc = "test@my-project-id.iam.gserviceaccount.com"
    subject = "user@example.com"

    adc, _ = google.auth.default()
    # Sign on behalf of the target service account through the IAM API,
    # so no private key file is needed locally
    signer = google.auth.iam.Signer(Request(), adc, svcacc)
    creds = sa.Credentials(signer, svcacc, TOKEN_URI,
                           scopes=SCOPES, subject=subject)
    service = build("sheets", "v4", credentials=creds,
                    cache_discovery=False)

It works for all of the cases described above too: just pass subject=None if you don’t use the Directory API, and make sure to assign the Token Creator role properly.
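For intuition about where the subject ends up: in the OAuth 2.0 service account flow, the credentials object signs a JWT assertion and trades it for an access token, and the subject travels as the "sub" claim. Below is a rough sketch of that claim set only. The function name is hypothetical, and the signing and token exchange that the library performs are left out.

```python
import time


def build_jwt_claims(service_account, token_uri, scopes,
                     subject=None, now=None, lifetime=3600):
    """Sketch of the claim set behind a service account token request."""
    now = int(time.time()) if now is None else now
    claims = {
        "iss": service_account,     # who signs the assertion
        "aud": token_uri,           # where the assertion is sent
        "scope": " ".join(scopes),  # space-delimited OAuth scopes
        "iat": now,
        "exp": now + lifetime,
    }
    if subject is not None:
        # The user the service account acts on behalf of.
        claims["sub"] = subject
    return claims


claims = build_jwt_claims(
    "test@my-project-id.iam.gserviceaccount.com",
    "https://accounts.google.com/o/oauth2/token",
    ["https://www.googleapis.com/auth/drive"],
    subject="user@example.com",
)
print(claims["sub"], claims["scope"])
```

With the IAM signer from the snippet above, the signature over these claims is produced remotely, which is why a SignBlob call is involved instead of a local private key.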

So we have a solution, yay! The only thing is that when you “productionize” this approach and throw identity tokens into the equation, it results in ~200 lines of code just to obtain authentication credentials, half of which are comments, which is usually a sign of complexity.

A Better Way?

We have a solution that works everywhere (apart from the potential security concern with App Engine), but it now feels so convoluted compared to the simple google.auth.default() call we used before. Is there another way?

I have one suggestion that may sound simpler to some. At least it addresses the App Engine security concern with impersonation. Here it is:

- Create a key file for the service account you want to impersonate. Yes, the plain, old, and “ugly” key file.

- Encrypt the file with Mozilla sops + GCP KMS and store it as part of your code.

- Grant your operational service account a decryptor role to the GCP KMS key you used to encrypt the service account key file.

Now your bootstrap code will look like this:

    import json
    import os
    import subprocess
    from google.oauth2 import service_account as sa

    path = os.getenv("SERVICE_ACCOUNT_KEY_PATH")
    # sops decrypts the key file via Cloud KMS and prints the JSON to stdout
    json_data: str = subprocess.run(["sops", "-d", path],
                                    check=True, capture_output=True,
                                    text=True, timeout=10).stdout
    sa_info = json.loads(json_data)
    creds = sa.Credentials.from_service_account_info(sa_info)

The resulting creds object has two handy methods, with_scopes() and with_subject(), that return a new credentials object with the updated scopes/subject.

While this requires more setup, including incorporating a 30 MiB sops binary into your Cloud Functions and container images, the flow is very easy to understand (contrary to the previous approach).

This solution also solves the issue with App Engine. It’s still true that App Engine’s default service account can potentially decrypt all of the key files, but different GAE services deployed from different git repos will not have access to each other’s encrypted service account key files. Hence we have secret isolation here!

Auth Traceability

This is a bonus part. Thank you for making it this far.

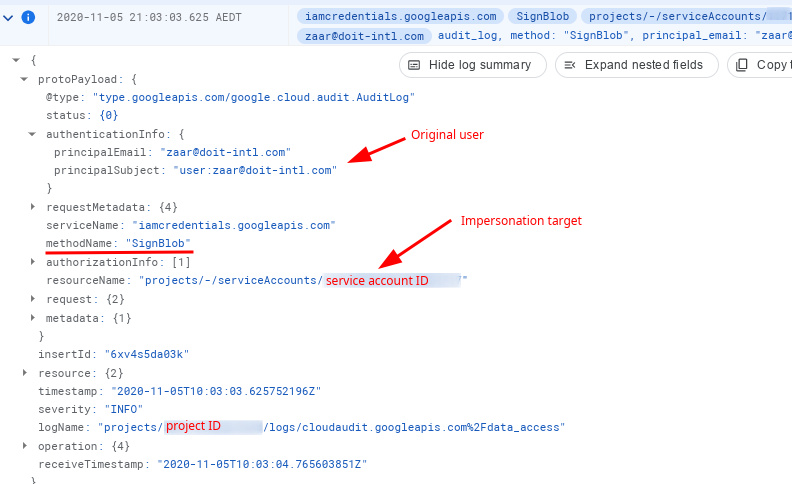

One nice bonus of impersonation over the ugly service account key files is that you can trace the original principal who did the impersonation to a service account.

Here is the audit cheat-sheet:

- Enable Data Access audit logs for IAM service

- Impersonate, e.g.

    gcloud --impersonate-service-account=<email> projects list

- Open Logs Explorer and select “Service Account” from the “Resource” drop-down and “data_access” from the “Log name” drop-down.

Or just input the below query:

resource.type="service_account"

logName="projects/<project-id>/logs/cloudaudit.googleapis.com%2Fdata_access"

protoPayload.methodName=("SignBlob" OR "GenerateAccessToken")

Depending on how you perform impersonation, payloads with a methodName of SignBlob or GenerateAccessToken are what you are after:

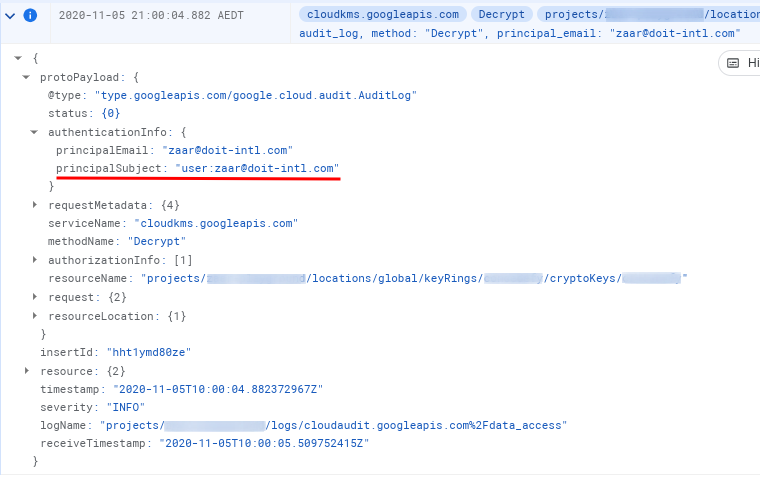
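If you run this check across several projects, the filter can be generated rather than typed by hand. A small sketch follows; the function name is made up, and it simply reproduces the query text above for a given project ID.

```python
def impersonation_audit_filter(project_id,
                               methods=("SignBlob", "GenerateAccessToken")):
    """Build the Logs Explorer filter for service account impersonation."""
    method_clause = " OR ".join(f'"{m}"' for m in methods)
    return "\n".join([
        'resource.type="service_account"',
        f'logName="projects/{project_id}/logs/'
        'cloudaudit.googleapis.com%2Fdata_access"',
        f"protoPayload.methodName=({method_clause})",
    ])


print(impersonation_audit_filter("my-project-id"))
```

The resulting string can be pasted into Logs Explorer or, as far as I know, passed as the filter argument to `gcloud logging read`.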

Now, with the service account keys + SOPS approach, we are back to square one. Or are we? In the end, we just use the service account key, so there is no direct indication of who the actual entity behind the operation is. But we need to decrypt that JSON key file first using Cloud KMS (which SOPS does behind the scenes), and this is where we leave breadcrumbs:

- Enable Data Access audit logs for the Cloud KMS service

- Use SOPS to decrypt your JSON key file

- Now, querying for

    resource.type="cloudkms_cryptokey"
    resource.labels.location="global"

we have our clues:

That is, we can see who used our keys to decrypt stuff; and while not strong enough evidence on its own, keeping good naming conventions together with a “one KMS key per one service account” approach can provide a strong correlation for suspect tracing.

Epilogue

I hope this post was able to shed light on why your authentication may break on you the moment you step out of a GCP-only world into the much wider Google APIs ecosystem.

To recap: OAuth scopes, while not a real security mechanism for the many services that have proper IAM nowadays, are still mandatory to get things working; and depending on your platform (App Engine, GCE/GKE, etc.), it may take a decent effort to get there.

Zaar Hai is a Staff Cloud Architect at DoiT International.