Kubernetes has become a key technology in cloud computing, and it can revolutionize how your organization deploys, scales, and manages containerized applications. As an open-source container orchestration system, it simplifies managing distributed systems, letting applications run reliably across different environments. How can Kubernetes work for your company? By automating deployment, scaling, and recovery, Kubernetes can help your organization streamline operations and focus on innovation.

If your organization is seeking efficiency and reliability, Kubernetes offers tools to help you achieve these goals. These tools enable teams to manage systems with confidence and improve workflows. With features like load balancing, automated rollouts, and scaling, Kubernetes is all but essential for DevOps. It can optimize resources while keeping your applications resilient.

What is Kubernetes architecture?

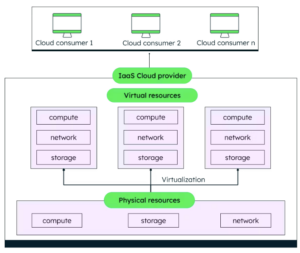

Before we get into Kubernetes architecture, it’s important to understand containerization and its impact on application deployment. Containerization is a lightweight alternative to traditional virtualization. It bundles an application along with all its dependencies (like libraries, binaries, and config files) into one portable package called a container. Unlike traditional virtual machines, which need a full operating system for each instance, containers share the host system’s OS kernel, so they carry less overhead, use fewer resources, and start faster.

Because a container carries its dependencies with it, it provides a consistent environment wherever it runs, which enables "build once, run anywhere" functionality.

Kubernetes simplifies managing large-scale container deployments with features like scalability, automation, self-healing, and a strong ecosystem, making it a leading container orchestration platform. It provides a reliable framework for handling the complexities of distributed computing environments with efficiency and precision. For example, in a traditional environment, your team would have to manually manage the deployment and scaling of applications. This process is time-consuming, error-prone, and difficult to maintain as the infrastructure grows.

By exposing a single API for automation, Kubernetes lets you manage containers consistently across different environments. It also comes with self-healing features, so it can recover from many failures without manual intervention. With Kubernetes, your team can smoothly roll out new app versions while keeping everything running and responsive, even during heavy traffic.

Let’s lay out the basics of how Kubernetes tackles some of the biggest challenges in software deployment:

- Automatic scaling: Kubernetes adjusts resources in real time based on application demand, ensuring your system can handle fluctuations without manual intervention.

- Self-healing capabilities: If a container fails, Kubernetes detects the issue and automatically replaces it, keeping your applications running smoothly.

- Zero-downtime updates: With seamless rollouts and rollbacks, updates can be implemented without disrupting users, minimizing downtime.

- Advanced networking: Kubernetes manages complex networking needs, providing smooth communication between multiple containers in distributed systems.

- Intelligent resource utilization: Workload scheduling is optimized to make the best use of infrastructure, helping you control costs while maintaining performance.

Kubernetes simplifies the challenges of managing containerized applications, allowing you to concentrate on building and running software without the burden of managing infrastructure. Here are some key benefits of using Kubernetes:

- Flexibility: Deploy applications with ease across a variety of environments.

- Consistency: Maintain reliable performance, whether on-premises, with cloud providers, or in hybrid environments.

- Efficiency: Reduce operational overhead by automating routine management tasks.

- Scalability: Effectively manage complex, distributed systems without added difficulty.

- Cost optimization: Improve resource utilization to manage costs more effectively.

When you're ready to implement Kubernetes, you've got some excellent managed service options from major cloud providers. Amazon's Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS) take care of the heavy lifting for you. These services handle the complex tasks of managing your control plane, automating updates, and maintaining high availability.

Each comes with its own perks—EKS integrates seamlessly with AWS services, GKE offers advanced auto-scaling features, and AKS provides strong Azure DevOps integration. By choosing a managed service, you can focus on your applications while the provider handles the infrastructure complexities.

Ultimately, Kubernetes offers practical tools that adapt to your specific needs and simplify the work of managing applications.

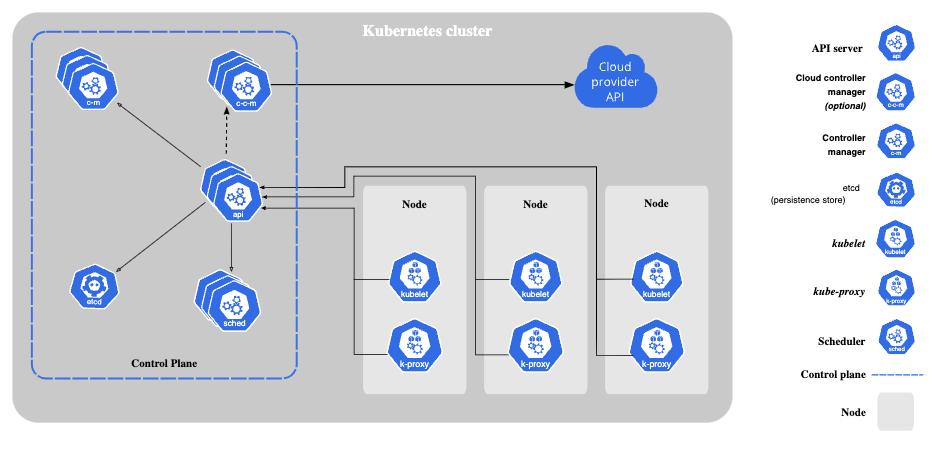

The core Kubernetes architecture components

(Source)


Kubernetes architecture is built around two core components: the control plane and the worker nodes. This structure is designed to provide a reliable, scalable, and flexible platform for managing containerized applications. By separating responsibilities between these components, Kubernetes allows for efficient orchestration and can simplify scaling and managing workloads.

Kubernetes control plane architecture

The control plane oversees a Kubernetes cluster—a group of worker machines (called nodes) that run containerized applications. In Kubernetes, the cluster is the backbone of your deployment, bringing together all the machines and resources needed to run your apps as one cohesive, manageable unit.

Control plane components include:

kube-apiserver

kube-apiserver is the central management component of a Kubernetes cluster. It works by:

- Providing access to the Kubernetes API, which serves as the main entry point for interacting with the cluster

- Managing all administrative tasks and overseeing cluster state to keep everything running smoothly

- Acting as the primary interface for cluster interactions, allowing you to control and manage operations effectively

etcd

etcd is a distributed and consistent key-value store that plays a critical role in Kubernetes architecture. Its key benefits include:

- Serving as the primary data storage mechanism for the cluster, maintaining the complete state of the system

- Keeping your cluster’s data secure and recoverable with reliable configuration backups

- Providing stability and reliability with its consistent state management across the entire cluster

- Supporting high availability through its distributed architecture, which helps minimize downtime
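To make the high-availability point concrete: etcd relies on the Raft consensus algorithm, so a cluster keeps accepting writes only while a majority (a quorum) of its members is reachable. The short sketch below works through that arithmetic. The function names are our own for illustration and are not part of etcd or Kubernetes.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` voting members."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def failure_tolerance(members: int) -> int:
    """How many members can be lost while a majority is still reachable."""
    return members - quorum(members)


# Print cluster size, quorum, and tolerated failures for common sizes.
for size in (1, 3, 4, 5):
    print(size, quorum(size), failure_tolerance(size))
```

Notice that a four-member cluster tolerates the same single failure as a three-member one, which is why odd cluster sizes such as three or five are the usual recommendation.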

kube-scheduler

The kube-scheduler is responsible for determining the best placement for pods within a Kubernetes cluster. It plays a big role in maintaining efficient and intelligent scheduling. When scheduling pods, the kube-scheduler takes several factors into account:

- Resource requirements: Ensures the pod has the CPU, memory, and other resources it needs.

- Hardware/software constraints: Matches the pod to nodes that meet specific hardware or software needs.

- Affinity and anti-affinity rules: Respects rules that define which pods should be placed together (or kept apart).

- Data locality: Places pods closer to the data they need to minimize latency.

- Performance optimization: Balances workloads across nodes to enhance cluster performance.
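The factors above can be pictured as a two-step process: first filter out nodes that cannot run the pod, then score the remaining nodes and pick the best one. Here is a deliberately simplified sketch of that idea. The `Node` and `Pod` classes, the field names, and the scoring rule are all assumptions made for illustration; the real kube-scheduler uses many more plugins and signals.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_capacity: int            # millicores, assumed greater than zero
    memory_capacity: int         # MiB, assumed greater than zero
    cpu_requested: int = 0       # already reserved by other pods
    memory_requested: int = 0
    labels: dict = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    cpu: int                     # requested millicores
    memory: int                  # requested MiB
    node_selector: dict = field(default_factory=dict)


def fits(pod: Pod, node: Node) -> bool:
    """Filter step: enough free resources and all selector labels match."""
    has_cpu = node.cpu_requested + pod.cpu <= node.cpu_capacity
    has_memory = node.memory_requested + pod.memory <= node.memory_capacity
    labels_match = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return has_cpu and has_memory and labels_match


def score(pod: Pod, node: Node) -> float:
    """Score step: prefer the node left with the most free capacity."""
    cpu_free = 1 - (node.cpu_requested + pod.cpu) / node.cpu_capacity
    memory_free = 1 - (node.memory_requested + pod.memory) / node.memory_capacity
    return (cpu_free + memory_free) / 2


def schedule(pod: Pod, nodes: list):
    """Return the name of the chosen node, or None if no node fits."""
    feasible = [node for node in nodes if fits(pod, node)]
    if not feasible:
        return None
    return max(feasible, key=lambda node: score(pod, node)).name


nodes = [
    Node("node-a", 4000, 8192, cpu_requested=3000, memory_requested=4096,
         labels={"disktype": "ssd"}),
    Node("node-b", 4000, 8192, cpu_requested=1000, memory_requested=2048,
         labels={"disktype": "ssd"}),
    Node("node-c", 8000, 16384, labels={"disktype": "hdd"}),
]
web = Pod("web", cpu=500, memory=512, node_selector={"disktype": "ssd"})
print(schedule(web, nodes))
```

In this example, node-c is filtered out because its label does not match, and node-b wins the scoring step because it has more spare capacity than node-a.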

kube-controller-manager

The kube-controller-manager oversees multiple controller processes to maintain the cluster’s desired state. Key controllers include:

- Node controller: Keeps an eye on the health and status of nodes to make sure they’re working properly.

- Replication controller: Keeps the correct number of pod replicas running as specified.

- Endpoints controller: Populates endpoint objects, linking services to the pods that back them.

- Service account & token controllers: Create default service accounts and API access tokens for new namespaces.

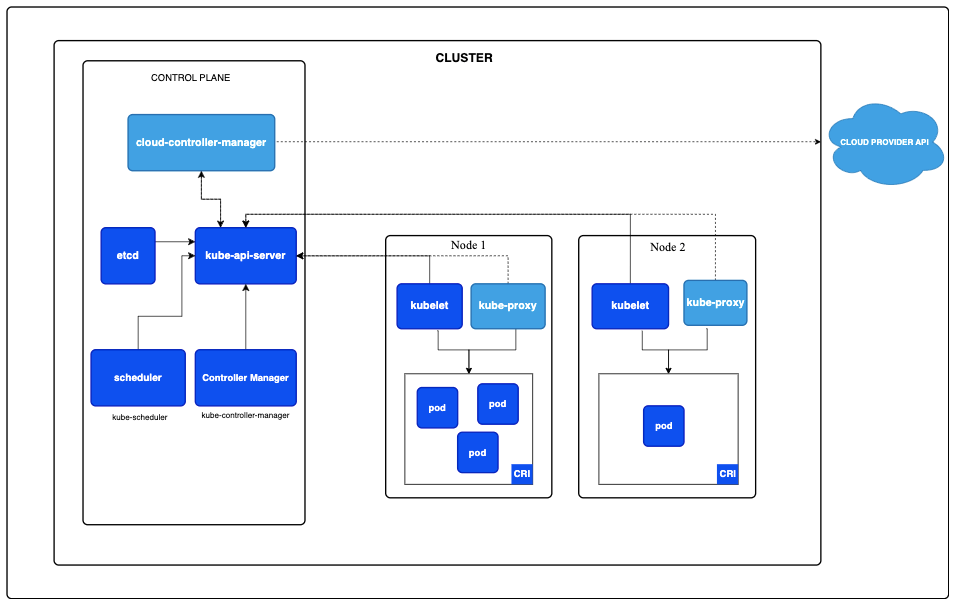

Kubernetes node architecture

(Source)


Worker nodes are the computational engines of the Kubernetes cluster, running your containerized applications. These nodes are essentially the computing resources you’re paying for in your cloud setup, so their number and size directly affect your costs. When it comes to cutting Kubernetes expenses, organizations usually focus on making better use of their nodes. Tweaking the node count or picking the right sizes can make a big difference in bringing down costs. Worker nodes include the following components:

kubelet

The kubelet is the primary node agent in Kubernetes. It’s responsible for several key tasks that ensure the smooth operation of your cluster. Here’s a breakdown of its main responsibilities:

- Communicating with the control plane: The kubelet regularly checks in with the control plane to receive instructions and report the node's status and workloads.

- Correctly running pods: It makes sure containers within each pod are running as expected, taking action if something goes wrong.

- Monitoring node and pod health: The kubelet tracks the health of the node and its pods, reporting any issues to help maintain stability and performance.

- Managing container lifecycles: From starting and stopping containers to managing restarts, the kubelet oversees the entire lifecycle of containers.

Container runtime

The container runtime is a component responsible for running containers in a Kubernetes environment. It plays a role in smooth container execution and resource management.

- Supports multiple runtimes: Kubernetes is flexible and works with several runtime options, including:

  - Docker Engine (through the cri-dockerd adapter, since Kubernetes 1.24 removed the built-in dockershim)

  - containerd

  - CRI-O

- Key responsibilities: The container runtime manages essential tasks, such as:

  - Pulling container images from registries

  - Executing containers efficiently

  - Isolating resources for stable and secure performance

What are other Kubernetes infrastructure components?

Kubernetes includes several key infrastructure components that work alongside its core functionality. Here’s a breakdown of each component to help you understand their roles:

- Pods: The smallest deployable units in Kubernetes, pods contain one or more containers that share resources and run together as a single unit.

- Deployments: Used to define the desired state for pods and replica sets, deployments allow your application to run smoothly and scale appropriately.

- Services: These create a stable way to manage network rules and communication, allowing different parts of your application to interact reliably.

- Namespaces: Providing a way to isolate resources within your cluster, namespaces make it easier to organize and manage complex environments.

- Persistent volumes: These keep your data safe by separating storage from individual pods, so it sticks around even if the pods get re-created.

- ConfigMaps and secrets: Helping manage configuration and sensitive data efficiently, ConfigMaps and secrets keep your applications both flexible and secure.

Each of these components plays an important role in streamlining application management and enabling smooth scaling within your cluster. By working together, they help simplify complex processes, making it easier to manage and grow your system efficiently.
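To see how a few of these pieces fit together, here is a minimal sketch that builds a Deployment and a Service as plain Python dictionaries and prints them as JSON, a format `kubectl apply -f` accepts alongside YAML. The names `web` and `demo`, the label, and the `nginx:1.27` image are placeholders chosen for illustration, not values from any real environment.

```python
import json

# Hypothetical label that ties the Deployment's pods to the Service.
APP_LABELS = {"app": "web"}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web", "namespace": "demo"},
    "spec": {
        "replicas": 3,  # desired number of pod copies
        "selector": {"matchLabels": APP_LABELS},
        "template": {
            "metadata": {"labels": APP_LABELS},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "nginx:1.27",  # placeholder image
                        "ports": [{"containerPort": 80}],
                    }
                ]
            },
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web", "namespace": "demo"},
    "spec": {
        # Traffic is routed to any pod whose labels match this selector.
        "selector": APP_LABELS,
        "ports": [{"port": 80, "targetPort": 80}],
    },
}

# Wrap both objects in a List so they can be applied together.
manifest = {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}
print(json.dumps(manifest, indent=2))
```

The important detail is the shared label: the Deployment stamps it on every pod it creates, and the Service uses the same label to find those pods, so the two objects stay connected even as individual pods come and go.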

Interactions between components

Simplified Kubernetes diagram

(Source)

The Kubernetes ecosystem relies on well-orchestrated interactions to create a dynamic container management environment. When a deployment request is made, the user submits a configuration through the Kubernetes API. The kube-apiserver validates the request, acting as the entry point for all cluster operations. Once validated, the scheduler analyzes the cluster’s state to find the best node for the workload, considering resource availability, hardware constraints, and workload distribution.

Next, the kubelet on the target node instructs the container runtime to pull the required container images from the registry, while kube-proxy, the network proxy that runs on each node, configures network routing to maintain communication within the cluster and with external services. The container runtime then launches the containers, moving the system closer to the desired state.

Kubernetes’ true strength lies in its continuous monitoring and self-healing. Controllers constantly compare the current cluster state with the desired state, making corrections as needed. This includes replacing failed containers, redistributing workloads when nodes fail, and reallocating resources to maintain performance and reliability.
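The compare-and-correct behavior described above is often called a reconciliation loop. The sketch below shows a single pass of such a loop for pod replicas. It is a toy model under our own assumptions (pods are plain dictionaries, and `reconcile` is a hypothetical helper), not how the real controllers are implemented.

```python
import itertools


def reconcile(desired_replicas, observed_pods, new_names):
    """One control-loop pass: return the pod list after corrections."""
    # Drop failed pods so they can be replaced.
    pods = [pod for pod in observed_pods if pod["healthy"]]
    # Create replacements until the desired count is reached.
    while len(pods) < desired_replicas:
        pods.append({"name": next(new_names), "healthy": True})
    # Remove surplus pods if the desired count was lowered.
    return pods[:desired_replicas]


names = (f"web-{i}" for i in itertools.count(3))
observed = [
    {"name": "web-0", "healthy": True},
    {"name": "web-1", "healthy": False},  # simulated container failure
    {"name": "web-2", "healthy": True},
]
after = reconcile(3, observed, names)
print([pod["name"] for pod in after])
```

Because the loop only looks at the gap between the desired and observed state, the same logic covers a crashed pod, a lost node, or a manual scale-up or scale-down.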

This orchestration of components allows Kubernetes clusters to stay resilient, efficient, and responsive to changing demands. Its ability to monitor, heal, and optimize reduces your operational overhead, boosts reliability, and accelerates innovation.

Kubernetes architecture best practices and principles

Building a strong Kubernetes architecture involves following a set of foundational best practices. Let’s break down the key principles that help you design systems that are reliable, scalable, and secure.

Immutable infrastructure

- Treat containers as disposable. This means designing your system so containers can be replaced rather than modified in place.

- Avoid making changes directly to running containers. Instead, use declarative configurations to establish consistency and repeatability.

- Rely on versioned, immutable container images to maintain stability and predictability, providing a reliable foundation for your applications.

Microservices design

- Structure your application as loosely coupled services. This allows components to function independently, improving flexibility.

- Design each service to scale independently based on demand, enabling better resource allocation.

- Simplify maintenance and updates by keeping services small and focused on specific tasks.

Resource management

- Specify clear resource requests and limits for containers to prevent resource contention and overuse.

- Implement intelligent scaling strategies, such as horizontal pod autoscaling, to keep your application flexible enough to adapt to workload changes.

- Monitor resource usage regularly to identify inefficiencies and optimize system performance.
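As a rough illustration of requests and limits, the sketch below parses Kubernetes-style quantities (such as `250m` of CPU or `256Mi` of memory) and totals what a pod asks the scheduler to reserve. The container specs are made-up examples, and the parsers handle only a small subset of the quantity suffixes Kubernetes supports.

```python
# Subset of binary memory suffixes; Kubernetes accepts more than these.
MEMORY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '250m' or '0.5' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '256Mi' to bytes."""
    for suffix, factor in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain number of bytes


# Hypothetical container specs, shaped like the `resources` field of a pod.
containers = [
    {"name": "web", "resources": {
        "requests": {"cpu": "250m", "memory": "256Mi"},
        "limits": {"cpu": "500m", "memory": "512Mi"}}},
    {"name": "sidecar", "resources": {
        "requests": {"cpu": "100m", "memory": "64Mi"},
        "limits": {"cpu": "200m", "memory": "128Mi"}}},
]


def total_requests(specs):
    """Sum the CPU (millicores) and memory (bytes) the pod reserves."""
    cpu = sum(cpu_millicores(c["resources"]["requests"]["cpu"]) for c in specs)
    memory = sum(memory_bytes(c["resources"]["requests"]["memory"]) for c in specs)
    return cpu, memory


def requests_within_limits(spec) -> bool:
    """A request should never exceed the matching limit."""
    requests, limits = spec["resources"]["requests"], spec["resources"]["limits"]
    return (cpu_millicores(requests["cpu"]) <= cpu_millicores(limits["cpu"])
            and memory_bytes(requests["memory"]) <= memory_bytes(limits["memory"]))


print(total_requests(containers))
print(all(requests_within_limits(c) for c in containers))
```

Requests are what the scheduler reserves when placing the pod, while limits cap what a container may consume at runtime, so keeping the two close together makes node usage more predictable.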

Security considerations

- Protect your cluster with strong authentication mechanisms, allowing access only to authorized users and systems.

- Use role-based access control (RBAC) to manage permissions, limiting access to what is necessary for each role.

- Keep your Kubernetes components and container images up to date with the latest patches and updates to reduce vulnerabilities.

- Minimize container privileges by following the principle of least privilege, reducing the risk of security breaches.
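To ground the RBAC and least-privilege points, here is a small sketch of a read-only Role, a RoleBinding that grants it to a service account, and a restrictive container security context, all written as Python dictionaries that mirror the corresponding manifest fields. The names `pod-reader`, `read-pods`, `web`, and `demo` are placeholders, and the `allows` helper is a simplified check of our own that ignores wildcards.

```python
import json

# Role: read-only access to pods in the (hypothetical) "demo" namespace.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": "demo"},
    "rules": [
        {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
    ],
}

# RoleBinding: grants the Role to one service account and nothing more.
role_binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "read-pods", "namespace": "demo"},
    "subjects": [{"kind": "ServiceAccount", "name": "web", "namespace": "demo"}],
    "roleRef": {
        "apiGroup": "rbac.authorization.k8s.io",
        "kind": "Role",
        "name": "pod-reader",
    },
}

# Container-level securityContext that follows least privilege.
container_security_context = {
    "runAsNonRoot": True,
    "allowPrivilegeEscalation": False,
    "readOnlyRootFilesystem": True,
    "capabilities": {"drop": ["ALL"]},
}


def allows(role_obj, verb, resource, api_group=""):
    """Simplified check of whether a Role permits a verb on a resource."""
    return any(
        api_group in rule["apiGroups"]
        and resource in rule["resources"]
        and verb in rule["verbs"]
        for rule in role_obj["rules"]
    )


print(allows(role, "get", "pods"))
print(allows(role, "delete", "pods"))
print(json.dumps(container_security_context, indent=2))
```

Starting from a narrow Role like this and adding verbs only when a workload needs them is usually easier to audit than trimming down a broad one later.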

By following these best practices, you can create a Kubernetes architecture that is efficient, scalable, and secure. While challenges may (and will likely) arise, these principles provide a strong foundation to guide your efforts and help you maintain a reliable system.

High availability and scalability

(Source)

Kubernetes is built to deliver high availability and scalability—two must-haves for today’s mission-critical applications. Its high availability comes from a few smart strategies. For starters, Kubernetes uses multiple control plane instances to avoid single points of failure, so the system keeps running even if something goes down. Core components use a leader election process to stay functional, meaning the cluster keeps working smoothly even when individual parts face issues.

On the node level, Kubernetes has built-in redundancy to boost resilience. Workloads are spread across nodes, and it uses intelligent failover mechanisms to quickly recover from node failures without affecting application performance. To go a step further, your organization can set up multi-zone or multi-region deployments, adding geographic redundancy to guard against bigger infrastructure outages.

Scalability is another key feature of Kubernetes. The horizontal pod autoscaler automatically adjusts the number of pod replicas based on real-time performance metrics, helping applications handle traffic changes efficiently while optimizing resources. Similarly, the cluster autoscaler can add or remove nodes as needed to keep up with your workload demands, making scaling seamless and cost-effective.
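The horizontal pod autoscaler's core calculation is documented as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when the ratio is within a small tolerance of 1.0 (0.1 by default). The sketch below reproduces that arithmetic. The function name and the `min_replicas` and `max_replicas` defaults are illustrative assumptions, and the real autoscaler also accounts for details such as pod readiness and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the replica count the autoscaler would aim for."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% average CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))
# 4 replicas at 63% against a 60% target: within tolerance, no change.
print(desired_replicas(4, 63, 60))
# 4 replicas at 20% against a 60% target: scale in.
print(desired_replicas(4, 20, 60))
```

The tolerance band is what keeps the replica count from flapping when the metric hovers around its target.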

In addition, Kubernetes is designed to handle large-scale operations and can support thousands of nodes in a single cluster. It distributes workloads dynamically across available resources, which helps avoid bottlenecks and makes the most of your infrastructure. These features make Kubernetes a powerful, enterprise-ready solution that can adapt to even the toughest business challenges, such as peak traffic periods or unexpected resource constraints.

Kubernetes offers plenty of ways to keep costs under control by making the most of your resources. Setting pod resource requests and limits can help you use resources efficiently, while adjusting node sizing and autoscaling can assist with striking the right balance between cost and performance. Tools like Prometheus can give you valuable insights into your usage metrics. DoiT makes cost optimization even easier with automated analysis, smart recommendations, clear cost visibility, and hands-on support—helping you save on infrastructure costs without sacrificing reliability.

Optimize your Kubernetes journey with DoiT

Unless you have the right expertise, managing Kubernetes environments can be a challenging prospect. At DoiT, we offer comprehensive Kubernetes management solutions designed to help your organization tackle these challenges effectively. Our platform focuses on:

- Optimizing cluster performance for smoother operations

- Reducing cloud infrastructure costs to improve your budget efficiency

- Streamlining deployment processes to save you time and resources

- Implementing best-practice configurations for your long-term success through our GKE Accelerator and EKS Accelerator programs

DoiT’s platform makes it easy to optimize your Kubernetes deployments. You get tools like real-time scaling analysis with smart recommendations, cost management features like expense dashboards and right-sizing tips, and performance boosters like container-level analytics and smarter workload placement. Simplify scaling, cut costs, and boost performance without the hassle.

From AWS to Azure, DoiT’s Kubernetes accelerators and cloud analytics can help you simplify complex Kubernetes architectures and turn them into a strategic DevOps advantage for your business. Explore DoiT’s Kubernetes solutions today to see how they can help.