Are you experiencing slow transfer speeds between your GCE VM and a Cloud Storage bucket? Then read on to learn how to maximize your upload and download throughput for Linux and Windows VMs!

Overview

I recently had a DoiT International customer ask why data on their Google Compute Engine (henceforth GCE) Windows Server’s local SSD was uploading to a Cloud Storage (GCS) bucket much more slowly than expected. I began with what I thought would be a simple benchmark of gsutil commands, meant to demonstrate the effectiveness of the gsutil arguments recommended by the GCP documentation. Instead, my ‘quick’ look into the issue turned into a full-blown investigation of data transfer performance between GCE and GCS, as my initial findings were unusual and very much unexpected.

If you are simply interested in knowing what the ideal methods are for moving data between GCE and GCS for a Linux or Windows machine, go ahead and scroll all the way down to “Effective Transfer Tool Use Conclusions”.

If you instead want to scratch your head over the bizarre, often counter-intuitive throughput rates achievable through commonly used commands and arguments, stay with me as we dive into the details of what led to my complex recommendations summary at the end of this article.

Linux VM performance with gsutil: Large files

Although the customer’s request involved data transfer on a Windows server, I first performed basic benchmarking where I felt the most comfortable:

Linux, via the “Debian GNU/Linux 10 (buster)” GCE public image.

Since the customer was already transferring files from local SSDs, and I wanted to minimize the odds that networked disks would affect transfer speeds, I configured two VM sizes, n2-standard-4 and n2-standard-80, each with one local SSD attached on which all benchmarking is performed.

The GCS bucket I will use, as well as all VMs described in this article, are created as regional resources located in us-central1.

To simulate the customer’s large file upload, I created an empty 30 GB file:

fallocate -l 30G temp_30GB_file

From here, I tested two commonly recommended gsutil parameters:

- -m: Used to perform a parallel, multi-threaded copy. Useful for transferring a large number of files in parallel, not for uploading individual files.

- -o GSUtil:parallel_composite_upload_threshold=150M: Used to split files exceeding the specified threshold into parts that are uploaded in parallel and then combined once all parts have finished uploading.

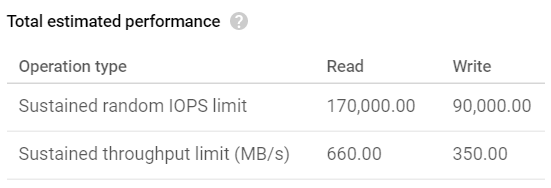

The estimated maximum performance of the local SSD is the same on both VMs: 660 MB/s read and 350 MB/s write throughput.

We should therefore be able to approach 660 MB/s for uploads (reading from the SSD) and 350 MB/s for downloads (writing to the SSD) with gsutil. Let’s see what the upload benchmarks revealed:

time gsutil cp temp_30GB_file gs://doit-speed-test-bucket/
# n2-standard-4: 2m21.893s, 216.50 MB/s
# n2-standard-80: 2m11.676s, 233.30 MB/s

time gsutil -m cp temp_30GB_file gs://doit-speed-test-bucket/
# n2-standard-4: 2m48.710s, 182.09 MB/s
# n2-standard-80: 2m29.348s, 205.69 MB/s

time gsutil -o GSUtil:parallel_composite_upload_threshold=150M cp temp_30GB_file gs://doit-speed-test-bucket/
# n2-standard-4: 1m40.104s, 306.88 MB/s
# n2-standard-80: 0m52.145s, 589.13 MB/s

time gsutil -m -o GSUtil:parallel_composite_upload_threshold=150M cp temp_30GB_file gs://doit-speed-test-bucket/
# n2-standard-4: 1m44.579s, 293.75 MB/s
# n2-standard-80: 0m51.154s, 600.54 MB/s
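The MB/s figures in these comments are consistent with dividing 30,720 MiB (the 30 GiB test file) by the elapsed wall-clock time. For example, 30720 / 141.893 s ≈ 216.50. Below is a minimal bash sketch that reproduces that conversion from the output of bash’s time keyword. The helper name mbps is hypothetical, and the sketch assumes time’s XmY.ZZZs format:

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert bash `time` output (e.g. "2m21.893s") into MB/s,
# where MB means MiB and the default payload is 30 GiB = 30720 MiB.
mbps() {
  local elapsed="$1" size_mib="${2:-30720}"
  awk -v t="$elapsed" -v size="$size_mib" 'BEGIN {
    # Strip an optional trailing "s", then split "XmY.ZZZ" into minutes and seconds.
    sub(/s$/, "", t)
    n = split(t, parts, "m")
    secs = (n == 2) ? parts[1] * 60 + parts[2] : parts[1]
    printf "%.2f\n", size / secs
  }'
}

mbps 2m21.893s   # n2-standard-4, plain gsutil cp upload: prints 216.50
mbps 0m52.145s   # n2-standard-80, parallel composite upload: prints 589.13
```

A second argument overrides the payload size in MiB if you benchmark a file of a different size.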

As expected from GCP’s gsutil documentation, large file uploads benefit from including -o GSUtil:parallel_composite_upload_threshold=150M. When more vCPUs are available to upload file parts in parallel, upload time improves dramatically. With a consistent ~600 MB/s upload speed on the n2-standard-80, we come close to the SSD’s maximum read throughput of 660 MB/s. With composite uploads enabled, adding -m for a single file changes upload time by only a few seconds in either direction. Without composite uploads, it noticeably slows the upload down. So far, we’ve seen nothing out of the ordinary.

Let’s check out the download benchmarks:

time gsutil cp gs://doit-speed-test-bucket/temp_30GB_file .
# n2-standard-4: 8m3.186s, 63.58 MB/s
# n2-standard-80: 6m13.585s, 82.23 MB/s

time gsutil -m cp gs://doit-speed-test-bucket/temp_30GB_file .
# n2-standard-4: 7m57.881s, 64.28 MB/s
# n2-standard-80: 6m20.131s, 80.81 MB/s

Download performance on the 80 vCPU VM reached only 23% of the local SSD’s maximum write throughput. Enabling multi-threading with -m did not improve this single-file download, and both machines stayed well under their maximum network bandwidth (10 Gbps for n2-standard-4, 32 Gbps for n2-standard-80). Yet moving to a larger machine within the same family still led to a ~30% improvement in download speed. Weird, but not as weird as getting only a quarter of the local SSD’s write throughput on an absurdly expensive VM.

What is going on?

After much searching around on this issue, I found no answers but instead discovered s5cmd, a tool designed to dramatically improve uploads to and downloads from S3 buckets. It claims to run 12X faster than the equivalent AWS CLI commands (e.g. aws s3 cp) due in large part to being written in Go, a compiled language, versus the AWS CLI that is written in Python. It just so happens that gsutil is also written in Python. Perhaps gsutil is severely hampered by its language choice, or simply optimized poorly? Given that GCS buckets can be configured to have S3 API Interoperability, is it possible to speed up uploads and downloads with s5cmd by simply working with a compiled tool?

Linux VM performance with s5cmd: Large files

It took a while to get s5cmd working. I discovered the hard way that GCS Interoperability doesn’t support S3’s multipart upload API. Because s5cmd is written only with AWS in mind, it fails on large file uploads to GCS. You must provide -p=1000000, an argument that forces multipart upload to be avoided. See s5cmd issues #1 and #2 for more info.
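Because s5cmd speaks the S3 API, it authenticates with AWS-style credentials. For GCS, these are an HMAC key pair, which can be created for a service account with gsutil hmac create SERVICE_ACCOUNT_EMAIL, exported as AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. The sketch below is a hypothetical wrapper, gcs_s5cmd, that refuses to run without those variables and always points s5cmd at the GCS endpoint used throughout this article. It assumes s5cmd reads credentials from the standard AWS environment variables, as its AWS SDK-based credential chain does:

```shell
#!/usr/bin/env bash
# GCS XML API endpoint, as used in every s5cmd command in this article.
GCS_ENDPOINT="https://storage.googleapis.com"

# Hypothetical wrapper: run s5cmd against GCS via S3 interoperability.
# Assumes AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY hold a GCS HMAC key pair.
gcs_s5cmd() {
  if [[ -z "${AWS_ACCESS_KEY_ID:-}" || -z "${AWS_SECRET_ACCESS_KEY:-}" ]]; then
    echo "gcs_s5cmd: export a GCS HMAC key as AWS_ACCESS_KEY_ID/AWS_SECRET_ACCESS_KEY" >&2
    return 1
  fi
  # Forward all arguments (subcommand, flags, paths) unchanged.
  s5cmd --endpoint-url "$GCS_ENDPOINT" "$@"
}

# Example (large file upload; -p=1000000 avoids the multipart upload API):
#   gcs_s5cmd cp -p=1000000 temp_30GB_file s3://doit-speed-test-bucket/
```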

Note that s5cmd also offers a -c parameter for setting the number of concurrent parts/files transferred, with a default value of 5.
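This sketch assumes -p is interpreted in MiB, which is how s5cmd’s help text describes --part-size (default 50). Under that assumption, the arithmetic below shows why -p=1000000 sidesteps multipart uploads. With the default part size, the 30 GiB test file would be cut into hundreds of parts. With -p=1000000, it fits into a single part. The part_count helper is hypothetical and simply performs ceiling division:

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of parts a file of SIZE_MIB is split into
# for a given s5cmd part size (-p), assumed to be expressed in MiB.
part_count() {
  local size_mib="$1" part_mib="$2"
  echo $(( (size_mib + part_mib - 1) / part_mib ))   # ceiling division
}

part_count 30720 50        # default -p: prints 615, i.e. a multipart upload
part_count 30720 1000000   # -p=1000000: prints 1, i.e. no multipart needed
```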

With those two arguments in mind I performed the following Linux upload benchmarks:

time s5cmd --endpoint-url https://storage.googleapis.com cp -c=1 -p=1000000 temp_30GB_file s3://doit-speed-test-bucket/
# n2-standard-4: 6m7.459s, 83.60 MB/s
# n2-standard-80: 6m50.272s, 74.88 MB/s

time s5cmd --endpoint-url https://storage.googleapis.com cp -p=1000000 temp_30GB_file s3://doit-speed-test-bucket/
# n2-standard-4: 7m18.682s, 70.03 MB/s
# n2-standard-80: 6m48.380s, 75.22 MB/s

As expected, large file uploads perform considerably worse than with gsutil, because multipart upload is not available as an option. We see 70–85 MB/s uploads, compared to gsutil’s roughly 180–600 MB/s. Setting concurrency to 1 instead of the default 5 has only a small and inconsistent effect on performance. Thus, because s5cmd treats AWS as a first-class citizen without consideration for GCP, we cannot improve uploads by using s5cmd.

Below are the s5cmd download benchmarks:

time s5cmd --endpoint-url https://storage.googleapis.com cp -c=1 -p=1000000 s3://doit-speed-test-bucket/temp_30GB_file .
# n2-standard-4: 1m56.170s, 264.44 MB/s
# n2-standard-80: 1m46.196s, 289.28 MB/s

time s5cmd --endpoint-url https://storage.googleapis.com cp -c=1 s3://doit-speed-test-bucket/temp_30GB_file .
# n2-standard-4: 3m21.380s, 152.55 MB/s
# n2-standard-80: 3m45.414s, 136.28 MB/s

time s5cmd --endpoint-url https://storage.googleapis.com cp -p=1000000 s3://doit-speed-test-bucket/temp_30GB_file .
# n2-standard-4: 2m33.148s, 200.59 MB/s
# n2-standard-80: 2m48.071s, 182.78 MB/s

time s5cmd --endpoint-url https://storage.googleapis.com cp s3://doit-speed-test-bucket/temp_30GB_file .
# n2-standard-4: 1m46.378s, 288.78 MB/s
# n2-standard-80: 2m1.116s, 253.64 MB/s

What a dramatic improvement! While there is some variability in download time, it seems that leaving -c and -p at their defaults gives us optimal speed. We are unable to reach the maximum write throughput of 350 MB/s, but ~289 MB/s on an n2-standard-4 is much closer to it than the ~64 MB/s provided by gsutil on the same machine. That is a 4.5X increase in download speed simply from swapping out the data transfer tool.

Summarizing all of the above findings, for Linux:

- Given that s5cmd cannot enable multipart uploads when working with GCS, it makes sense to keep using gsutil for uploads to GCS, so long as you include -o GSUtil:parallel_composite_upload_threshold=150M.

- s5cmd with its default parameters blows gsutil out of the water in download performance. Simply using a data transfer tool written in a compiled language yields a dramatic (4.5X) performance improvement.

Windows VM performance with gsutil: Large files

If you thought the above wasn’t unusual enough, buckle up as we go off the deep end with Windows. The DoiT customer was dealing with Windows Server, after all, so it was time to set out benchmarking that OS. I began to suspect their problem was not going to be between the keyboard and the chair.

I had confirmed that, on Linux, gsutil works great for uploads when given the right parameters and s5cmd works great for downloads with default parameters. Next, it was time to try these commands on Windows, where I would once again be humbled by my lack of experience with PowerShell.

I eventually managed to gather benchmarks from an n2-standard-4 machine with a local SSD attached, running the “Windows Server version 1809 Datacenter Core for Containers, built on 20200813” GCE VM image. Because Windows Server licensing is charged per vCPU, I opted not to gather metrics from an n2-standard-80 in this experiment.

An important side note before we dive into the metrics:

The GCP documentation on attaching local SSDs recommends that for “All Windows Servers” you should use the SCSI driver to attach your local SSD rather than the NVMe driver you typically use for a Linux machine, as SCSI is better optimized for achieving maximum throughput performance. I went ahead and provisioned two VMs with a local SSD attached, one attached via NVMe and one via SCSI, determined to compare their performance alongside the various tools and parameters I’ve been investigating thus far.

Below are the upload speed benchmarks:

Measure-Command {gsutil cp temp_30GB_file gs://doit-speed-test-bucket/}

# NVMe: 3m50.064s, 133.53 MB/s

# SCSI: 4m7.256s, 124.24 MB/s

Measure-Command {gsutil -m cp temp_30GB_file gs://doit-speed-test-bucket/}

# NVMe: 3m59.462s, 128.29 MB/s

# SCSI: 3m34.013s, 143.54 MB/s

Measure-Command {gsutil -o GSUtil:parallel_composite_upload_threshold=150M cp temp_30GB_file gs://doit-speed-test-bucket/}

# NVMe: 5m54.046s, 86.77 MB/s

# SCSI: 6m13.929s, 82.15 MB/s

Measure-Command {gsutil -m -o GSUtil:parallel_composite_upload_threshold=150M cp temp_30GB_file gs://doit-speed-test-bucket/}

# NVMe: 5m55.751s, 86.40 MB/s

# SCSI: 5m58.078s, 85.79 MB/s

With no arguments provided to gsutil, upload throughput is ~60% of that achieved on a Linux machine. Adding arguments mostly degrades performance further. Enabling parallel composite upload, which led to a 42% improvement in upload speed on Linux, instead drops upload speed by 35%. You may also notice something when -m is omitted, the more sensible choice for a single large file: upload from the NVMe drive completes more quickly than from the SCSI drive, even though the latter supposedly has drivers better optimized for Windows Servers. What is going on?!

Low upload performance around 80–85 MB/s was the exact range that the DoiT customer was experiencing, so their problem was at least reproducible. By removing the GCP-recommended argument -o GSUtil:parallel_composite_upload_threshold=150M for large file uploads, the customer could remove a 35% performance penalty. 🤷

Download benchmarking tells a more harrowing tale:

Measure-Command {gsutil cp gs://doit-speed-test-bucket/temp_30GB_file .}

# NVMe 1st attempt: 11m39.426s, 43.92 MB/s

# NVMe 2nd attempt: 9m1.857s, 56.69 MB/s

# SCSI 1st attempt: 8m54.462s, 57.48 MB/s

# SCSI 2nd attempt: 10m1.023s, 51.05 MB/s

Measure-Command {gsutil -m cp gs://doit-speed-test-bucket/temp_30GB_file .}

# NVMe 1st attempt: 8m52.537s, 57.69 MB/s

# NVMe 2nd attempt: 22m4.824s, 23.19 MB/s

# NVMe 3rd attempt: 8m50.202s, 57.94 MB/s

# SCSI 1st attempt: 7m29.502s, 68.34 MB/s

# SCSI 2nd attempt: 9m9.652s, 55.89 MB/s

I could not obtain consistent download benchmarks because of the following behavior:

- Each download operation would hang for up to 2 minutes before initiating

- The download would begin and progress at about 68–70 MB/s, until…

- It sometimes paused again for an indeterminate amount of time

This cycle of hanging and resuming continued throughout each download, causing average speeds for the same VM and same disk to range from 23 MB/s to 58 MB/s. With these random, extended hang-ups in the download process, it was maddening to try to determine whether NVMe or SCSI was better for downloads. More on this verdict later.

Windows VM performance with s5cmd: Large files

Frustrated with wild and wacky gsutil download performance, I quickly moved on to s5cmd — perhaps it might solve or reduce the impact of hangups?

Let’s cover the s5cmd upload benchmarks first:

Measure-Command {s5cmd --endpoint-url https://storage.googleapis.com cp -c=1 -p=1000000 temp_30GB_file s3://doit-speed-test-bucket/}

# NVMe: 6m21.780s, 80.46 MB/s

# SCSI: 7m14.162s, 70.76 MB/s

Measure-Command {s5cmd --endpoint-url https://storage.googleapis.com cp -p=1000000 temp_30GB_file s3://doit-speed-test-bucket/}

# NVMe: 12m56.066s, 39.58 MB/s

# SCSI: 8m12.255s, 62.41 MB/s

As with s5cmd uploads on Linux, performance is hampered by the inability to use multipart uploads. Upload performance with concurrency set to 1 is comparable to that achieved by the same tool on a Linux machine. However, leaving concurrency at its default value of 5 causes dramatic drops (and swings) in performance. The severity of the concurrency setting’s impact is unusual. However, s5cmd upload performance remains markedly worse than gsutil’s, which is strange given that neither tool is using multipart uploads in these runs. Since we don’t want to use s5cmd for uploads anyway, let’s set this oddity aside.

Moving on to the s5cmd download benchmarks:

Measure-Command { s5cmd --endpoint-url https://storage.googleapis.com cp -c=1 -p=1000000 s3://doit-speed-test-bucket/temp_30GB_file .}

# NVMe 1st attempt: 2m17.954s, 222.68 MB/s

# NVMe 2nd attempt: 1m44.718s, 293.36 MB/s

# SCSI 1st attempt: 3m9.581s, 162.04 MB/s

# SCSI 2nd attempt: 1m52.500s, 273.07 MB/s

Measure-Command {s5cmd --endpoint-url https://storage.googleapis.com cp -c=1 s3://doit-speed-test-bucket/temp_30GB_file .}

# NVMe 1st attempt: 3m18.006s, 155.15 MB/s

# NVMe 2nd attempt: 4m2.792s, 126.53 MB/s

# SCSI 1st attempt: 3m37.126s, 141.48 MB/s

# SCSI 2nd attempt: 4m9.657s, 123.05 MB/s

Measure-Command { s5cmd --endpoint-url https://storage.googleapis.com cp -p=1000000 s3://doit-speed-test-bucket/temp_30GB_file .}

# NVMe 1st attempt: 2m17.151s, 223.99 MB/s

# NVMe 2nd attempt: 1m47.217s, 286.52 MB/s

# SCSI 1st attempt: 4m39.120s, 110.06 MB/s

# SCSI 2nd attempt: 1m42.159s, 300.71 MB/s

Measure-Command {s5cmd --endpoint-url https://storage.googleapis.com cp s3://doit-speed-test-bucket/temp_30GB_file .}

# NVMe 1st attempt: 2m48.714s, 182.08 MB/s

# NVMe 2nd attempt: 2m41.174s, 190.60 MB/s

# SCSI 1st attempt: 2m35.480s, 197.58 MB/s

# SCSI 2nd attempt: 2m40.483s, 191.42 MB/s

s5cmd downloads show some of the same hang-ups and variability as gsutil, but s5cmd is once again much faster than gsutil at downloads. Its hang-ups were also shorter, less frequent, or both. These strange hang-ups still remain an occasional issue, though.

In contrast to how I achieved maximum performance on a Linux VM by leaving out the -c and -p parameters, optimal performance seems to have been achieved by including both of these with -c=1 -p=1000000. It is difficult to declare inclusion of these as the most optimal configuration given the random hangups dogging my benchmarks, but it seems to run well enough with these arguments. As with gsutil, it is also challenging to determine whether NVMe or SCSI are better optimized due to the hangups.

In an attempt to better understand download speeds on NVMe and SCSI with optimal s5cmd arguments, I wrote a function that reports the Avg, Min, and Max runtime from 20 repeated downloads, with the goal of averaging out the momentary hangups:

Measure-CommandAvg {s5cmd --endpoint-url https://storage.googleapis.com cp -c=1 -p=1000000 s3://doit-speed-test-bucket/temp_30GB_file .}

### With 20 sample downloads

# NVMe:

# Avg: 1m48.014s, 284.41 MB/s

# Min: 1m23.411s, 368.30 MB/s

# Max: 3m10.989s, 160.85 MB/s

# SCSI:

# Avg: 1m47.737s, 285.14 MB/s

# Min: 1m24.784s, 362.33 MB/s

# Max: 4m44.807s, 107.86 MB/s

There continues to be variability in how long the same download takes to complete, but it is evident that SCSI does not provide an advantage over NVMe in general for large file downloads, despite being the supposedly ideal driver to use with a local SSD on a Windows VM.
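The source of Measure-CommandAvg, a PowerShell function, is not reproduced here. For Linux readers, the following is a hypothetical bash analogue, time_avg, which assumes the same reported fields. It runs a command N times and prints Avg, Min, and Max wall-clock time. MB/s is computed as the payload size divided by each of those times; for example, 30720 MiB / 108.014 s ≈ 284.41 MB/s for the NVMe average above. It relies on GNU date’s %N for sub-second timestamps:

```shell
#!/usr/bin/env bash
# Hypothetical bash analogue of Measure-CommandAvg: run a command N times,
# then report Avg/Min/Max wall-clock time and the corresponding MB/s.
# SIZE_MIB is the payload size in MiB (default: 30 GiB = 30720 MiB).
time_avg() {
  local runs="$1"; shift
  (( runs >= 1 )) || { echo "time_avg: N must be >= 1" >&2; return 2; }
  local size_mib="${SIZE_MIB:-30720}"
  local -a samples=()
  local i start end
  for ((i = 1; i <= runs; i++)); do
    start=$(date +%s.%N)   # GNU date: epoch seconds with nanoseconds
    if ! "$@" >/dev/null 2>&1; then
      echo "time_avg: run $i exited non-zero" >&2
    fi
    end=$(date +%s.%N)
    samples+=("$(awk -v s="$start" -v e="$end" 'BEGIN { printf "%.3f", e - s }')")
  done
  # Aggregate: MB/s is derived from each summary time, not averaged per run.
  printf '%s\n' "${samples[@]}" | awk -v size="$size_mib" '
    NR == 1 { min = $1; max = $1 }
    { sum += $1; if ($1 < min) min = $1; if ($1 > max) max = $1 }
    END {
      avg = sum / NR
      printf "Avg: %.3fs, %.2f MB/s\n", avg, size / avg
      printf "Min: %.3fs, %.2f MB/s\n", min, size / min
      printf "Max: %.3fs, %.2f MB/s\n", max, size / max
    }'
}
```

For example: time_avg 20 s5cmd --endpoint-url https://storage.googleapis.com cp -c=1 -p=1000000 s3://doit-speed-test-bucket/temp_30GB_file .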

Let’s also validate whether uploads are more performant via NVMe using the same averaging function running on 20 repeated uploads:

Measure-CommandAvg {gsutil cp temp_30GB_file gs://doit-speed-test-bucket/}

# NVMe:

# Avg: 3m23.216s, 151.17 MB/s

# Min: 2m31.169s, 203.22 MB/s

# Max: 4m13.943s, 121.42 MB/s

# SCSI:

# Avg: 5m1.570s, 101.87 MB/s

# Min: 3m2.649s, 168.19 MB/s

# Max: 35m3.276s, 14.61 MB/s

We see validation of our earlier individual runs that indicated NVMe might be more performant than SCSI for uploads. In this repeated twenty sample run case, NVMe is considerably more performant.

Thus, with Windows VMs, in contrast to the GCP docs, not only should we avoid using -o GSUtil:parallel_composite_upload_threshold=150M with gsutil when uploading to GCS, we should also avoid using SCSI and prefer NVMe for our local SSD driver to improve uploads and maybe downloads. We also see that for downloads and uploads there are frequent, unpredictable pauses that range from 1–2 minutes to as much as 10–30 minutes.

What do I tell the customer…

At this point, I informed the customer that there are data transfer limitations inherent in using a Windows VM, but that these could be partially mitigated by:

- Leaving optional arguments at their defaults for gsutil cp large file uploads, in spite of the GCP documentation suggesting otherwise

- Using s5cmd -c=1 -p=1000000 instead of gsutil for downloads

- Using the NVMe instead of the SCSI driver for local SSD storage to improve upload and possibly download speeds, in spite of the GCP documentation suggesting otherwise

However, I also informed the customer that uploads and downloads would be dramatically faster if they avoided the Windows-based hang-ups entirely. They could move the data over to a Linux machine via disk snapshots, then perform data sync operations with GCS from a Linux-attached disk. That ultimately proved to be the quickest way to achieve the expected throughput between a GCE VM and GCS. It led to a satisfied customer who was nonetheless frustrated by nonsensical performance issues on their Windows Server.

My takeaway from the experience was this: Not only is gsutil woefully unoptimized for operations on Windows servers, there appears to be an underlying issue with GCS’ ability to transfer data to and from Windows as delays and hangups exist within both gsutil and s5cmd for both download and upload operations.

The customer issue was solved…and yet my curiosity had not yet been sated. What bandwidth banditry might I find when trying to transfer a large number of small files instead of a small number of large files?

Linux VM performance with gsutil: Small files

Moving back to Linux, I split the large 30 GB file into 50K (well, 50,001) files:

mkdir parts
split -b 644245 temp_30GB_file
mv x* parts/
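The “50K (well, 50,001)” figure follows from ceiling division. fallocate -l 30G allocates 30 GiB, or 32,212,254,720 bytes. Fifty thousand chunks of 644,245 bytes cover all but 4,720 of those bytes, which land in one final, short file. Here is a quick shell check of that arithmetic. It is a sketch; the ls count in the comment assumes the parts directory created above:

```shell
#!/usr/bin/env bash
size=$((30 * 1024 * 1024 * 1024))       # fallocate -l 30G allocates 30 GiB
chunk=644245                            # split -b 644245
echo $(( (size + chunk - 1) / chunk ))  # files produced by split: 50001
echo $(( size % chunk ))                # bytes in the final, short file: 4720
# On the VM itself, the same count can be confirmed with:
#   ls parts | wc -l
```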

And proceeded to benchmark upload performance with gsutil:

nohup bash -c 'time gsutil cp -r parts/* gs://doit-speed-test-bucket/smallparts/' &
# n2-standard-4: 71m30.420s, 7.16 MB/s
# n2-standard-80: 69m32.803s, 7.36 MB/s

nohup bash -c 'time gsutil -m cp -r parts/* gs://doit-speed-test-bucket/smallparts/' &
# n2-standard-4: 9m7.045s, 56.16 MB/s
# n2-standard-80: 3m41.081s, 138.95 MB/s

As expected, providing -m to engage in multi-threaded, parallel upload of files dramatically improves upload speed — do not attempt to upload a large folder of files without it. The more vCPUs your machine possesses, the more file uploads you can engage in simultaneously.

Below are the download performance benchmarks with gsutil:

nohup bash -c 'time gsutil cp -r gs://doit-speed-test-bucket/smallparts/ parts/' &
# n2-standard-4: 61m24.516s, 8.34 MB/s
# n2-standard-80: 56m54.841s, 9.00 MB/s

nohup bash -c 'time gsutil -m cp -r gs://doit-speed-test-bucket/smallparts/ parts/' &
# n2-standard-4: 7m42.249s, 66.46 MB/s
# n2-standard-80: 3m38.421s, 140.65 MB/s

Again, providing -m is a must; do not attempt to download a large folder of files without it. As with uploads, gsutil download performance improves with parallel file transfers via -m and with more available vCPUs.

I have found nothing out of the ordinary on Linux with gsutil-based mass small file downloads and uploads.

Linux VM performance with s5cmd: Small files

Having already established that s5cmd should not be used for file uploads to GCS, I will only report on the Linux download benchmarks below:

nohup bash -c 'time s5cmd --endpoint-url https://storage.googleapis.com cp s3://doit-speed-test-bucket/smallparts/* parts/' &
# n2-standard-4: 1m19.531s, 386.26 MB/s
# n2-standard-80: 1m31.592s, 335.40 MB/s

nohup bash -c 'time s5cmd --endpoint-url https://storage.googleapis.com cp -c=80 s3://doit-speed-test-bucket/smallparts/* parts/' &
# n2-standard-80: 1m29.837s, 341.95 MB/s

On the n2-standard-4 machine, we see a 5.8X speedup in mass small file download speed compared to gsutil -m (386.26 vs. 66.46 MB/s). It makes sense, then, to use s5cmd for downloading many small files as well as large ones.

Nothing out of the ordinary was observed on Linux with s5cmd-based mass small file downloads.

Windows VM performance with s5cmd: Small files (and additional large file tests)

Given that s5cmd has significantly faster downloads than gsutil on any OS, I will only consider s5cmd for Windows download benchmarks with small files:

Measure-CommandAvg {s5cmd --endpoint-url https://storage.googleapis.com cp s3://doit-speed-test-bucket/smallparts/* parts/}

# NVMe:

# Avg: 2m39.540s, 192.55 MB/s

# Min: 2m35.323s, 197.78 MB/s

# Max: 2m44.260s, 187.02 MB/s

# SCSI:

# Avg: 2m45.431s, 185.70 MB/s

# Min: 2m40.785s, 191.06 MB/s

# Max: 2m50.930s, 179.72 MB/s

We see that downloading 50K smaller files to a Windows VM performs better and more predictably than when downloading much larger files. NVMe outperforms SCSI only by a sliver.

There is an odd consistency and lack of long hang-ups with the data sync in this use case vs. the individual large file copy commands seen earlier. Just to fully verify that the hang-up trend is more likely to occur with large files, I ran the averaging function over 20 repeated downloads of the 30 GB file:

Measure-CommandAvg {s5cmd --endpoint-url https://storage.googleapis.com cp -p=1000000 s3://doit-speed-test-bucket/temp_30GB_file .}

### With 20 sample downloads

# NVMe:

# Avg: 3m3.770s, 167.17 MB/s

# Min: 1m34.901s, 323.70 MB/s

# Max: 10m34.575s, 48.41 MB/s

# SCSI:

# Avg: 2m20.131s, 219.22 MB/s

# Min: 1m31.585s, 335.43 MB/s

# Max: 3m43.215s, 137.63 MB/s

We see that the Windows download runtime on NVMe ranges from 1m35s to 10m35s. By contrast, with 50K small files on the same OS, the download time ranged only from 2m35s to 2m44s. Thus, there appears to be a Windows- or GCS-specific issue with large file transfers on a Windows VM.

Also note that the average NVMe download time appears to be about 73% longer (3m3s vs 1m46s) than when running s5cmd on Linux.

It is tempting to conclude from the results above that SCSI is more advantageous than NVMe for large file downloads. However, random hang-ups in the download process skew the averages, and the earlier 20-sample run showed the two drivers performing almost identically. I’m going to stick with NVMe as the preferred driver for three reasons: its proven effectiveness at uploading mass small files (see below), its slight edge for mass small file downloads, and its comparable performance at downloading large files.

Windows VM performance with gsutil: Small files

Below are the metrics for uploading many small files from a Windows VM:

Measure-CommandAvg {gsutil -q -m cp -r parts gs://doit-speed-test-bucket/smallparts/}

# NVMe:

# Avg: 16m36.562s, 30.83 MB/s

# Min: 16m22.914s, 31.25 MB/s

# Max: 17m0.299s, 30.11 MB/s

# SCSI:

# Avg: 17m29.591s, 29.27 MB/s

# Min: 17m5.236s, 29.96 MB/s

# Max: 18m3.469s, 28.35 MB/s

We see NVMe outperform SCSI, and speeds continue to be much slower than on a Linux machine. On Linux, mass small file uploads take about 9m7s, so the average Windows NVMe upload time of 16m36s is about 82% longer.

Benchmark Reproducibility

If you are interested in performing your own benchmarks to replicate my findings, below are the shell and PowerShell scripts I used, along with comments summarizing the throughput I observed:

Effective Transfer Tool Use Conclusions

All in all, there is far more complexity than there should be in determining what the best method is for transferring data between GCE VMs — with data located on a local SSD — and GCS.

Windows servers experience drastic reductions in both download and upload speeds for reasons as-of-yet unknown when compared to the best equivalent commands on a Linux machine. These performance drops are substantial, typically 70–80% slower than the equivalent best command to run on Linux. Large file transfers are impacted more significantly than the transfer of many small files.

Thus, if you need to migrate TBs of data or particularly large files from Windows to GCS in a time-sensitive manner, you may want to bypass these performance issues by taking a disk snapshot, attaching a disk created from that snapshot to a Linux machine, and uploading the data via that OS.

Separate from Windows server issues, the default data transfer tool gsutil available on GCE VMs is inadequate for high-throughput downloads for any OS. By using s5cmd instead, you can achieve several-fold improvements in download speed.

To help you sort through the myriad of tool and argument choices, below is a summary of my recommendations for maximizing throughput based on the benchmarks covered in this article:

Linux — Download

- Single large file:

s5cmd --endpoint-url https://storage.googleapis.com cp s3://your_bucket/your_file .

- Multiple files, small or large:

s5cmd --endpoint-url https://storage.googleapis.com cp s3://your_bucket/path* your_path/

Linux — Upload

- Single large file:

gsutil -o GSUtil:parallel_composite_upload_threshold=150M cp your_file gs://your_bucket/

- Multiple files, small or large:

gsutil -m -o GSUtil:parallel_composite_upload_threshold=150M cp -r your_path/ gs://your_bucket/
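If you script these transfers, the Linux recommendations above can be folded into a single helper. The sketch below is hypothetical; gcs_xfer is not part of either tool. It assumes that gsutil is already authenticated and that s5cmd has a GCS HMAC key exported as AWS credentials. It also treats anything other than a regular file as a directory upload:

```shell
#!/usr/bin/env bash
# Hypothetical helper encoding the Linux recommendations:
#   gcs_xfer up   <local_file_or_dir> <gs://bucket/prefix/>
#   gcs_xfer down <s3://bucket/object-or-glob> <local_dir/>
gcs_xfer() {
  local direction="$1" src="$2" dst="$3"
  case "$direction" in
    up)
      if [[ -f "$src" ]]; then
        # Single large file: parallel composite upload, no -m.
        gsutil -o GSUtil:parallel_composite_upload_threshold=150M cp "$src" "$dst"
      else
        # Directory of files: add -m (and -r) for parallel multi-file transfer.
        gsutil -m -o GSUtil:parallel_composite_upload_threshold=150M cp -r "$src" "$dst"
      fi
      ;;
    down)
      # s5cmd with default -c/-p against the GCS S3-compatible endpoint.
      s5cmd --endpoint-url https://storage.googleapis.com cp "$src" "$dst"
      ;;
    *)
      echo "usage: gcs_xfer up|down SRC DST" >&2
      return 2
      ;;
  esac
}
```

Keeping the tool choice inside one function means a later change, such as switching downloads back to gsutil, touches only this helper rather than every calling script.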

Windows Server— Download

- Use NVMe, not SCSI, for connecting a local SSD

- Single large file:

s5cmd --endpoint-url https://storage.googleapis.com cp -c=1 -p=1000000 s3://your_bucket/your_file .

- Multiple files, small or large:

s5cmd --endpoint-url https://storage.googleapis.com cp s3://your_bucket/path* your_path/

Windows Server— Upload

- Use NVMe, not SCSI, for connecting a local SSD

- Single large file:

gsutil cp your_file gs://your_bucket/

- Multiple files, small or large:

gsutil -m cp -r your_path/ gs://your_bucket/your_path/