Photo by Ar_TH from Shutterstock

Introduction

In the vast landscape of cloud computing, network management is a critical component of ensuring your applications and services run smoothly. One fundamental aspect of network management is the allocation of IP (Internet Protocol) addresses to various resources within your cloud environment. These IP addresses serve as digital identification for your cloud resources, enabling them to communicate with each other and the internet at large.

When designing a network, whether in a traditional on-premises setting or within a cloud platform like Google Cloud Platform (GCP), you encounter the concepts of overlapping and non-overlapping IP address ranges.

Overlapping IP ranges occur when two or more IP address ranges share at least one address. For example, 192.168.1.0/24 and 192.168.1.128/25 overlap because both contain 192.168.1.200.

Non-overlapping IP ranges share no addresses: every address in one range is absent from the others.
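To make the definition concrete, a CIDR block p/n can be read as an interval of 32-bit integers, where p is the block's network address (host bits set to zero) converted to an integer. Two intervals overlap exactly when each one starts no later than the other ends. The worked example uses two subnet ranges that appear later in this article, 192.168.1.0/24 and 192.168.2.0/24.

$$
\operatorname{range}(p/n) = \left[\,p,\; p + 2^{32-n} - 1\,\right],
\qquad
[a_1, b_1] \cap [a_2, b_2] \neq \emptyset \iff a_1 \le b_2 \ \wedge\ a_2 \le b_1
$$

$$
\begin{aligned}
192.168.1.0/24 &\mapsto [3232235776,\ 3232236031] \\
192.168.2.0/24 &\mapsto [3232236032,\ 3232236287] \\
a_2 = 3232236032 > b_1 = 3232236031 &\;\Rightarrow\; \text{no overlap}
\end{aligned}
$$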

Each Virtual Private Cloud (VPC) network in GCP is made up of one or more subnets, and their IP address ranges may or may not overlap with the ranges used in other VPC networks. It's best to use non-overlapping subnet ranges, because standard private connectivity options such as VPC Network Peering cannot connect networks whose subnet ranges overlap.

In my previous article, I explained how you can use Private Service Connect (PSC) to privately access services in VPC networks that contain overlapping IP ranges.

GCP has recently introduced Private NAT in a Preview release, which aims to facilitate private communication between overlapping networks. In this article, we will discuss how to configure Inter-VPC NAT to privately access services running in different VPC networks that contain overlapping and non-overlapping IP ranges.

What is Inter-VPC NAT?

In GCP, VPC Network Peering is not allowed between networks with overlapping subnets, because peering does not let you choose which subnet routes to share between the VPCs. Inter-VPC NAT is a Private NAT offering (a NAT gateway configured with type=PRIVATE) that lets resources in an overlapping subnet of one VPC network communicate with resources in a non-overlapping subnet of another VPC network, even when other subnets in the two networks overlap.

It works together with Network Connectivity Center, Google Cloud's centralized service for managing network connectivity. Network Connectivity Center brings Google Cloud's networking capabilities together in a single interface, making it easier for you to connect and manage your cloud and on-premises networks.

Network Connectivity Center uses a hub-and-spoke model for network connectivity. A hub is a central point of network connectivity to your Virtual Private Cloud (VPC) networks. You can think of it as a virtual router that aggregates connectivity from various spokes. The spokes can be VPNs, Interconnects, third-party routers, or other VPC networks.

To enable Inter-VPC NAT between VPC networks, you must configure each VPC network as a VPC spoke of a Network Connectivity Center hub. Unlike VPC Network Peering, VPC spokes let you choose which IPv4 subnet routes are exchanged between the connected VPC networks. Workloads in the subnets whose routes are exchanged get full IPv4 connectivity with each other across all the spokes.

When you create the spokes, you must prevent the overlapping IP address ranges from being shared with other VPC spokes by listing them in each spoke's export exclusion ranges (--exclude-export-ranges).
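The effect of excluding ranges from export can be sketched as a small shell model. This is an illustration under an assumption, not Network Connectivity Center's implementation: it treats a subnet as withheld from the hub when its range falls entirely inside one of the excluded ranges. The function names (`ip_to_int`, `cidr_bounds`, `cidr_contains`, `exported_ranges`) are hypothetical.

```shell
# Illustrative model only, not Network Connectivity Center's implementation.
# Assumption: a subnet range is withheld from the hub when it lies entirely
# inside one of the spoke's --exclude-export-ranges.

# Convert a dotted-quad IPv4 address (e.g. 192.168.1.0) to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address of a CIDR block as "start end" integers.
cidr_bounds() {
  local prefix_len size start
  prefix_len=${1#*/}
  size=$(( 1 << (32 - prefix_len) ))
  start=$(( $(ip_to_int "${1%/*}") / size * size ))  # round down to the block boundary
  echo "$start $(( start + size - 1 ))"
}

# Succeed if CIDR $2 lies entirely inside CIDR $1.
cidr_contains() {
  local outer inner
  outer=$(cidr_bounds "$1")
  inner=$(cidr_bounds "$2")
  [ "${outer% *}" -le "${inner% *}" ] && [ "${inner#* }" -le "${outer#* }" ]
}

# Usage: exported_ranges "EXCLUDED_CIDRS" SUBNET_CIDR...
# Prints each subnet range that is not covered by any excluded range.
exported_ranges() {
  local excluded="$1" subnet ex keep
  shift
  for subnet in "$@"; do
    keep=yes
    for ex in $excluded; do
      if cidr_contains "$ex" "$subnet"; then
        keep=no
        break
      fi
    done
    if [ "$keep" = yes ]; then echo "$subnet"; fi
  done
}
```

With this article's values, `exported_ranges "192.168.1.0/24" 192.168.2.0/24 192.168.1.0/24` prints only `192.168.2.0/24`, which matches the intent that vpc-b shares subnet-b but not the overlapping subnet-c.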

Limitations

Inter-VPC NAT currently has the following key limitations in Preview.

- Inter-VPC NAT does not support Endpoint-Independent Mapping.

- Inter-VPC NAT with cross-project support is not available in Preview.

- Logging support for Inter-VPC NAT is not available in Preview.

- In Preview, you must use only the gcloud CLI to update any existing Private NAT gateway. Using the Google Cloud console to update an existing Private NAT gateway might lead to an incorrect configuration.

- Inter-VPC NAT supports only TCP and UDP connections.

- Inter-VPC NAT supports network address translation (NAT) between Network Connectivity Center Virtual Private Cloud (VPC) spokes only, and not with Virtual Private Cloud networks connected using VPC Network Peering.

- A virtual machine (VM) instance in a VPC network can only access destinations in a non-overlapping subnetwork in a peered network and not in an overlapping subnetwork.

GCP might lift some limitations before the service goes GA. Refer to official docs for updates.

Reference Architecture

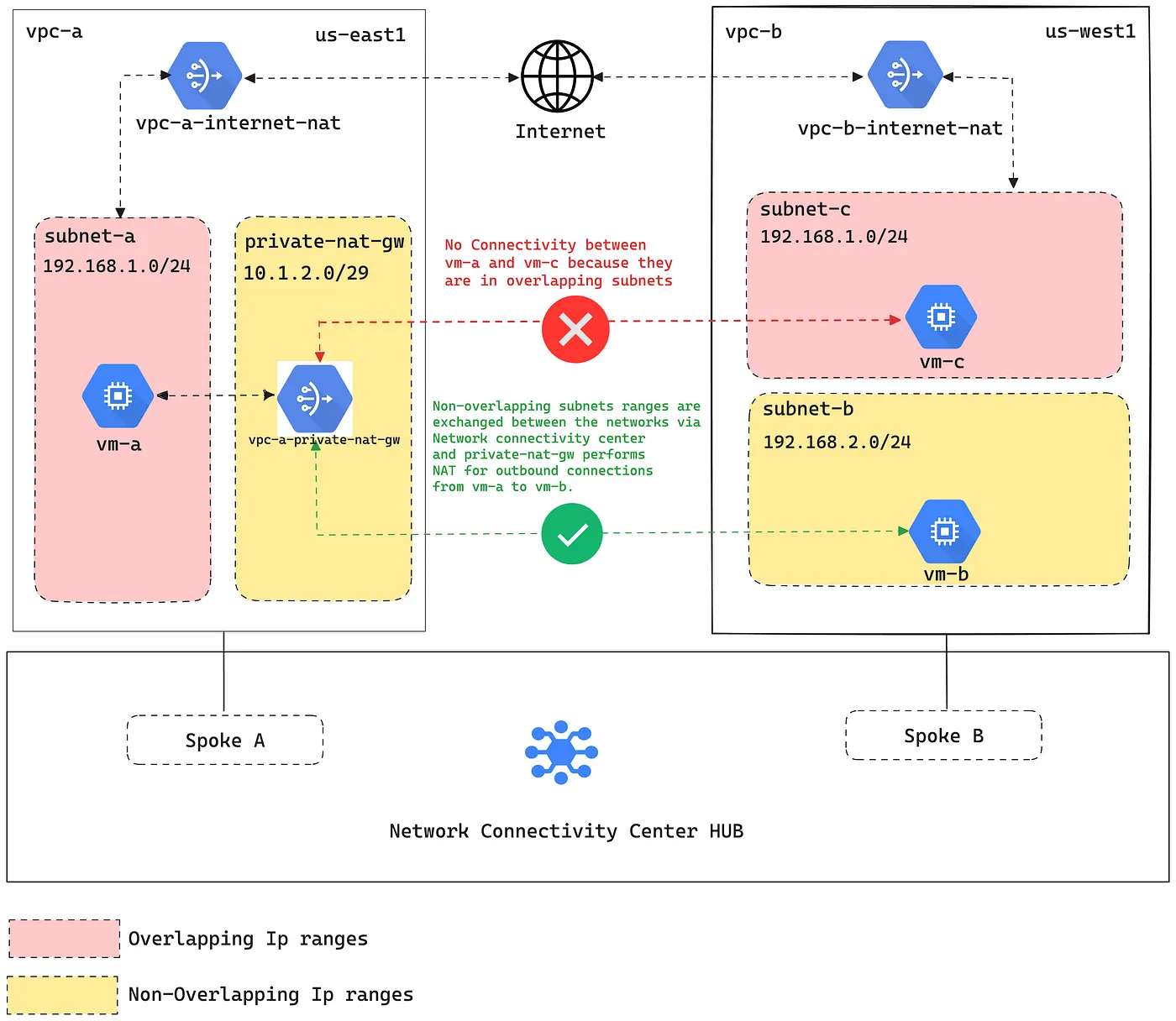

In this setup, vpc-a and vpc-b each have a subnet with an overlapping IP address range. vpc-b also has a non-overlapping subnet that hosts a sample application.

A private NAT gateway will be deployed in vpc-a to allow instances in the overlapping subnet to connect to the sample application in the non-overlapping subnet.

Setup Network and Deploy Sample Application

Step 1: Set up the necessary environment variables.

```shell
export PROJECT_ID="your-project-id" # ex: chimbu-playground
export VPC_A_SUBNET_REGION="us-east1"
export VPC_A_SUBNET_A_CIDR="192.168.1.0/24"
export VPC_B_SUBNET_REGION="us-west1"
export VPC_B_SUBNET_B_CIDR="192.168.2.0/24" # non-overlapping subnet between vpc-a and vpc-b
export VPC_B_SUBNET_C_CIDR="192.168.1.0/24" # overlapping subnet between vpc-a and vpc-b
export VPC_A_PRIVATE_NAT_GW_CIDR="10.1.2.0/29"

# Most of the commands below do not pass --project, so make it the default
gcloud config set project "$PROJECT_ID"
```
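Before creating any resources, it can help to sanity-check the address plan. The sketch below assumes the variables from Step 1 are exported and defines hypothetical helper functions. It checks that subnet-b does not overlap subnet-a, that the two subnets in vpc-b do not overlap each other (subnets within one VPC network cannot), and that the Private NAT range overlaps none of the other subnets.

```shell
# Sketch: sanity-check the Step 1 address plan before creating resources.
# Assumes the VPC_* variables from Step 1 are set. Helper names are hypothetical.

# Convert a dotted-quad IPv4 address (e.g. 192.168.1.0) to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address of a CIDR block as "start end" integers.
cidr_bounds() {
  local prefix_len size start
  prefix_len=${1#*/}
  size=$(( 1 << (32 - prefix_len) ))
  start=$(( $(ip_to_int "${1%/*}") / size * size ))  # round down to the block boundary
  echo "$start $(( start + size - 1 ))"
}

# Succeed (exit status 0) if two CIDR blocks share at least one address.
cidrs_overlap() {
  local a b
  a=$(cidr_bounds "$1")
  b=$(cidr_bounds "$2")
  [ "${a% *}" -le "${b#* }" ] && [ "${b% *}" -le "${a#* }" ]
}

# Check the relationships this walkthrough depends on; return 1 on any conflict.
check_address_plan() {
  local status=0 cidr
  if ! cidrs_overlap "$VPC_A_SUBNET_A_CIDR" "$VPC_B_SUBNET_C_CIDR"; then
    echo "note: subnet-a and subnet-c do not overlap, so the overlap scenario is not reproduced" >&2
  fi
  if cidrs_overlap "$VPC_A_SUBNET_A_CIDR" "$VPC_B_SUBNET_B_CIDR"; then
    echo "error: subnet-b ($VPC_B_SUBNET_B_CIDR) overlaps subnet-a" >&2
    status=1
  fi
  if cidrs_overlap "$VPC_B_SUBNET_B_CIDR" "$VPC_B_SUBNET_C_CIDR"; then
    echo "error: subnet-b and subnet-c are both in vpc-b and must not overlap" >&2
    status=1
  fi
  for cidr in "$VPC_A_SUBNET_A_CIDR" "$VPC_B_SUBNET_B_CIDR" "$VPC_B_SUBNET_C_CIDR"; do
    if cidrs_overlap "$VPC_A_PRIVATE_NAT_GW_CIDR" "$cidr"; then
      echo "error: Private NAT range $VPC_A_PRIVATE_NAT_GW_CIDR overlaps $cidr" >&2
      status=1
    fi
  done
  return "$status"
}
```

Run `check_address_plan` after the exports above; it returns a non-zero status and prints the conflicting ranges if the plan has a problem.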

Step 2: Create the VPC networks and subnets.

```shell
# Create vpc-a custom network and subnet
gcloud beta compute networks create vpc-a \
    --subnet-mode=custom

gcloud beta compute networks subnets create subnet-a \
    --network="vpc-a" \
    --role="ACTIVE" \
    --purpose="PRIVATE" \
    --range=$VPC_A_SUBNET_A_CIDR \
    --region=$VPC_A_SUBNET_REGION

# Create vpc-b custom network and subnets
gcloud beta compute networks create vpc-b \
    --subnet-mode=custom

gcloud beta compute networks subnets create subnet-b \
    --network="vpc-b" \
    --role="ACTIVE" \
    --purpose="PRIVATE" \
    --range=$VPC_B_SUBNET_B_CIDR \
    --region=$VPC_B_SUBNET_REGION

gcloud beta compute networks subnets create subnet-c \
    --network="vpc-b" \
    --role="ACTIVE" \
    --purpose="PRIVATE" \
    --range=$VPC_B_SUBNET_C_CIDR \
    --region=$VPC_B_SUBNET_REGION
```

Step 3: Create firewall rules for Identity-Aware Proxy (IAP) TCP forwarding to enable SSH access to VM instances.

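The VM instances created in the following steps have no external IP addresses. IAP TCP forwarding lets users establish an encrypted tunnel over which they can forward SSH, RDP, and other traffic to those instances. The firewall rules below allow SSH (TCP port 22) from 35.235.240.0/20, the address range that IAP uses for TCP forwarding.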

Ensure users are granted the IAP-secured Tunnel User role (roles/iap.tunnelResourceAccessor) so they can perform TCP forwarding and related tasks.
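One way to grant that role is with `gcloud projects add-iam-policy-binding`. The helper below is a hypothetical wrapper that grants the role at the project level, assuming `PROJECT_ID` is set as in Step 1; the member string (for example `user:alice@example.com`) is a placeholder you would replace.

```shell
# Hypothetical helper: grant the IAP-secured Tunnel User role on the project.
# $1 is an IAM principal such as user:alice@example.com (placeholder).
grant_iap_tunnel_user() {
  gcloud projects add-iam-policy-binding "$PROJECT_ID" \
    --member="$1" \
    --role="roles/iap.tunnelResourceAccessor"
}
```

For example: `grant_iap_tunnel_user "user:alice@example.com"`.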

```shell
gcloud compute firewall-rules create vpc-a-iap \
    --direction=INGRESS \
    --priority=1000 \
    --network=vpc-a \
    --action=ALLOW \
    --rules=tcp:22 \
    --source-ranges=35.235.240.0/20

gcloud compute firewall-rules create vpc-b-iap \
    --direction=INGRESS \
    --priority=1000 \
    --network=vpc-b \
    --action=ALLOW \
    --rules=tcp:22 \
    --source-ranges=35.235.240.0/20
```

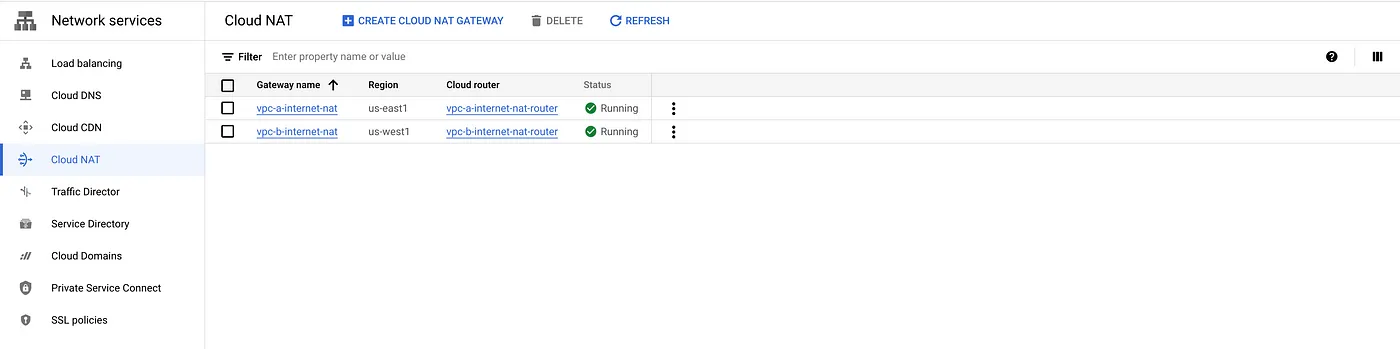

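Step 4: Create a Cloud NAT gateway in each network for internet access, so that the VM instances, which have no external IP addresses, can download packages. This public Cloud NAT gateway does not provide private communication between the VPCs; that is configured separately later.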

```shell
# vpc-a Cloud Router and NAT
gcloud compute routers create vpc-a-internet-nat-router \
    --network=vpc-a \
    --region=$VPC_A_SUBNET_REGION

gcloud beta compute routers nats create vpc-a-internet-nat \
    --router=vpc-a-internet-nat-router \
    --nat-all-subnet-ip-ranges \
    --region=$VPC_A_SUBNET_REGION \
    --auto-allocate-nat-external-ips

# vpc-b Cloud Router and NAT
gcloud compute routers create vpc-b-internet-nat-router \
    --network=vpc-b \
    --region=$VPC_B_SUBNET_REGION

gcloud beta compute routers nats create vpc-b-internet-nat \
    --router=vpc-b-internet-nat-router \
    --nat-all-subnet-ip-ranges \
    --region=$VPC_B_SUBNET_REGION \
    --auto-allocate-nat-external-ips
```

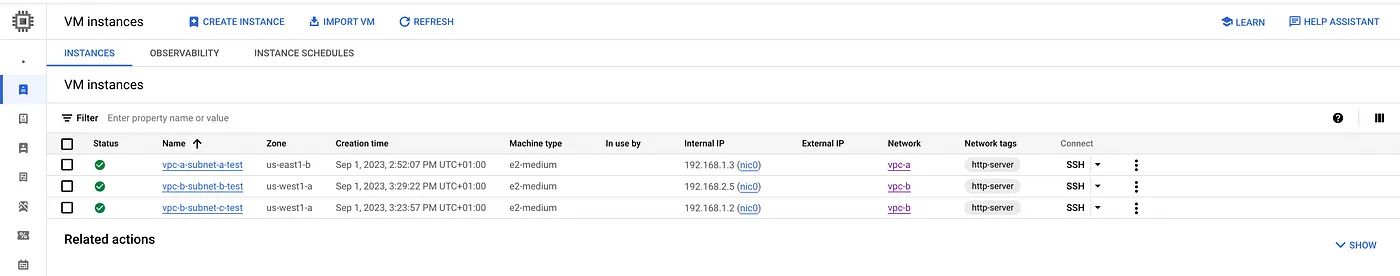

Step 5: Create test Compute Engine instances without external IP addresses.

```shell
# Test instance in vpc-a network, subnet-a
gcloud compute instances create vpc-a-subnet-a-test \
    --project=$PROJECT_ID \
    --zone="us-east1-b" \
    --machine-type=e2-medium \
    --network-interface=stack-type=IPV4_ONLY,subnet=subnet-a,no-address \
    --tags=http-server \
    --create-disk=auto-delete=yes,boot=yes,device-name=vpc-a-subnet-a-test,image=projects/debian-cloud/global/images/debian-11-bullseye-v20230814,mode=rw,size=10,type=projects/$PROJECT_ID/zones/us-east1-b/diskTypes/pd-balanced

# Test instance in vpc-b network, subnet-b, running nginx.
# The startup script (run as root) downloads the nginx packages through Cloud NAT.
gcloud compute instances create vpc-b-subnet-b-test \
    --project=$PROJECT_ID \
    --zone="us-west1-a" \
    --machine-type=e2-medium \
    --network-interface=stack-type=IPV4_ONLY,subnet=subnet-b,no-address \
    --metadata=startup-script=$'#!/bin/sh\napt-get update\napt-get install -y nginx' \
    --tags=http-server \
    --create-disk=auto-delete=yes,boot=yes,device-name=vpc-b-subnet-b-test,image=projects/debian-cloud/global/images/debian-11-bullseye-v20230814,mode=rw,size=10,type=projects/$PROJECT_ID/zones/us-west1-a/diskTypes/pd-balanced

# Test instance in vpc-b network, subnet-c
gcloud compute instances create vpc-b-subnet-c-test \
    --project=$PROJECT_ID \
    --zone="us-west1-a" \
    --machine-type=e2-medium \
    --network-interface=stack-type=IPV4_ONLY,subnet=subnet-c,no-address \
    --tags=http-server \
    --create-disk=auto-delete=yes,boot=yes,device-name=vpc-b-subnet-c-test,image=projects/debian-cloud/global/images/debian-11-bullseye-v20230814,mode=rw,size=10,type=projects/$PROJECT_ID/zones/us-west1-a/diskTypes/pd-balanced
```

At this point, the vpc-a-subnet-a-test instance in vpc-a cannot access the service running on the vpc-b-subnet-b-test instance because there is no network connectivity between the VPCs, and we cannot create VPC Network Peering between them because of the overlapping subnet.

Setup Hub and VPC Spokes in Network Connectivity Center

To enable communication between two VPC networks with overlapping subnet IP ranges, you need to configure the VPC networks as VPC spokes connected to the same Network Connectivity Center hub.

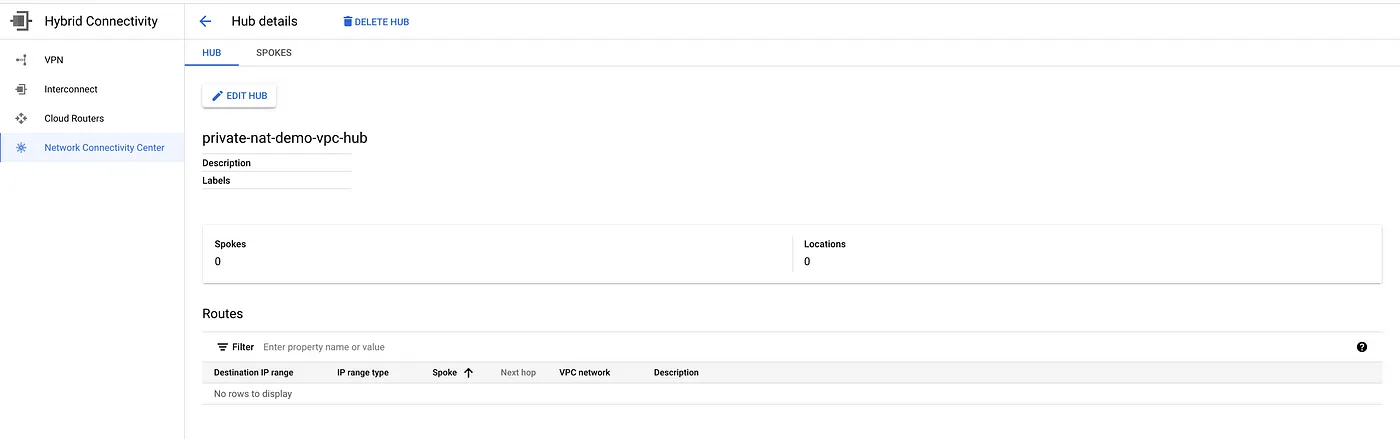

Step 6: Create a Network Connectivity Center hub.

```shell
gcloud network-connectivity hubs create private-nat-demo-vpc-hub
```

Step 7: Add the VPC networks as spokes to the hub, and exclude the overlapping subnet ranges from export.

```shell
# Add vpc-a to the hub and exclude VPC_A_SUBNET_A_CIDR from export.
gcloud network-connectivity spokes linked-vpc-network create vpc-a \
    --hub="https://www.googleapis.com/networkconnectivity/v1/projects/$PROJECT_ID/locations/global/hubs/private-nat-demo-vpc-hub" \
    --global \
    --vpc-network="https://www.googleapis.com/compute/v1/projects/$PROJECT_ID/global/networks/vpc-a" \
    --exclude-export-ranges=$VPC_A_SUBNET_A_CIDR

# Add vpc-b to the hub and exclude VPC_B_SUBNET_C_CIDR from export.
# VPC_B_SUBNET_B_CIDR will be exported between the VPCs.
gcloud network-connectivity spokes linked-vpc-network create vpc-b \
    --hub="https://www.googleapis.com/networkconnectivity/v1/projects/$PROJECT_ID/locations/global/hubs/private-nat-demo-vpc-hub" \
    --global \
    --vpc-network="https://www.googleapis.com/compute/v1/projects/$PROJECT_ID/global/networks/vpc-b" \
    --exclude-export-ranges=$VPC_B_SUBNET_C_CIDR
```

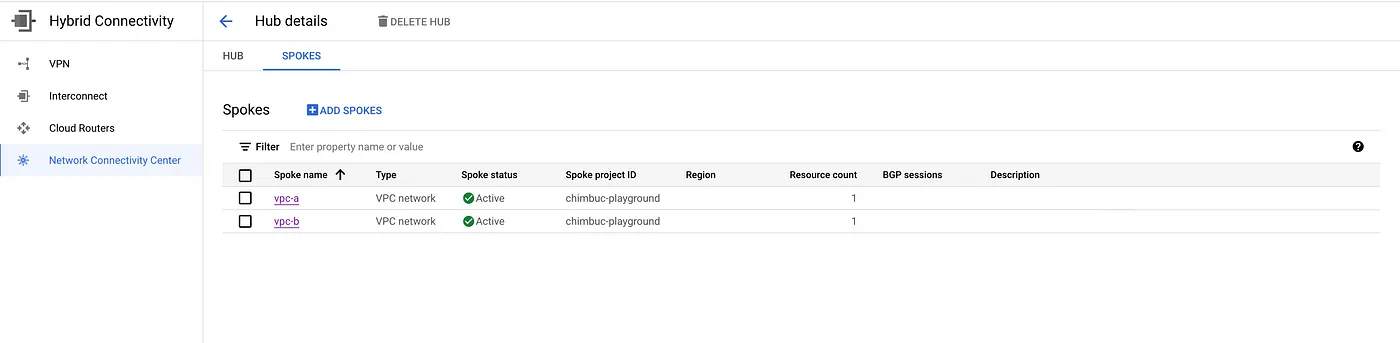

VPC Spokes

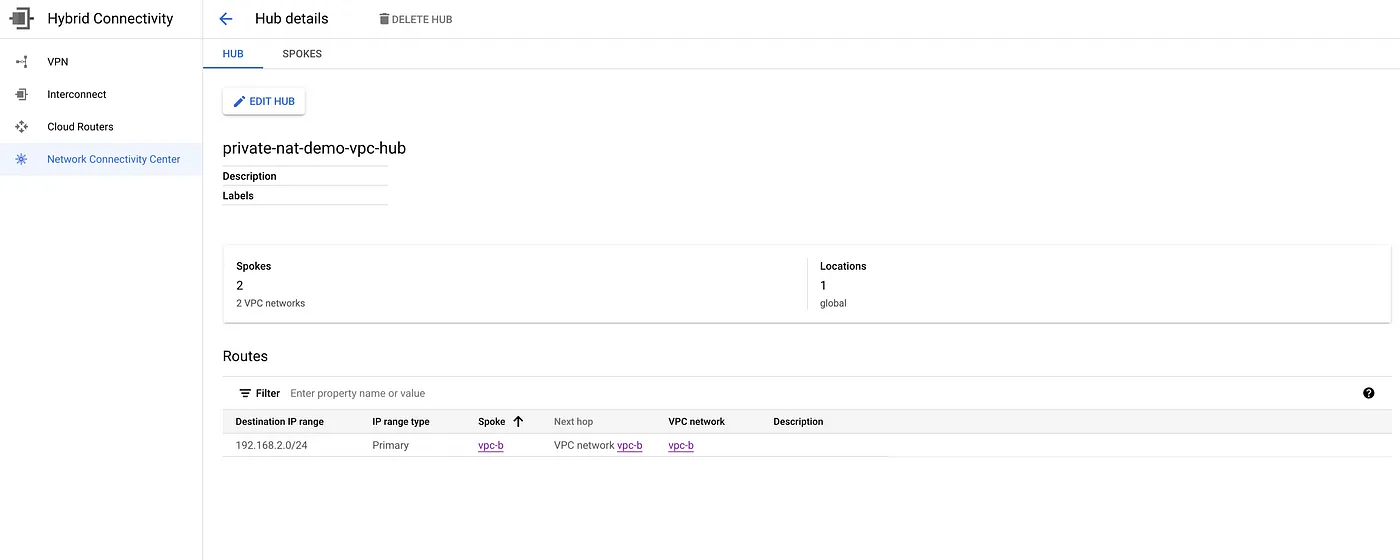

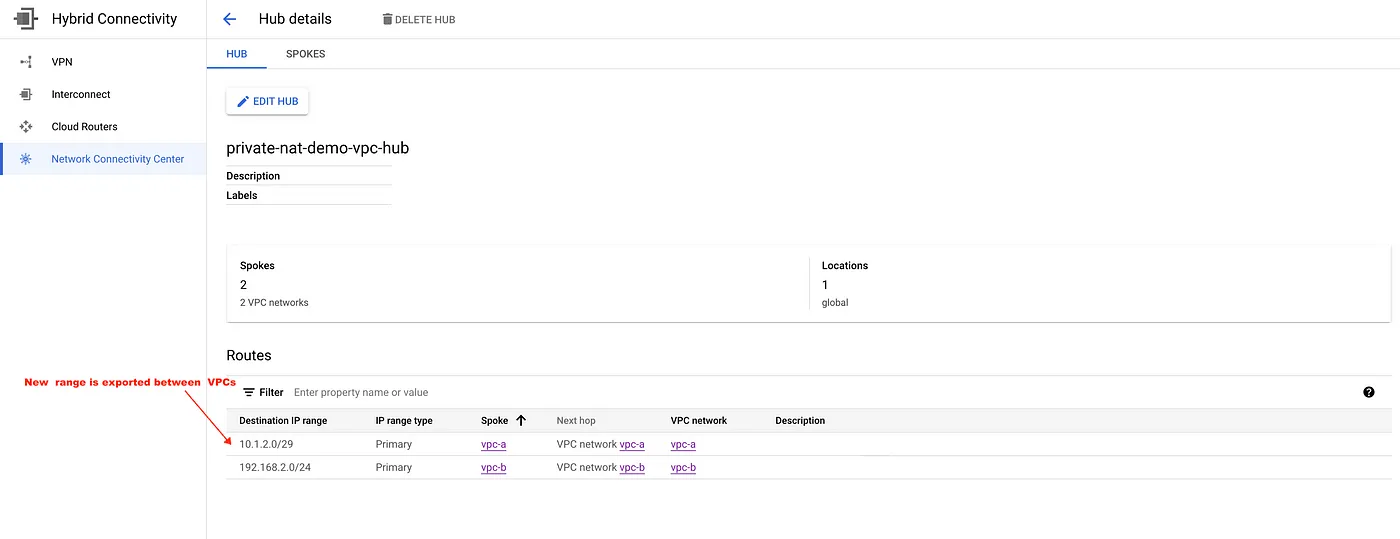

Exchanged routes between the VPCs

Setup Inter-VPC NAT

The Inter-VPC NAT requires a dedicated subnet with the purpose of PRIVATE_NAT. The Private NAT gateway uses IP address ranges from this subnet to perform NAT. This subnet should not overlap with an existing subnet in any VPC spokes attached to the same Network Connectivity Center hub. This subnet is used only for Private NAT.

Step 8: Create a subnet for Private NAT. It is created in vpc-a because the instances in vpc-a initiate the connections to the service running in vpc-b, and Private NAT only performs NAT for outbound connections.

```shell
gcloud beta compute networks subnets create private-nat-gateway \
    --network=vpc-a \
    --region=$VPC_A_SUBNET_REGION \
    --range=$VPC_A_PRIVATE_NAT_GW_CIDR \
    --purpose=PRIVATE_NAT
```

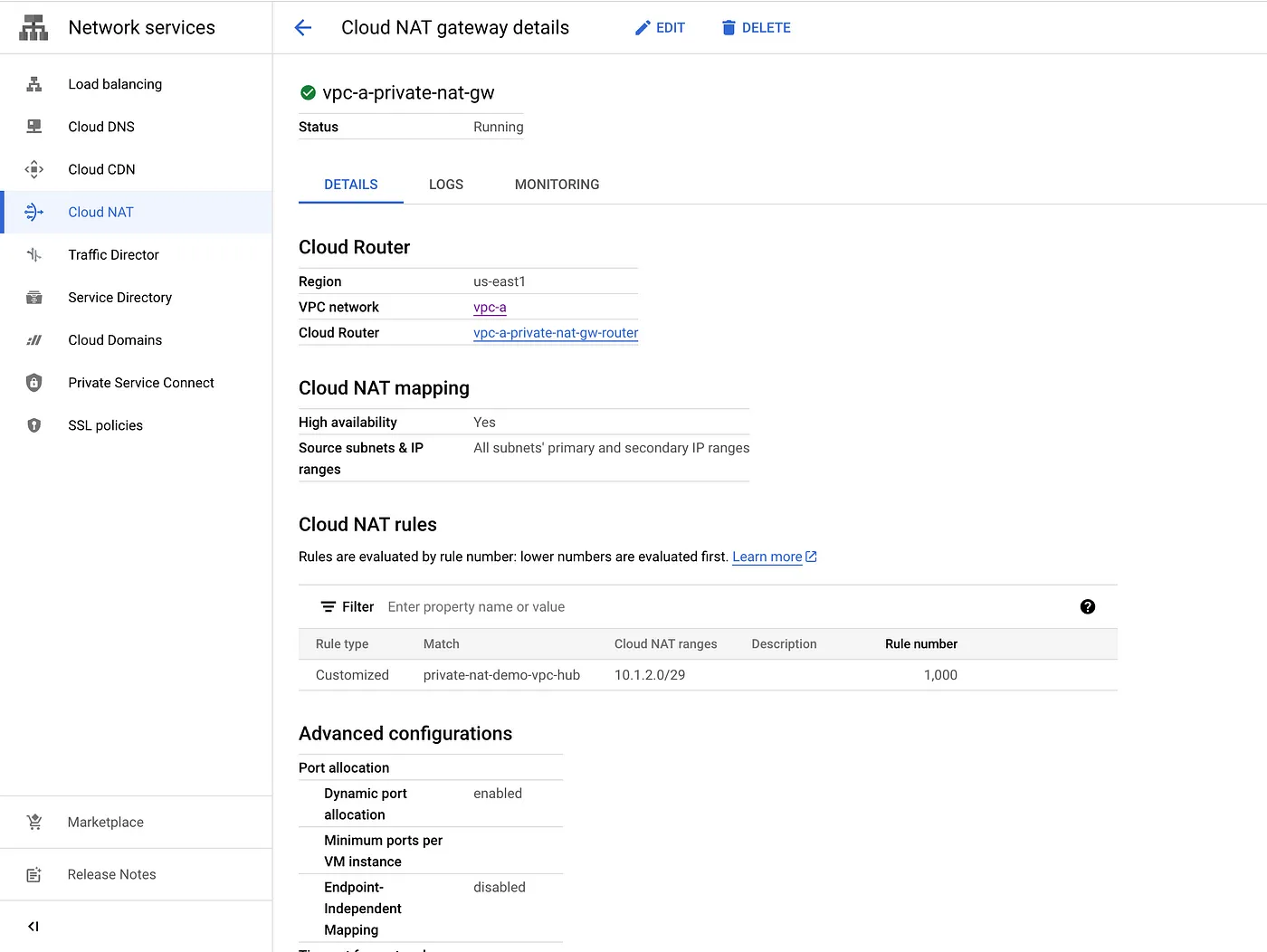

Step 9: Create a Cloud Router and a Private NAT gateway.

```shell
gcloud compute routers create vpc-a-private-nat-gw-router \
    --network="vpc-a" \
    --region=$VPC_A_SUBNET_REGION

gcloud beta compute routers nats create vpc-a-private-nat-gw \
    --router=vpc-a-private-nat-gw-router \
    --type=PRIVATE \
    --nat-all-subnet-ip-ranges \
    --region=$VPC_A_SUBNET_REGION
```
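Before adding the NAT rule, you may want to confirm that the subnet and gateway were created with the intended settings. This hypothetical helper reads the subnet's `purpose` and the NAT gateway's `type` with `--format='value(...)'`. It assumes the resource names from Steps 8 and 9, that `VPC_A_SUBNET_REGION` is set, and that the beta API exposes these fields under those names.

```shell
# Hypothetical check: succeed only if the Private NAT subnet and gateway exist
# with the expected settings. Assumes the resource names from Steps 8 and 9.
verify_private_nat_setup() {
  local purpose nat_type
  purpose=$(gcloud beta compute networks subnets describe private-nat-gateway \
    --region="$VPC_A_SUBNET_REGION" \
    --format='value(purpose)')
  nat_type=$(gcloud beta compute routers nats describe vpc-a-private-nat-gw \
    --router=vpc-a-private-nat-gw-router \
    --region="$VPC_A_SUBNET_REGION" \
    --format='value(type)')
  echo "subnet purpose: $purpose, NAT type: $nat_type"
  [ "$purpose" = "PRIVATE_NAT" ] && [ "$nat_type" = "PRIVATE" ]
}
```

If everything matches, `verify_private_nat_setup` prints both values and returns a zero exit status.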

Step 10: Create a NAT rule in the Private NAT gateway to perform NAT on traffic egressing through the source VPC spoke to any of the peer VPC spokes attached to a matching Network Connectivity Center hub. Based on the NAT rule, the Private NAT gateway assigns NAT IP addresses from the Private NAT subnet to perform NAT on the traffic.

```shell
# The match expression uses $PROJECT_ID instead of a hard-coded project ID.
gcloud beta compute routers nats rules create 1000 \
    --router=vpc-a-private-nat-gw-router \
    --nat=vpc-a-private-nat-gw \
    --match="nexthop.hub == \"//networkconnectivity.googleapis.com/projects/$PROJECT_ID/locations/global/hubs/private-nat-demo-vpc-hub\"" \
    --source-nat-active-ranges=private-nat-gateway \
    --region=$VPC_A_SUBNET_REGION
```
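The rule's `--match` value is an expression that embeds the hub's full resource name, including the project ID, so it has to be adjusted for your own project. A small hypothetical helper can build it from variables, and a second hypothetical helper lists the rules on the gateway to confirm that rule 1000 exists. Both assume the hub, router, and NAT names used in this article.

```shell
# Hypothetical helper: build the Private NAT rule's match expression for a hub.
# $1 = project ID, $2 = hub name.
hub_match_expr() {
  printf 'nexthop.hub == "//networkconnectivity.googleapis.com/projects/%s/locations/global/hubs/%s"' "$1" "$2"
}

# Hypothetical helper: list the rules on the Private NAT gateway.
list_private_nat_rules() {
  gcloud beta compute routers nats rules list \
    --router=vpc-a-private-nat-gw-router \
    --nat=vpc-a-private-nat-gw \
    --region="$VPC_A_SUBNET_REGION"
}
```

Passing `--match="$(hub_match_expr "$PROJECT_ID" private-nat-demo-vpc-hub)"` to the rule command produces the same expression with your own project ID.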

Step 11: Create a firewall rule in vpc-b to allow the connections from the private NAT gateway.

```shell
gcloud compute firewall-rules create vpc-b-allow-ingress-private-nat-gw \
    --direction=INGRESS \
    --priority=1000 \
    --network=vpc-b \
    --action=ALLOW \
    --rules=tcp \
    --source-ranges=$VPC_A_PRIVATE_NAT_GW_CIDR
```

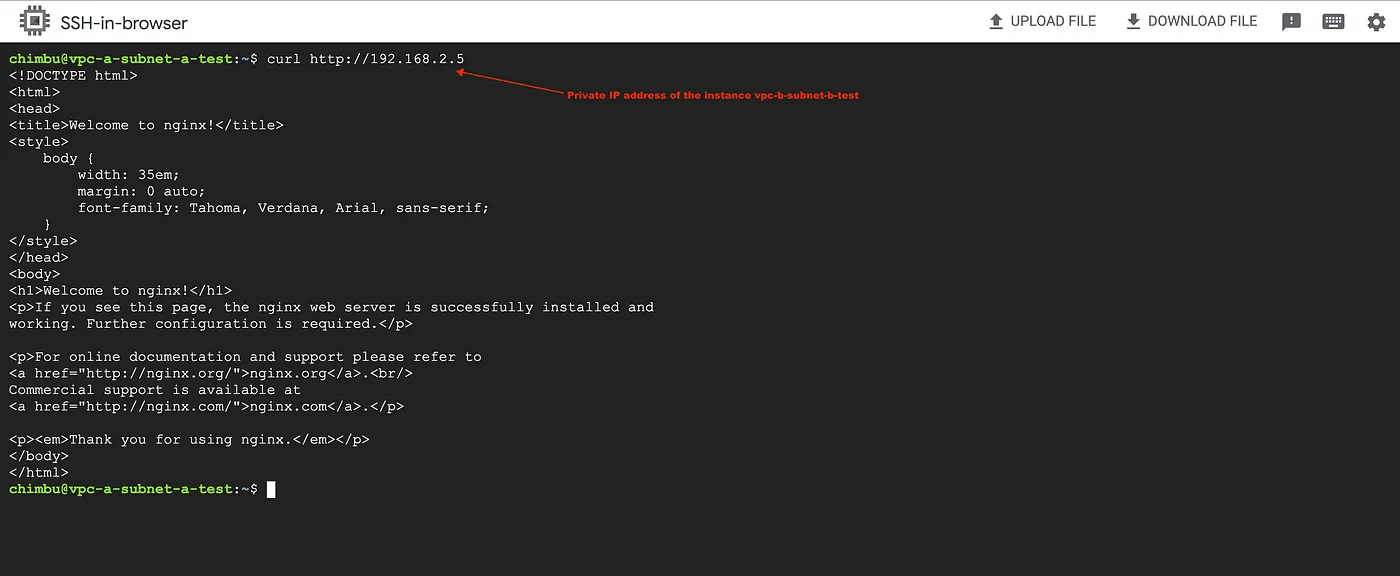

Step 12: Test the connectivity between the vpc-a and vpc-b networks.

From the screenshot, we can confirm that the instance in vpc-a can access the service running in the non-overlapping subnet of vpc-b through Inter-VPC NAT.
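To reproduce the test from the command line, the hypothetical helpers below look up the internal IP address of the nginx instance and run curl on the vpc-a instance over an IAP SSH tunnel. They assume the instance names and zones from Step 5 and nginx listening on its default port 80. They use curl rather than ping because Inter-VPC NAT supports only TCP and UDP connections.

```shell
# Hypothetical helper: print the primary internal IP address of an instance.
# $1 = instance name, $2 = zone.
internal_ip() {
  gcloud compute instances describe "$1" --zone="$2" \
    --format='get(networkInterfaces[0].networkIP)'
}

# Hypothetical helper: SSH to the source VM through IAP and request the target
# over HTTP, printing only the HTTP status code (5-second timeout).
# $1 = source instance, $2 = source zone, $3 = target IP address.
curl_from_vm() {
  gcloud compute ssh "$1" --zone="$2" --tunnel-through-iap \
    --command="curl -sS -m 5 -o /dev/null -w '%{http_code}\n' http://$3/"
}
```

For example, `curl_from_vm vpc-a-subnet-a-test us-east1-b "$(internal_ip vpc-b-subnet-b-test us-west1-a)"` should print an HTTP status code such as 200 once the hub, spokes, Private NAT, and firewall rule are in place; before that, the request would be expected to time out.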

Conclusion

Inter-VPC NAT is a powerful tool that can simplify private communication between networks with overlapping IP ranges. With Inter-VPC NAT, a resource can privately communicate with resources in non-overlapping subnets of other VPC networks, even if the resource itself sits in a subnet that overlaps with subnets elsewhere.

To reach services hosted in overlapping subnets, which Inter-VPC NAT does not support, use Private Service Connect as described in my previous article. I hope this blog post has been helpful. Reach out to me if you have any questions.