This is a simple step-by-step guide to setting up a multi-cluster Anthos Service Mesh (ASM) using the managed control plane.

The accompanying code can be found here: https://github.com/caddac/anthos-examples/tree/main/asm

What is a service mesh and why would you need one?

A service mesh is an abstraction built on top of various compute platforms (in this case, Kubernetes clusters) that simplifies inter-service communication, observability and security. It moves these responsibilities into the platform, which keeps the application simpler. In this blog post, we’ll deploy Anthos Service Mesh, which is a managed Istio installation. Istio is a service mesh for Kubernetes that gives you consistent service-to-service observability and security. It achieves this by routing all inter-service traffic through Envoy proxies that run as sidecar containers in your pods.

Services can use Istio’s consistent and transparent patterns to communicate with other services, and they don’t need to concern themselves with how to reach another service, how to secure incoming requests, or how to publish metrics about inter-service traffic. Istio handles all of this. These features matter even more in a multi-cluster configuration, where the operational overhead is higher still.

Using a service mesh does come with some drawbacks, including setup complexity, resource overhead and cost. Istio has many features and several ways to configure it, including istioctl, the IstioOperator API and Helm. However, Anthos Service Mesh with the managed control plane supports neither the IstioOperator API nor Helm.

OK, here we go!

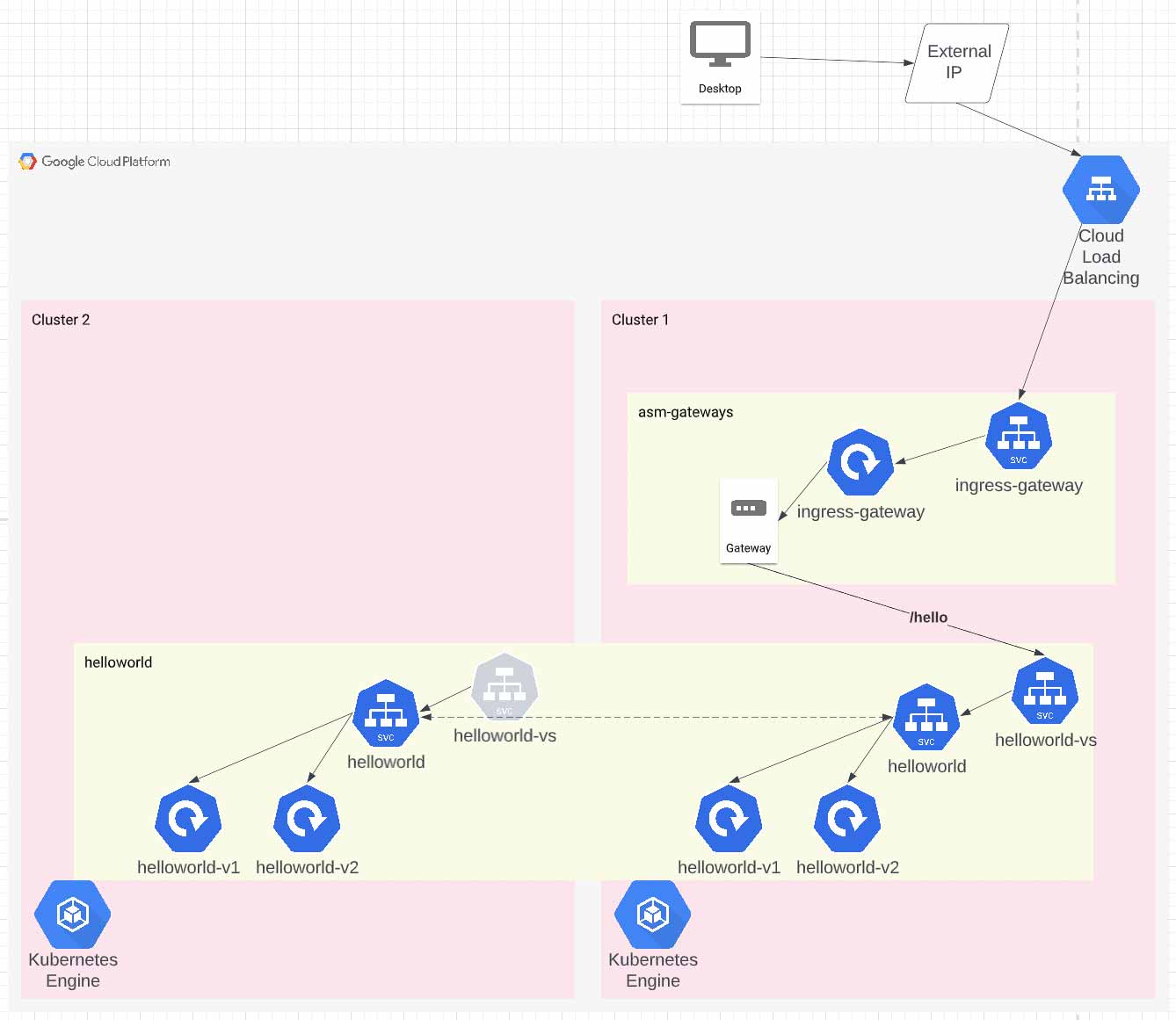

In this tutorial, we’ll build two GKE clusters and deploy the Istio helloworld sample application to them. Then we’ll install a single ingress gateway (on just one cluster) and show that traffic is load-balanced across the four helloworld pods running on the two GKE clusters.

Deploy the Terraform

In the asm/infra directory, update the values in the var_inputs.auto.tfvars file.

Initialize, plan and apply it:

$ terraform init
$ terraform plan
$ terraform apply

Note: If the apply fails, try applying again. With this many dependent resources created at once, Terraform can get ahead of itself, for example by creating a resource before something it depends on is fully ready. I had to apply it three times to get all the resources created.
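Because a single apply may not converge, a small retry wrapper saves re-running the command by hand. This is a minimal sketch: the `retry` helper is hypothetical (it is not part of the anthos-examples repo), and the attempt count of three simply mirrors the experience described above.

```shell
# retry MAX_ATTEMPTS CMD [ARGS...]
# Runs CMD up to MAX_ATTEMPTS times and stops at the first success.
# Hypothetical helper; it is not part of the anthos-examples repo.
retry() {
  _retry_max=$1
  shift
  _retry_n=1
  while [ "$_retry_n" -le "$_retry_max" ]; do
    if "$@"; then
      return 0
    fi
    echo "Attempt $_retry_n of $_retry_max failed: $*" >&2
    _retry_n=$((_retry_n + 1))
  done
  return 1
}

# Usage from asm/infra; -auto-approve skips Terraform's interactive prompt:
#   retry 3 terraform apply -auto-approve
```

Only use `-auto-approve` once you have reviewed the plan; the wrapper returns non-zero if every attempt fails, so it still composes with `&&` in scripts.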

There is a lot going on here, so let's cover it briefly.

This deploys a new GCP project and enables several required APIs [1]. Quick note: the official docs seem to be missing the Security Token Service (sts.googleapis.com) and Anthos (anthos.googleapis.com) APIs, so make sure you enable those too.
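If you bring your own project instead of letting the Terraform create it, you can enable the APIs with gcloud. This is a hypothetical sketch: `enable_asm_apis` is our own name, and the API list is an assumed subset (a few core services plus the two noted above); treat [1] as the authoritative list.

```shell
# enable_asm_apis PROJECT_ID
# Enables an assumed subset of the APIs ASM needs, including the sts and
# anthos APIs that the official list seemed to omit. Check [1] for the full set.
enable_asm_apis() {
  gcloud services enable \
    container.googleapis.com \
    gkehub.googleapis.com \
    mesh.googleapis.com \
    sts.googleapis.com \
    anthos.googleapis.com \
    --project="$1"
}

# Usage:
#   enable_asm_apis my-project-id
```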

It also deploys a VPC, two subnetworks with secondary ranges, and a firewall rule that lets everything communicate. Finally, it enables the fleet (GKE Hub) mesh feature for the project.

Each of the two cluster files deploys one cluster; they differ only in cluster name, region and subnet references. There are two things to note in the cluster config. First, we set the mesh_id label so GCP knows which mesh the cluster belongs to. Second, we enable Workload Identity, which allows Kubernetes service accounts to act as GCP service accounts [2] without needing to create or download JSON keys.
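For reference, the two values the cluster config sets can be derived as below. This is a sketch: the helper names are hypothetical, and it assumes the `proj-PROJECT_NUMBER` format for the mesh_id label and the `PROJECT_ID.svc.id.goog` Workload Identity pool. The repo sets these in Terraform, not with these commands.

```shell
# mesh_id_label PROJECT_NUMBER -> value for the cluster's mesh_id label
mesh_id_label() {
  printf 'proj-%s\n' "$1"
}

# workload_pool PROJECT_ID -> Workload Identity pool for the cluster
workload_pool() {
  printf '%s.svc.id.goog\n' "$1"
}

# Usage (a gcloud equivalent of the Terraform settings; names are placeholders):
#   NUM=$(gcloud projects describe "$PROJECT_ID" --format='value(projectNumber)')
#   gcloud container clusters update "${PROJECT_ID}-cluster1" --region us-west1 \
#     --update-labels "mesh_id=$(mesh_id_label "$NUM")"
#   gcloud container clusters update "${PROJECT_ID}-cluster1" --region us-west1 \
#     --workload-pool "$(workload_pool "$PROJECT_ID")"
```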

We register each cluster to the fleet, using the cluster name as the membership ID.
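Outside Terraform, the equivalent registration with gcloud might look like the sketch below. `register_cluster` is a hypothetical helper; note that older Cloud SDK releases name the command group `gcloud container hub` rather than `gcloud container fleet`.

```shell
# register_cluster PROJECT_ID LOCATION CLUSTER
# Registers a GKE cluster to the project's fleet, using the cluster name as the
# membership ID, with fleet Workload Identity enabled. Sketch only.
register_cluster() {
  gcloud container fleet memberships register "$3" \
    --gke-cluster="$2/$3" \
    --enable-workload-identity \
    --project="$1"
}

# Usage:
#   register_cluster "$PROJECT_ID" us-west1 "${PROJECT_ID}-cluster1"
#   register_cluster "$PROJECT_ID" us-east1 "${PROJECT_ID}-cluster2"
#   gcloud container fleet memberships list --project="$PROJECT_ID"
```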

Finally, we install the managed control plane. We’re using automatic control plane management (which means “managed”), but which revision do we get? Check the revision label on the namespace to see which control plane revision is in use [5]. Yes, it’s that easy!
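One way to check the revision from the command line is sketched below. `show_asm_revision` is a hypothetical helper; it assumes the namespace carries an `istio.io/rev` label (for example `asm-managed`) and that the managed control plane exposes `ControlPlaneRevision` resources in `istio-system`.

```shell
# show_asm_revision CONTEXT NAMESPACE
# Prints the namespace's istio.io/rev label, then the control plane
# revisions known to the cluster. Assumes managed ASM's ControlPlaneRevision CRD.
show_asm_revision() {
  kubectl --context="$1" get namespace "$2" \
    -o jsonpath='{.metadata.labels.istio\.io/rev}{"\n"}'
  kubectl --context="$1" get controlplanerevision -n istio-system
}

# Usage (context name as produced by get-credentials):
#   show_asm_revision "gke_${PROJECT_ID}_us-west1_${PROJECT_ID}-cluster1" helloworld
# The fleet-level view of control plane management:
#   gcloud container fleet mesh describe --project="$PROJECT_ID"
```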

At this point, we have two clusters running in the same fleet, both with ASM installed using the managed control plane. We could start installing Istio components now, but cross-cluster service discovery isn’t working yet.

Configure cross-cluster service discovery

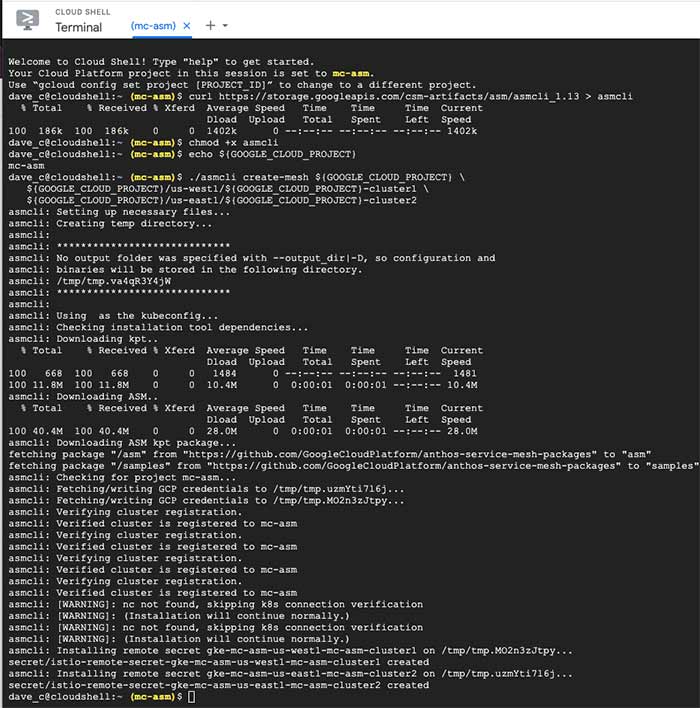

We need to install a secret for each cluster onto every other cluster in our fleet. Luckily, there is a CLI tool for this: asmcli. Unfortunately, it doesn’t work on macOS yet, so it’s easiest to run it in Cloud Shell.

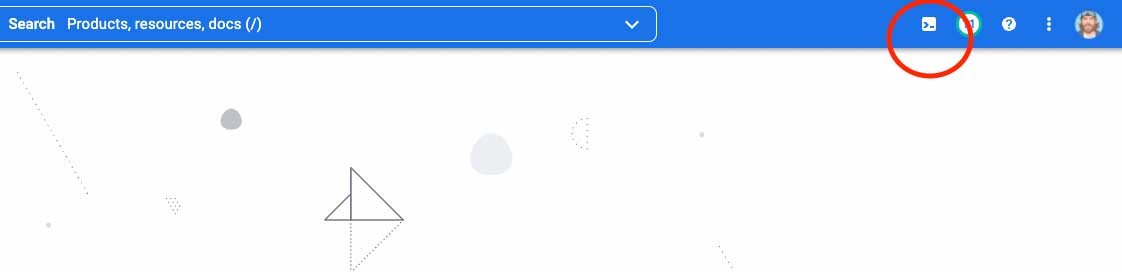

Open Cloud Shell in your project by browsing to the GCP console and clicking the Activate Cloud Shell button.

After Cloud Shell opens at the bottom of the screen, download asmcli into your working directory and run the following commands:

$ chmod +x asmcli

$ ./asmcli create-mesh ${GOOGLE_CLOUD_PROJECT} \
    ${GOOGLE_CLOUD_PROJECT}/us-west1/${GOOGLE_CLOUD_PROJECT}-cluster1 \
    ${GOOGLE_CLOUD_PROJECT}/us-east1/${GOOGLE_CLOUD_PROJECT}-cluster2

Quick note here: If you are using private clusters, there are additional steps to enable cross-cluster service discovery [6][7].
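Once you have kubeconfig contexts for both clusters, you can check that create-mesh left a remote secret on each one. This sketch assumes asmcli creates the secrets in `istio-system` with the upstream Istio label `istio/multiCluster=true`; `count_remote_secrets` is a hypothetical helper. With two clusters, each should hold one secret, for the other cluster.

```shell
# count_remote_secrets CONTEXT
# Prints how many Istio remote secrets (label istio/multiCluster=true, an
# assumption based on upstream Istio) exist in istio-system on a cluster.
count_remote_secrets() {
  kubectl --context="$1" get secrets -n istio-system \
    -l istio/multiCluster=true --no-headers | wc -l | tr -d ' '
}

# Usage:
#   count_remote_secrets "gke_${PROJECT_ID}_us-west1_${PROJECT_ID}-cluster1"
#   count_remote_secrets "gke_${PROJECT_ID}_us-east1_${PROJECT_ID}-cluster2"
```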

Deploy the apps and Istio components

From the asm/manifests directory, we can install apps to test our mesh. On cluster 1, we’re going to install the helloworld app and the Istio components for ingress.

Start by fetching credentials (kubeconfig contexts) for both clusters, with PROJECT_ID set to your project ID:

$ gcloud container clusters get-credentials ${PROJECT_ID}-cluster1 --region us-west1 --project ${PROJECT_ID}

$ gcloud container clusters get-credentials ${PROJECT_ID}-cluster2 --region us-east1 --project ${PROJECT_ID}
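`get-credentials` names each context `gke_PROJECT_LOCATION_CLUSTER`, which is the form the `--context` flags later in this post use. A tiny helper (hypothetical, not from the repo) keeps those names consistent:

```shell
# gke_context PROJECT_ID LOCATION CLUSTER
# Builds the kubeconfig context name that get-credentials creates.
gke_context() {
  printf 'gke_%s_%s_%s\n' "$1" "$2" "$3"
}

# Usage (CTX_1 and CTX_2 are illustrative variable names):
#   CTX_1=$(gke_context "$PROJECT_ID" us-west1 "${PROJECT_ID}-cluster1")
#   CTX_2=$(gke_context "$PROJECT_ID" us-east1 "${PROJECT_ID}-cluster2")
#   kubectl config get-contexts
```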

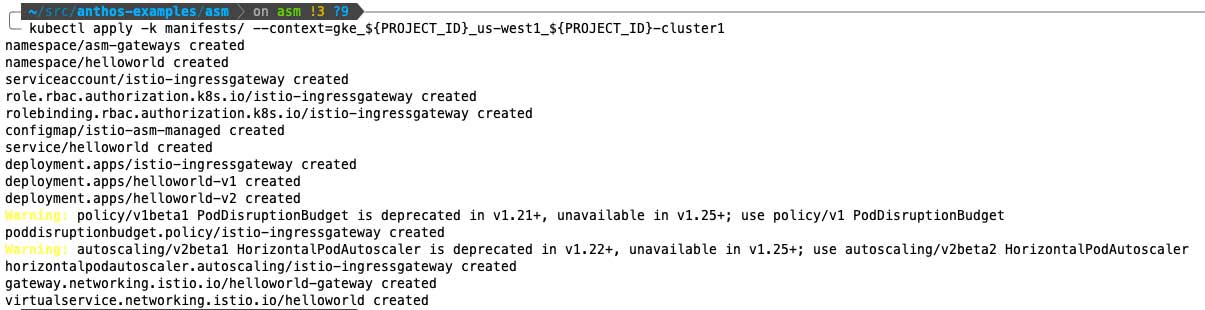

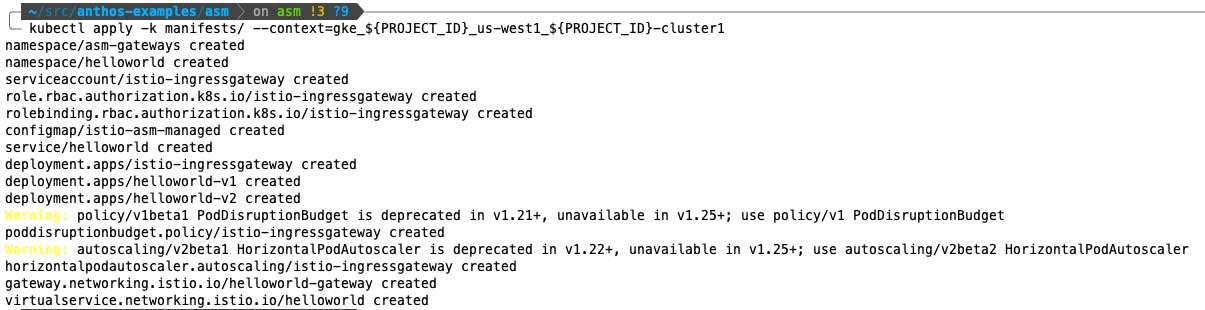

Install cluster 1 by running:

And cluster 2 by running:

Review your work

After a few minutes, everything should be up, and you can inspect your work.

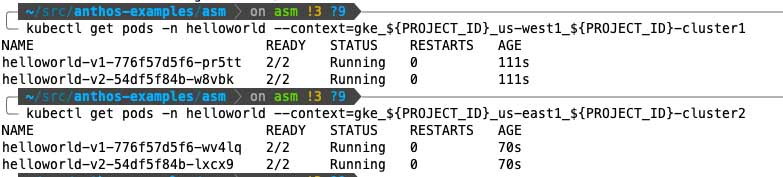

Verify all the helloworld pods are running on both clusters:

$ kubectl get pods -n helloworld \
    --context=gke_${PROJECT_ID}_us-west1_${PROJECT_ID}-cluster1

$ kubectl get pods -n helloworld \
    --context=gke_${PROJECT_ID}_us-east1_${PROJECT_ID}-cluster2
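Beyond being Running, each pod should report 2/2 containers ready: the helloworld container plus the injected istio-proxy sidecar. A sketch that summarizes this, assuming one app container per pod and the default `kubectl get pods` column order (NAME, READY, STATUS, ...); `summarize_pods` is hypothetical:

```shell
# summarize_pods: reads `kubectl get pods --no-headers` output on stdin and
# prints "<ready-with-sidecar>/<total>". A pod counts as ready only if it is
# Running with 2/2 containers ready (app container + istio-proxy).
summarize_pods() {
  awk '{ total++ }
       $2 == "2/2" && $3 == "Running" { ok++ }
       END { printf "%d/%d\n", ok, total }'
}

# Usage:
#   kubectl get pods -n helloworld --no-headers \
#     --context="gke_${PROJECT_ID}_us-west1_${PROJECT_ID}-cluster1" | summarize_pods
```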

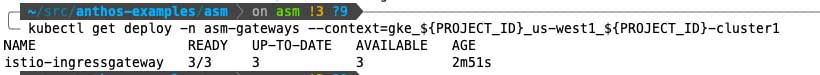

Verify the ingress-gateway deployment is running on cluster 1

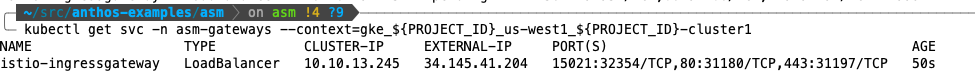

Verify the ingress-gateway service has an external IP on cluster 1

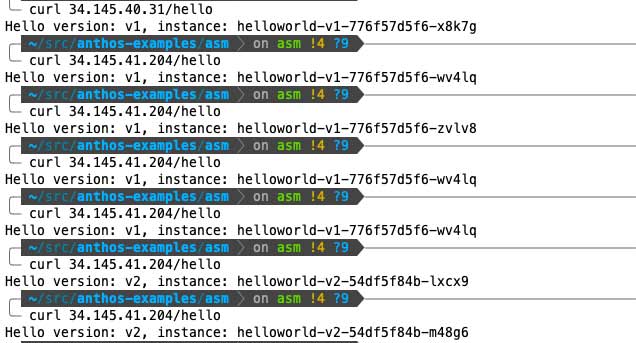
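To grab the gateway’s external IP for the load-balancing check, a jsonpath query against the gateway Service works. The namespace and Service name are placeholders because they depend on the repo’s manifests; `gateway_ip` is a hypothetical helper.

```shell
# gateway_ip CONTEXT NAMESPACE SERVICE
# Prints the first external IP assigned to a LoadBalancer Service
# (empty while the load balancer is still being provisioned).
gateway_ip() {
  kubectl --context="$1" -n "$2" get service "$3" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage (INGRESS_NAMESPACE and INGRESS_SERVICE are placeholders):
#   GATEWAY_IP=$(gateway_ip "gke_${PROJECT_ID}_us-west1_${PROJECT_ID}-cluster1" \
#     INGRESS_NAMESPACE INGRESS_SERVICE)
```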

Finally, verify that cross-cluster load balancing works by querying the public endpoint several times. The version and pod ID in each response change as different pods are hit; across enough requests, you should see 4 different pod IDs and 2 different versions.
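To count what you see rather than eyeballing it, pipe a batch of responses through a small awk summary. This sketch assumes the Istio helloworld sample’s response format (`Hello version: v1, instance: <pod name>`) and the sample gateway’s `/hello` path; `summarize_hello` and `GATEWAY_IP` are hypothetical.

```shell
# summarize_hello: reads helloworld responses on stdin, one per line, in the
# form "Hello version: v1, instance: helloworld-v1-<pod>", and prints how
# many distinct versions and instances (pods) were seen.
summarize_hello() {
  awk -F', ' '
    /^Hello version:/ {
      split($1, ver, ": ")
      split($2, inst, ": ")
      if (!(ver[2] in seen_v)) { seen_v[ver[2]] = 1; nv++ }
      if (!(inst[2] in seen_i)) { seen_i[inst[2]] = 1; ni++ }
    }
    END { printf "versions=%d instances=%d\n", nv, ni }'
}

# Usage (GATEWAY_IP is a placeholder for the gateway's external IP):
#   for n in $(seq 1 20); do curl -s "http://${GATEWAY_IP}/hello"; done | summarize_hello
```

With traffic spread over both clusters, 20 requests will usually report `versions=2 instances=4`, though a small sample can miss a pod by chance.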

Customizing and viewing logs

Enable Envoy access logs

We did this back when we deployed the ASM configuration. Check out the file asm/manifests/gateways/asm-config.yaml: the ConfigMap it deploys configures the Envoy proxies to write their access logs to stdout. You can enable other optional features in similar ways [3].
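For reference, a ConfigMap that turns on stdout access logs under managed ASM looks roughly like the one printed below; where it differs from the repo’s asm-config.yaml, prefer the repo’s file. The name `istio-asm-managed` assumes the `asm-managed` revision, so adjust it if your revision differs; `access_log_configmap` and `CTX` are hypothetical.

```shell
# access_log_configmap: prints a ConfigMap enabling Envoy access logs to
# stdout. The name istio-asm-managed is an assumption tied to the
# asm-managed revision; change it to match your control plane revision.
access_log_configmap() {
  cat <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: istio-asm-managed
  namespace: istio-system
data:
  mesh: |-
    accessLogFile: /dev/stdout
EOF
}

# Usage (CTX is a placeholder for a kubeconfig context name):
#   access_log_configmap | kubectl apply --context="$CTX" -f -
```

Applying this replaces the ConfigMap’s whole `mesh` value, so merge in any settings already present before applying.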

Control plane logs

You can still view control plane logs when using the managed control plane. They are in Logs Explorer under the resource “Istio Control Plane”.
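The same logs can be pulled from the command line with `gcloud logging read`. This sketch assumes the Logs Explorer resource “Istio Control Plane” corresponds to the resource type `istio_control_plane`; `control_plane_logs` is a hypothetical helper.

```shell
# control_plane_logs PROJECT_ID [LIMIT]
# Reads up to LIMIT (default 20) managed control plane log entries from the
# last hour. The istio_control_plane resource type is an assumption.
control_plane_logs() {
  gcloud logging read 'resource.type="istio_control_plane"' \
    --project="$1" --limit="${2:-20}" --freshness=1h
}

# Usage:
#   control_plane_logs "$PROJECT_ID" 50
```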

References

1. https://cloud.google.com/service-mesh/docs/managed/auto-control-plane-with-fleet#before_you_begin
2. https://cloud.google.com/kubernetes-engine/docs/how-to/workload-identity
3. https://cloud.google.com/service-mesh/docs/managed/enable-managed-anthos-service-mesh-optional-features
4. https://cloud.google.com/service-mesh/docs/managed/auto-control-plane-with-fleet
5. https://cloud.google.com/service-mesh/docs/managed/select-a-release-channel#how_to_select_a_release_channel
6. https://cloud.google.com/service-mesh/docs/unified-install/gke-install-multi-cluster#private-clusters-endpoint
7. https://cloud.google.com/service-mesh/docs/unified-install/gke-install-multi-cluster#private-clusters-authorized-network